Design Matrix Examples in R, Clearly Explained!!!

For a complete index of all the StatQuest videos, check out:

If you'd like to support StatQuest, please consider...

...or...

...buying one of my books, a study guide, a t-shirt or hoodie, or a song from the StatQuest store...

...or just donating to StatQuest!

Lastly, if you want to keep up with me as I research and create new StatQuests, follow me on Twitter:

#statquest #regression

Design Matrix Examples in R, Clearly Explained!!!

Design Matrices For Linear Models, Clearly Explained!!!

R : How to create design matrix in r

Design Matrices For Linear Models, Clearly Explained!!!

Design matrix basics

Design Matrices

R : own design matrix in r

Design Matrix As Data Storage | ML Course 2.20

How to Perform Wild Bootstrap using R#r #bootstrap #wildbootstrap #heteroskedastic

Understanding the Expression ~. in R's Model Matrix Function

3D printed Suzuki Jimny - available on printables #3dprinting #3dprinter #3dprinted #3ddruck

Building the Linear Model - Data Analysis with R

Performing Matrix Operations in R

Matrix in R Statistics #Shorts

Gearless Transmission using Elbow mechanism 📌 #mechanical #engineering #cad #project #prototype #3d...

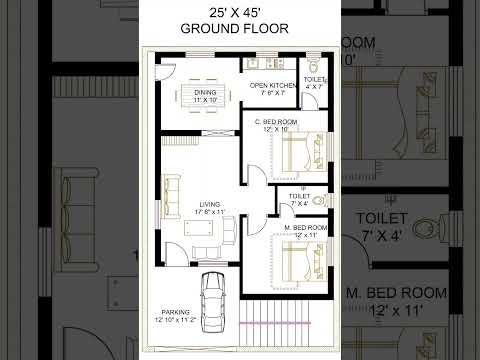

simple house plan design #housedesign #houseplans #homeplan #shorts

Optional: Finding the Line of Best Fit with Matrices in R

MATH3714, Section 2.5: Normal Equations in R

Design Matrix & Normal Equations for Simple & Multiple Linear Regression (Mathematica & ...

Linear Regression with Matrices in R

How to create shapes in microsoft word?

The Matrix Movie Explained

Understanding model.matrix and the Tilde Operator in R

The Least Squares Method R Code
