
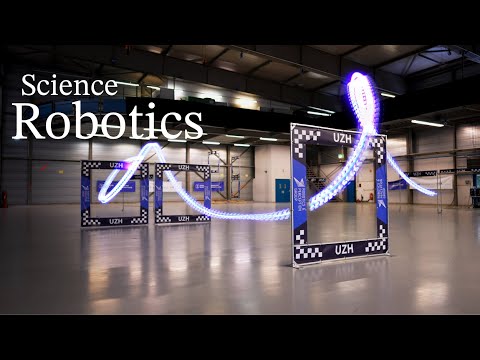

Reaching the Limit in Autonomous Racing: Optimal Control versus Reinforcement Learning (SciRob 23)


A central question in robotics is how to design a control system for an agile, mobile robot. This paper studies this question systematically, focusing on a challenging setting: autonomous drone racing. We show that a neural network controller trained with reinforcement learning (RL) outperforms optimal control (OC) methods in this setting. We then investigate which fundamental factors have contributed to the success of RL or have limited OC. Our study indicates that the fundamental advantage of RL over OC is not that it optimizes its objective better but that it optimizes a better objective. OC decomposes the problem into planning and control with an explicit intermediate representation, such as a trajectory, that serves as an interface. This decomposition limits the range of behaviors that can be expressed by the controller, leading to inferior control performance when facing unmodeled effects. In contrast, RL can directly optimize a task-level objective and can leverage domain randomization to cope with model uncertainty, allowing the discovery of more robust control responses. Our findings allow us to push an agile drone to its maximum performance, achieving a peak acceleration greater than 12 g and a peak velocity of 108 km/h. Our policy achieves superhuman control within minutes of training on a standard workstation. This work presents a milestone in agile robotics and sheds light on the role of RL and OC in robot control.

Reference:

Y. Song, A. Romero, M. Müller, V. Koltun, D. Scaramuzza, "Reaching the Limit in Autonomous Racing: Optimal Control versus Reinforcement Learning", Science Robotics, September 13, 2023.


Affiliations:

Y. Song, A. Romero, and D. Scaramuzza are with the Robotics and Perception Group, Department of Informatics, University of Zurich, and Department of Neuroinformatics, University of Zurich and ETH Zurich, Switzerland.

M. Müller and V. Koltun are with Intel Labs

