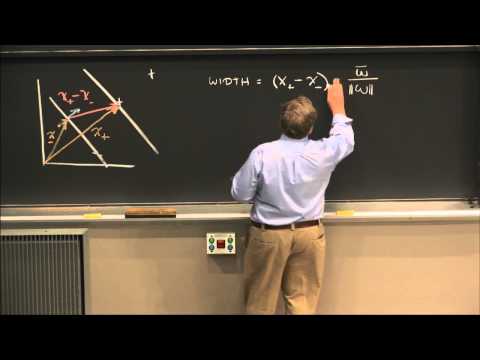

Maths Intuition Behind Support Vector Machine Part 2 | Machine Learning Data Science

In machine learning, support-vector machines (SVMs) are supervised learning models, with associated learning algorithms, that analyze data for classification and regression analysis.
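As a rough illustration of the classification case, a linear SVM labels a point by the sign of `w·x + b`, and the margin between the two supporting hyperplanes `w·x + b = ±1` has width `2/||w||`. The sketch below is only an assumption-laden toy: the weights `w` and bias `b` are hand-picked hypothetical values, not learned from data, and the helper names are invented for this example.

```python
import math

def decision_value(w, b, x):
    """Signed score w.x + b; its sign gives the predicted class."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def predict(w, b, x):
    """Return +1 or -1 depending on which side of the hyperplane x lies."""
    return 1 if decision_value(w, b, x) >= 0 else -1

def margin_width(w):
    """Distance between the hyperplanes w.x + b = +1 and w.x + b = -1."""
    return 2.0 / math.sqrt(sum(wi * wi for wi in w))

# Hypothetical, hand-picked parameters (not the result of training).
w = [3.0, 4.0]
b = -5.0

points = [[3.0, 2.0], [0.0, 0.0]]
labels = [predict(w, b, p) for p in points]
width = margin_width(w)

print(labels, width)
```

Training an SVM amounts to choosing `w` and `b` so that this margin is as wide as possible while the training points stay on the correct side.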

Please join my channel as a member to get additional benefits such as Data Science materials, live streams for members, and more.

Please subscribe to my other channel too.

If you would like to donate to support my channel, the GPay ID is below.

Connect with me here:

SVM (The Math) : Data Science Concepts (10:19)

Maths Intuition Behind Support Vector Machine Part 2 | Machine Learning Data Science (23:27)

Support Vector Machine (SVM) in 2 minutes (2:19)

Support Vector Machines - THE MATH YOU SHOULD KNOW (11:21)

Support Vector Machines Part 1 (of 3): Main Ideas!!! (20:32)

7.3.2. Math behind Support Vector Machine Classifier (31:55)

Support Vector Machine (SVM) Basic Intuition- Part 1| Machine Learning (12:50)

The Kernel Trick in Support Vector Machine (SVM) (3:18)

[Paper Reading] One Initialization to Rule them All: Fine-tuning via Explained Variance Adaptation. (1:29:03)

Understanding the mathematics behind Support Vector Machines ( Part 1) (13:45)

16. Learning: Support Vector Machines (49:34)

Support Vector Machines (SVM) Math Explained | Mathematics of SVM (26:54)

Support Vector Machines (SVM) - the basics | simply explained (28:44)

Support Vector Machines: All you need to know! (14:58)

Mathematics of SVM | Support Vector Machines | Hard margin SVM (34:54)

Support Vector Regression SVR (8:28)

Support Vector Machines : Data Science Concepts (8:07)

But what is the intuition behind Support Vector Machines....?? How does Amazon use them? (8:09)

#053- Math Intuition Behind Support Vector Machine Part 2 (13:23)

How Support Vector Machine (SVM) Works Types of SVM Linear SVM Non-Linear SVM ML DL by Mahesh Huddar (9:34)

Support Vector Regression Indepth Maths Intuition In Hindi (11:02)

Support Vector Machine | Detailed Mathematical Intuition | Hard Margin | Working | Machine Learning (32:19)

Support Vector Machines | Geometric Intuition (11:46)

Support Vector Machines (SVM) - Part 1 - Geometric and Mathematical Intuition (11:27)