USENIX ATC '22 - Building a High-performance Fine-grained Deduplication Framework for Backup Storage with High Deduplication Ratio

Xiangyu Zou and Wen Xia, Harbin Institute of Technology, Shenzhen; Philip Shilane, Dell Technologies; Haijun Zhang and Xuan Wang, Harbin Institute of Technology, Shenzhen

Fine-grained deduplication, which first removes identical chunks and then eliminates redundancies between similar but non-identical chunks (i.e., delta compression), can exploit workloads' compressibility to achieve a very high deduplication ratio but suffers from poor backup/restore performance, which has so far made it less popular than chunk-level deduplication. The cause is that allowing workloads to share more references among similar chunks further reduces spatial/temporal locality, causes more I/O overhead, and leads to worse backup/restore performance.
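The two stages described above (exact-duplicate removal, then delta compression against a similar chunk) can be illustrated with a minimal sketch. This is not MeGA's implementation: the class `FineGrainedStore`, the helpers `delta_encode` and `delta_decode`, the SHA-1 fingerprint, and the `min_similarity` threshold are all hypothetical names and choices made for illustration. The brute-force similarity search stands in for the resemblance detection a real system would use.

```python
import hashlib
from difflib import SequenceMatcher


def delta_encode(base: bytes, target: bytes) -> list:
    """Encode target as copy/insert operations against base (illustrative only)."""
    ops = []
    matcher = SequenceMatcher(None, base, target, autojunk=False)
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag == "equal":
            ops.append(("copy", i1, i2 - i1))       # reuse bytes from the base chunk
        elif tag in ("replace", "insert"):
            ops.append(("insert", target[j1:j2]))   # store only the new bytes
        # "delete" needs no operation: those base bytes are simply not copied
    return ops


def delta_decode(base: bytes, ops: list) -> bytes:
    """Rebuild the target chunk from the base chunk and the delta operations."""
    out = bytearray()
    for op in ops:
        if op[0] == "copy":
            _, offset, length = op
            out += base[offset:offset + length]
        else:
            out += op[1]
    return bytes(out)


class FineGrainedStore:
    """Hypothetical in-memory store: exact dedup first, then delta compression."""

    def __init__(self, min_similarity: float = 0.5):
        # fingerprint -> ("full", data) or ("delta", base_fingerprint, ops)
        self.chunks = {}
        self.min_similarity = min_similarity  # assumed threshold, not from the paper

    def put(self, chunk: bytes):
        fp = hashlib.sha1(chunk).hexdigest()
        if fp in self.chunks:                 # stage 1: identical chunk already stored
            return fp, "duplicate"
        base_fp = self._find_similar(chunk)   # stage 2: look for a similar base chunk
        if base_fp is not None:
            ops = delta_encode(self.get(base_fp), chunk)
            self.chunks[fp] = ("delta", base_fp, ops)
            return fp, "delta"
        self.chunks[fp] = ("full", chunk)
        return fp, "full"

    def _find_similar(self, chunk: bytes):
        # Brute-force scan over fully stored chunks; only full chunks serve as
        # bases here, which keeps this sketch free of delta chains.
        best_fp, best_ratio = None, self.min_similarity
        for fp, entry in self.chunks.items():
            if entry[0] != "full":
                continue
            ratio = SequenceMatcher(None, entry[1], chunk, autojunk=False).ratio()
            if ratio >= best_ratio:
                best_fp, best_ratio = fp, ratio
        return best_fp

    def get(self, fp: str) -> bytes:
        entry = self.chunks[fp]
        if entry[0] == "full":
            return entry[1]
        _, base_fp, ops = entry
        # Restoring a delta chunk requires reading its base chunk first.
        return delta_decode(self.get(base_fp), ops)
```

Note that `get` must read the base chunk before it can rebuild a delta chunk. That extra read is the kind of additional I/O and locality loss the abstract attributes to sharing references among similar chunks.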

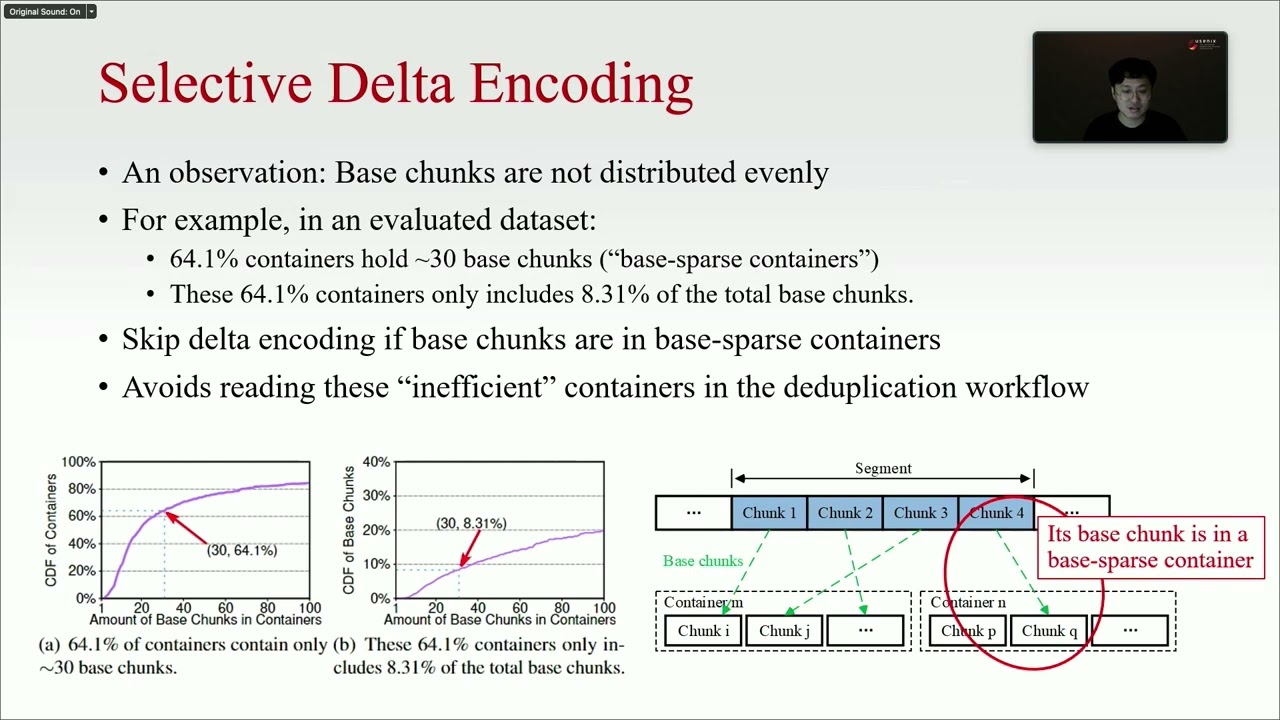

In this paper, we address issues for different forms of poor locality with several techniques, and propose MeGA, which achieves backup and restore speed close to chunk-level deduplication while preserving fine-grained deduplication's significant deduplication ratio advantage. Specifically, MeGA applies (1) a backup-workflow-oriented delta selector to address poor locality when reading base chunks, and (2) a delta-friendly data layout and "Always-Forward-Reference" traversing in the restore workflow to deal with the poor spatial/temporal locality of deduplicated data.

Evaluations on four datasets show that MeGA achieves better performance than other fine-grained deduplication approaches. In particular, compared with the traditional greedy approach, MeGA achieves 4.47–34.45 times higher backup performance and 30–105 times higher restore performance while maintaining a very high deduplication ratio.
