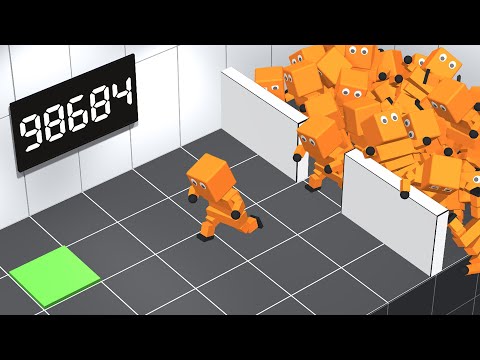

Teaching an AI to Beat You At Your Own Game!


I'm trying to teach an AI to play a simple game and beat me at it.

For that, I'm using Unity 3D and machine learning.

More specifically, I'm using an evolutionary algorithm that evolves the AI over time.

The training took about 50 hours. Unfortunately, it didn't quite reach superhuman levels. I'm not quite sure what went wrong...

In this video I want to tell you what I found out about machine learning so far and show you my results.
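
For readers unfamiliar with the idea, here is a rough sketch of how an evolutionary algorithm of the kind mentioned above can work. It is not the code from the video (which runs in Unity 3D); it is a minimal Python illustration under assumed details. The names `evolve` and `toy_fitness`, the population size, the mutation scale, and the elitism scheme are all hypothetical choices. In a game setting, the genome would typically be the AI's parameters and the fitness would be its score, but that mapping is an assumption as well.

```python
import random


def evolve(fitness, genome_length, population_size=20, generations=50,
           mutation_scale=0.1, elite_fraction=0.25, seed=0):
    """Evolve a list of floats that maximizes `fitness` (hypothetical helper)."""
    rng = random.Random(seed)
    # Start from a random population of candidate genomes.
    population = [[rng.uniform(-1.0, 1.0) for _ in range(genome_length)]
                  for _ in range(population_size)]
    n_elite = max(1, int(population_size * elite_fraction))

    for _ in range(generations):
        # Rank candidates from best to worst and keep the top few unchanged.
        ranked = sorted(population, key=fitness, reverse=True)
        elite = ranked[:n_elite]
        # Refill the population with mutated copies of the survivors.
        children = []
        while len(elite) + len(children) < population_size:
            parent = rng.choice(elite)
            children.append([g + rng.gauss(0.0, mutation_scale) for g in parent])
        population = elite + children

    return max(population, key=fitness)


def toy_fitness(genome):
    """Stand-in for a game score: higher is better, best at an assumed target."""
    target = [0.5, -0.2, 0.8]
    return -sum((g - t) ** 2 for g, t in zip(genome, target))


best = evolve(toy_fitness, genome_length=3)
print(best, toy_fitness(best))
```

Because the best candidates are carried over unchanged each generation, the best score never gets worse over time. It can still plateau well short of the optimum.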

I taught an A.I. to speedrun Minecraft. It made history.

He said this AI is Unbeatable. I took it personally.

AI Learns Insane Monopoly Strategies

So Someones Teaching An AI How To Nuzlocke...

Training an unbeatable AI in Trackmania

AI Learns To Play Golf

I Made 1.000 A.I Warriors FIGHT... (Deep Reinforcement Learning)

AI Learns to Speedrun Mario

AI learns to beat a crazy map

AI Learns to Play Tag (and breaks the game)

This Superhuman Poker AI Was Trained in 20 Hours!

AI Learns to Walk (deep reinforcement learning)

AI Learns to Run Faster than Usain Bolt | World Record

AI Learns to Escape (deep reinforcement learning)

AI beats multiple World Records in Trackmania

Training AI to Play Pokemon with Reinforcement Learning

This AI Learned Boxing…With Serious Knockout Power! 🥊

AI Olympics (multi-agent reinforcement learning)

Can an AI survive Five Nights at Freddingus'?

AI Learns to DESTROY old CPUs | Mario Kart Wii

AI Invents New Bowling Techniques

AI Learns to Outrun Police Officers

I tried to make a Valorant AI using computer vision
