GPT Explained!

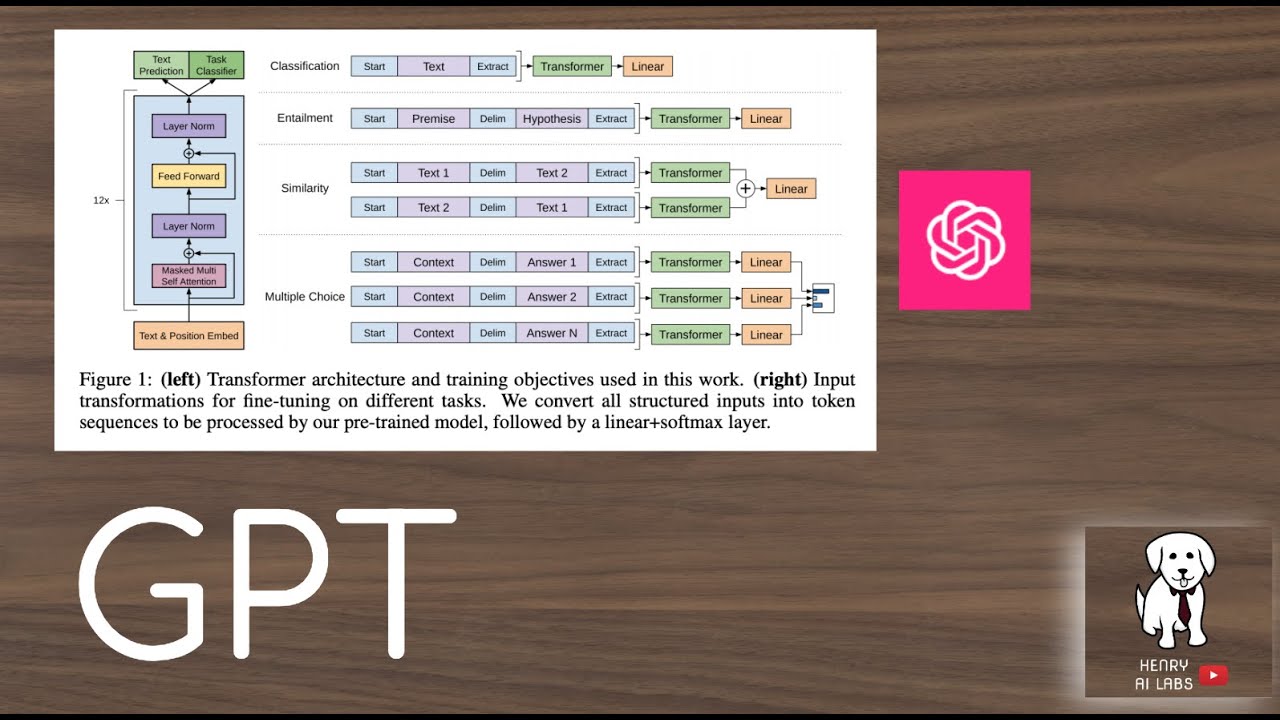

This video explains the original GPT paper, "Improving Language Understanding by Generative Pre-Training". I think the key takeaways are: the authors use a new unlabeled text dataset that requires the language-modeling pre-training to incorporate longer-range context; the way they format input representations for supervised fine-tuning; and the different NLP tasks the model is evaluated on!
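To make the input-formatting point concrete, here is a minimal Python sketch of how structured task inputs can be flattened into single token sequences for fine-tuning, following the scheme described in the paper (start token, delimiter token, extract token). The token strings `<s>`, `<$>`, `<e>` and all function names are illustrative assumptions, not the authors' code.

```python
from typing import List, Tuple

# Placeholder special tokens (assumed spellings, for illustration only).
START, DELIM, EXTRACT = "<s>", "<$>", "<e>"

Tokens = List[str]


def format_classification(text: Tokens) -> Tokens:
    # Single-text tasks: wrap the text in start and extract tokens.
    return [START, *text, EXTRACT]


def format_entailment(premise: Tokens, hypothesis: Tokens) -> Tokens:
    # Premise and hypothesis are joined by a delimiter token.
    return [START, *premise, DELIM, *hypothesis, EXTRACT]


def format_similarity(a: Tokens, b: Tokens) -> Tuple[Tokens, Tokens]:
    # Similarity has no inherent ordering, so both orderings are produced;
    # the model processes each and combines the two representations.
    return (
        [START, *a, DELIM, *b, EXTRACT],
        [START, *b, DELIM, *a, EXTRACT],
    )


def format_multiple_choice(context: Tokens, answers: List[Tokens]) -> List[Tokens]:
    # One sequence per candidate answer; each is scored independently
    # and the scores are normalized across candidates.
    return [[START, *context, DELIM, *ans, EXTRACT] for ans in answers]


if __name__ == "__main__":
    print(format_entailment(["it", "rains"], ["it", "is", "wet"]))
```

The design idea is that every task becomes an ordered token sequence the pre-trained Transformer can already consume, so only a small output layer needs to be added per task.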

Paper Links:

Thanks for watching! Please Subscribe!

GPT - Explained!

Transformers, explained: Understand the model behind GPT, BERT, and T5

Transforming Language with Generative Pre-trained Transformers (GPT)

ChatGPT Explained Completely.

What is ChatGPT? (In About A Minute)

Large Language Models explained briefly

Transformer Neural Networks, ChatGPT's foundation, Clearly Explained!!!

Deep Dive into LLMs like ChatGPT

[AI News.today] Is GPT-5 the Future of AI? OpenAI's Next Big Leap Explained

What are Transformers (Machine Learning Model)?

What is ChatGPT? OpenAI's Chat GPT Explained

Transformers, the tech behind LLMs | Deep Learning Chapter 5

What GPT-4 Can Really Do

How ChatGPT Works? | Working of ChatGPT in 6 Minutes | ChatGPT For Beginners | Simplilearn

How ChatGPT Works Technically | ChatGPT Architecture

What is GPT-3 (Generative Pre-Trained Transformer)?

Chat GPT Explained in 5 Minutes | What Is Chat GPT ? | Introduction To Chat GPT | Simplilearn

BERT vs GPT

GPT Explained!

ChatGPT Tutorial: How to Use Chat GPT For Beginners

Is ChatGPT Premium worth it?

What Are GPTs and How to Build your Own Custom GPT

Top Minds in AI Explain What’s Coming After GPT-4o | EP #130

Stop using ChatGPT❌🤖Like & Share #tech #chatgpt #malayalam
