Python Tutorial: Understanding sequential models

---

Here, you will learn about the machine learning model used to implement the encoder and the decoder of the machine translator.

A sentence is a time-series input, which means that every word in the sentence is affected by the words that come before it.

The encoder and the decoder use a machine learning model that can learn from time-series, or sequential, inputs such as sentences. Such a model is called a sequential model.

Sequential models move through the inputs one at a time, producing an output at each time step. During time step 1 the first word is processed, during time step 2 the second word is processed, and so on. The same model, with the same parameters, processes every input.

In your translator, you will use a type of sequential model called a gated recurrent unit, or GRU. The inputs to the encoder, for example, are a sequence of English words encoded as one-hot vectors.
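As a rough illustration of one-hot encoding, the sketch below maps each word of a short sentence to a vector with a single 1 at that word's index. The four-word vocabulary, the helper name one_hot_sentence, and the sentence "We like dogs" are hypothetical stand-ins; a real translator would build its vocabulary from the training data.

```python
import numpy as np

# Hypothetical toy vocabulary; a real translator builds this from its corpus.
vocab = ["we", "like", "dogs", "cats"]
word_to_id = {word: i for i, word in enumerate(vocab)}

def one_hot_sentence(sentence):
    """Hypothetical helper: encode each word as a one-hot row of length len(vocab)."""
    words = sentence.lower().split()
    encoded = np.zeros((len(words), len(vocab)), dtype=np.float32)
    for t, word in enumerate(words):
        encoded[t, word_to_id[word]] = 1.0  # a single 1 at the word's index
    return encoded

x = one_hot_sentence("We like dogs")
print(x.shape)  # (3, 4): one row per word, one column per vocabulary entry
```

Each row is the input the encoder would see at one time step.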

Let's consider an example. At time step 1, the GRU takes in the input word "We" and an initial hidden state that is all zeros. It then produces a new hidden state, (0.8, 0.3).

At the next time step, the GRU sees the next word, "like", together with the previous hidden state (0.8, 0.3). It combines these two inputs to produce a new hidden state, and continues this way until the end of the sentence.

The hidden state from the previous step acts as a memory of what the model has seen so far. Each hidden state is computed from the GRU's internal parameters, which are learned during training.

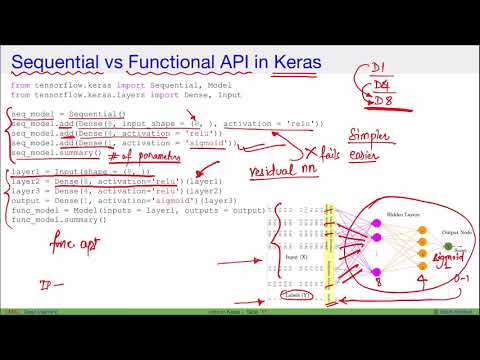
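The step-by-step recurrence described above can be sketched in NumPy. This is a hand-written GRU cell following the formulation of the original GRU paper (Cho et al., 2014), with randomly initialised weights standing in for trained parameters, a 4-dimensional one-hot input, and a 2-unit hidden state to match the example. Because the weights are random, the states will not be (0.8, 0.3), and libraries, Keras included, may differ in details such as where the reset gate is applied.

```python
import numpy as np

rng = np.random.default_rng(0)
input_dim, hidden_size = 4, 2  # 4-dimensional one-hot inputs, 2-unit hidden state

# Random parameters stand in for weights that training would learn (assumption).
W = {g: rng.normal(scale=0.5, size=(input_dim, hidden_size)) for g in "zrh"}
U = {g: rng.normal(scale=0.5, size=(hidden_size, hidden_size)) for g in "zrh"}
b = {g: np.zeros(hidden_size) for g in "zrh"}

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def gru_step(x_t, h_prev):
    """One GRU time step: combine the current input with the previous hidden state."""
    z = sigmoid(x_t @ W["z"] + h_prev @ U["z"] + b["z"])             # update gate
    r = sigmoid(x_t @ W["r"] + h_prev @ U["r"] + b["r"])             # reset gate
    h_cand = np.tanh(x_t @ W["h"] + (r * h_prev) @ U["h"] + b["h"])  # candidate state
    return z * h_prev + (1.0 - z) * h_cand  # z near 1 keeps the old state

# One-hot inputs for a hypothetical three-word sentence such as "We like dogs".
sentence = np.eye(input_dim)[[0, 1, 2]]

h = np.zeros(hidden_size)  # initial hidden state: all zeros
states = []
for x_t in sentence:       # the same parameters are reused at every time step
    h = gru_step(x_t, h)
    states.append(h)

print(np.round(np.stack(states), 3))  # one hidden state per time step
```

The final value of h summarises the whole sentence, which is what the encoder passes on.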

Let's quickly revisit the Keras functional API. Keras has two important kinds of objects: layers and models.

You can define an input layer using the Input object.

You can also define a hidden layer, such as a GRU layer, using the Keras GRU object.

Then you can get the output of that layer by calling the layer on the input tensor, inp.

Finally, you can define a model object by specifying the inputs and outputs of the model.

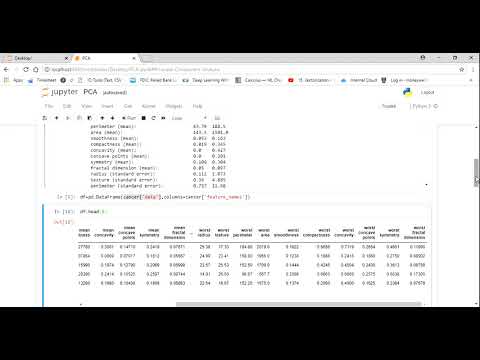
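A minimal sketch of these four steps with the Keras functional API, assuming TensorFlow 2 is installed and Keras is imported from it. The input shape (3 time steps of 4-dimensional one-hot vectors) and the 10 GRU units are illustrative choices.

```python
from tensorflow import keras

# 1. Input layer: sequences of 3 time steps, each a 4-dimensional vector.
inp = keras.layers.Input(shape=(3, 4))

# 2. Hidden layer: a GRU with 10 hidden units.
gru_layer = keras.layers.GRU(10)

# 3. Calling the layer on the input tensor gives the layer's output tensor.
gru_out = gru_layer(inp)

# 4. A Model ties the inputs to the outputs.
model = keras.models.Model(inputs=inp, outputs=gru_out)
print(model.output_shape)  # (None, 10): batch size unspecified, 10 hidden units
```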

Before implementing GRUs, you need to understand that sequential data has three dimensions.

The sequences are usually processed in groups, or batches. The batch dimension specifies the number of sequences in a batch.

The time dimension describes the length of the sequences, or sentences.

The input dimension describes the length of each one-hot vector, which equals the vocabulary size.

The input to a GRU model must have this three-dimensional shape.
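To make the three dimensions concrete, here is a NumPy sketch that turns two hypothetical three-word sentences, given as word ids into an assumed four-word vocabulary, into a single array of shape (batch size, sequence length, input dimension).

```python
import numpy as np

vocab_size = 4  # input dimension: length of each one-hot vector (assumed)

# Two hypothetical sentences, each three words long, written as word ids.
word_ids = np.array([[0, 1, 2],
                     [0, 1, 3]])

# Indexing an identity matrix turns every id into its one-hot row.
batch = np.eye(vocab_size, dtype=np.float32)[word_ids]
print(batch.shape)  # (2, 3, 4): batch size, sequence length, input dimension
```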

When using Keras to implement a GRU model, you first define an input layer that can take in the data. In this example, the input has a batch size of 2, a sequence length of 3, and an input dimensionality of 4; the sequence length is the number of words in each sentence. You then define a GRU layer with 10 hidden units. The number of hidden units, or hidden size, determines the size of the hidden state that the GRU produces.

Finally, these layers are wrapped in a Keras model whose output is the output of the GRU.

You can then generate predictions by calling model.predict on data that has exactly the shape defined in the input layer.
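A sketch of this model, assuming TensorFlow 2's Keras. Here batch_shape fixes the batch size at 2, so the data passed to predict must have shape (2, 3, 4); random values stand in for real one-hot sentences.

```python
import numpy as np
from tensorflow import keras

# Fixed batch shape: (batch size, sequence length, input dimension).
inp = keras.layers.Input(batch_shape=(2, 3, 4))
# GRU with 10 hidden units; by default it returns only the last output.
gru_out = keras.layers.GRU(10)(inp)
model = keras.models.Model(inputs=inp, outputs=gru_out)

# Random data standing in for a batch of two one-hot encoded sentences.
x = np.random.rand(2, 3, 4).astype("float32")
y = model.predict(x, verbose=0)
print(y.shape)  # (2, 10): one 10-dimensional output per sequence
```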

You can also define the input layer with an unspecified batch size (None). To do that, use the shape argument instead of batch_shape, and set only the sequence length and the input dimensionality.

In Keras, this means the input layer accepts batches of any size.

This lets you define the Keras model once and experiment with different batch sizes without changing it.
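The same model built with shape instead of batch_shape, again as a sketch assuming TensorFlow 2's Keras: the batch dimension is recorded as None, and a single model then accepts batches of different sizes.

```python
import numpy as np
from tensorflow import keras

# shape= leaves out the batch dimension, so Keras records it as None.
inp = keras.layers.Input(shape=(3, 4))
gru_out = keras.layers.GRU(10)(inp)
model = keras.models.Model(inputs=inp, outputs=gru_out)

print(model.input_shape)  # (None, 3, 4)

# The same model handles batches of different sizes.
for batch_size in (1, 2, 5):
    x = np.random.rand(batch_size, 3, 4).astype("float32")
    print(batch_size, model.predict(x, verbose=0).shape)  # (batch_size, 10)
```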

A GRU layer has two more important arguments: return_state and return_sequences. If you set return_state to True, the layer returns two outputs instead of one: the last output and the last hidden state, in that order.

For a GRU layer, these two are identical, because a GRU's output at each time step is its hidden state.

If you set return_sequences to True, the layer returns the outputs at every time step instead of only the last output.

The result has shape (batch size, sequence length, hidden size).
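A sketch of return_state=True, assuming TensorFlow 2's Keras: unpacking the layer call gives the last output and the last hidden state, and comparing the predicted arrays checks that they match for a GRU.

```python
import numpy as np
from tensorflow import keras

inp = keras.layers.Input(shape=(3, 4))
# With return_state=True the layer returns the last output and the last hidden state.
gru_out, gru_state = keras.layers.GRU(10, return_state=True)(inp)
model = keras.models.Model(inputs=inp, outputs=[gru_out, gru_state])

x = np.random.rand(2, 3, 4).astype("float32")
out, state = model.predict(x, verbose=0)
print(out.shape, state.shape)   # both (2, 10)
print(np.allclose(out, state))  # for a GRU the output is the hidden state
```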
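A sketch of return_sequences=True under the same TensorFlow 2 assumption. It also sets return_state=True so that the last time step of the full output sequence can be compared with the final hidden state; combining both flags goes slightly beyond the text above.

```python
import numpy as np
from tensorflow import keras

inp = keras.layers.Input(shape=(3, 4))
# return_sequences=True: one output per time step instead of only the last one.
seq_out, last_state = keras.layers.GRU(10, return_sequences=True, return_state=True)(inp)
model = keras.models.Model(inputs=inp, outputs=[seq_out, last_state])

x = np.random.rand(2, 3, 4).astype("float32")
seq, state = model.predict(x, verbose=0)
print(seq.shape)  # (2, 3, 10): batch size, sequence length, hidden size
# The output at the final time step equals the final hidden state.
print(np.allclose(seq[:, -1, :], state))
```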

Great! Let's have some fun with GRU layers in Keras.

#PythonTutorial #Python #DataCamp #sequential #models
