filmov

tv

Insert | Update | Delete on Data Lake (S3) with Apache Hudi and Glue PySpark


Note: You can also load your views on a schedule. Ideally, all updates are applied to the Hudi tables, and the Athena view is then refreshed on a schedule.
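As a rough illustration of the insert, update, and delete flow the title refers to, here is a minimal sketch of how the Hudi write options might be assembled in a Glue PySpark job. The table name, key fields, and S3 path are hypothetical placeholders, not values taken from the video; only the `hoodie.*` option keys and the `format("hudi")` writer are standard Hudi datasource settings.

```python
# Minimal sketch: table name, field names, and S3 path are assumptions.
VALID_OPERATIONS = {"insert", "upsert", "delete"}


def hudi_options(table_name, record_key, precombine_field, operation):
    """Build the Hudi datasource options for one write operation."""
    if operation not in VALID_OPERATIONS:
        raise ValueError(f"unsupported operation: {operation}")
    return {
        "hoodie.table.name": table_name,
        # Column that uniquely identifies a record.
        "hoodie.datasource.write.recordkey.field": record_key,
        # Column used to pick the latest version when keys collide.
        "hoodie.datasource.write.precombine.field": precombine_field,
        "hoodie.datasource.write.operation": operation,
    }


def write_to_hudi(df, base_path, options):
    """Write a Spark DataFrame to a Hudi table at base_path.

    Append mode is used for insert, upsert, and delete alike; for a
    delete, df holds the records to remove.
    """
    df.write.format("hudi").options(**options).mode("append").save(base_path)


# Hypothetical usage inside a Glue job, where `updates_df` is a DataFrame:
# opts = hudi_options("customers", "customer_id", "updated_at", "upsert")
# write_to_hudi(updates_df, "s3://my-bucket/hudi/customers/", opts)
```

The scheduled Athena view refresh mentioned in the note is a separate step and is not shown here.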

All material and code can be found


SQL Query Basics: Insert, Select, Update, and Delete

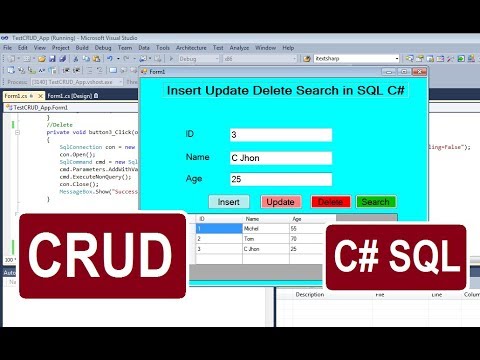

Insert Update Delete View and search data from database in C#.net

18 | INSERT, UPDATE & DELETE to Change Table Data | 2023 | Learn PHP Full Course for Beginners

4. What is Data Manipulation Language in SQL? Using SELECT, INSERT, UPDATE, DELETE commands in MySQL

7 - SQL Server Databases : Insert Update Delete

VB.NET insert update delete view and search data with SQL database (WITH CODE)

gridview insert update delete in asp.net

SQL Insert, Update, & Delete

Beginner PHP CRUD Tutorial Database Connection ,Insert, Update, Delete using MYSQL

Complete CRUD Operation in C# With SQL | Insert Delete Update Search in SQL using ConnectionString

SQL Merge | Insert Update Delete in a Single Statement | Incremental Load

COMO USAR INSERT , UPDATE ,DELETE E SELECT NO SQL PASSO A PASSO

SQL Triggers Explained: Insert, Update, Delete, Before, and After Triggers with Examples

C# Asp.Net Web Form CRUD : Insert, Update, Delete and View With Sql Server Database

Read, insert, update or delete with @Supabase — Course part 9

Introduction to Normalization | Insert, Update, Delete Anomaly With Examples | TechnonTechTV

CRUD Operation in C# With SQL Database | Insert, Update, Delete, Search Using ConnectionString

Entity Framework - Insert Update and Delete in C#

Comandos fundamentais do SQL: SELECT, UPDATE, INSERT E DELETE - Root #13

PHP Front To Back [Part 22] - MySQLi Insert, Update & Delete

C# Tutorial - Insert Update Delete into multiple tables in SQL Server Part 1 | FoxLearn

MySQL Triggers | INSERT , UPDATE , DELETE

How to Create, Insert, Update, and Delete in MsAccess using SQL

C# Tutorial - Insert Update Delete data in Database from DataGridView | FoxLearn
