Regularization

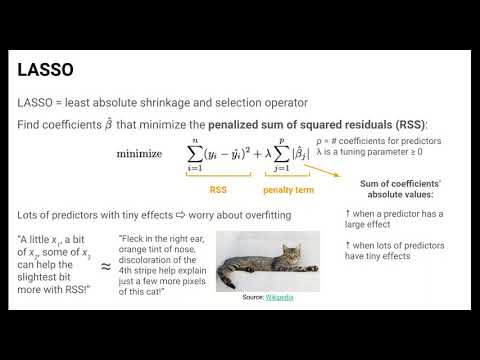

Regularization is one way of reducing the total error by finding a good trade-off between bias and variance. To do so, we add another term to the loss function that penalizes the complexity of the model, typically measured by the size of its weights. The importance of this additional term is controlled by a scalar, which is an example of a hyperparameter. In this video, we discuss the two most prominent methods: ridge (L2) and lasso (L1) regression.
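To make "loss plus a weighted complexity term" concrete, here is a minimal sketch. It makes several assumptions: a linear model without intercept, squared-error loss, `lam * sum(w_j**2)` as the ridge penalty, and `lam * sum(|w_j|)` as the lasso penalty. The function names (`penalized_loss`, `fit`, `soft_threshold`) and the toy data are hypothetical. Cyclic coordinate descent is only one of several ways to fit these models.

```python
# Minimal sketch (assumptions: linear model, no intercept, squared-error loss).
# Ridge adds lam * sum(w_j**2); lasso adds lam * sum(|w_j|).
# Both are fitted here with cyclic coordinate descent in pure Python.


def predict(X, w):
    """Linear predictions x_i . w for every row of X."""
    return [sum(x * wj for x, wj in zip(row, w)) for row in X]


def penalized_loss(X, y, w, lam, penalty):
    """Sum of squared errors plus lam times the complexity penalty."""
    sse = sum((yi - pi) ** 2 for yi, pi in zip(y, predict(X, w)))
    if penalty == "ridge":
        reg = sum(wj ** 2 for wj in w)   # L2 penalty
    elif penalty == "lasso":
        reg = sum(abs(wj) for wj in w)   # L1 penalty
    else:
        raise ValueError(f"unknown penalty: {penalty}")
    return sse + lam * reg


def soft_threshold(a, t):
    """Shrink a toward zero by t, clipping to exactly 0 inside [-t, t]."""
    if a > t:
        return a - t
    if a < -t:
        return a + t
    return 0.0


def fit(X, y, lam, penalty, n_iter=200):
    """Minimize penalized_loss one coordinate at a time."""
    if penalty not in ("ridge", "lasso"):
        raise ValueError(f"unknown penalty: {penalty}")
    n, p = len(X), len(X[0])
    w = [0.0] * p
    for _ in range(n_iter):
        for j in range(p):
            # Partial residual: targets minus every feature's contribution except j.
            r = [y[i] - sum(X[i][k] * w[k] for k in range(p) if k != j) for i in range(n)]
            rho = sum(X[i][j] * r[i] for i in range(n))
            z = sum(X[i][j] ** 2 for i in range(n))
            if penalty == "ridge":
                # Exact minimizer of z*w^2 - 2*rho*w + lam*w^2 (up to a constant).
                w[j] = rho / (z + lam)
            else:
                # Exact minimizer of z*w^2 - 2*rho*w + lam*|w| (up to a constant).
                w[j] = soft_threshold(rho, lam / 2) / z
    return w


# Hypothetical toy data with orthogonal features: y = 3*x0 + 0.25*x1 (no noise).
X = [[1, 1], [-1, 1], [1, -1], [-1, -1]]
y = [3.25, -2.75, 2.75, -3.25]

for lam in (0.0, 4.0):
    print(lam, fit(X, y, lam, "ridge"), fit(X, y, lam, "lasso"))
# 0.0 [3.0, 0.25] [3.0, 0.25]
# 4.0 [1.5, 0.125] [2.5, 0.0]
```

With `lam = 0` both methods reduce to ordinary least squares. Because the two toy features are orthogonal, ridge scales both weights by the same factor 4/(4 + λ). Lasso instead subtracts λ/8 from each weight's magnitude, which sets the weaker weight to exactly zero. That sparsity is the practical difference between the two penalties. The value of λ itself is a hyperparameter, commonly chosen by cross-validation.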
