filmov

tv

Precision, Recall, & F1 Score Intuitively Explained

Показать описание

Classification performance metrics are an important part of any machine learning system. Here we discuss the most basic and common measures of model performance; accuracy, precision, recall, and F1 score.

Chapters:

0:00 Introduction

1:02 Basic Definitions

2:50 Accuracy

4:30 Precision

5:36 Recall

6:34 F1 Score

8:07 Conclusion

Chapters:

0:00 Introduction

1:02 Basic Definitions

2:50 Accuracy

4:30 Precision

5:36 Recall

6:34 F1 Score

8:07 Conclusion

Never Forget Again! // Precision vs Recall with a Clear Example of Precision and Recall

Precision, Recall, F1 score, True Positive|Deep Learning Tutorial 19 (Tensorflow2.0, Keras & Pyt...

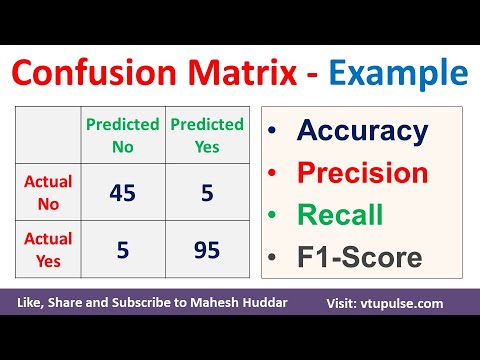

Confusion Matrix Solved Example Accuracy Precision Recall F1 Score Prevalence by Mahesh Huddar

Introduction to Precision, Recall and F1 | Classification Models

Precision, Recall, & F1 Score Intuitively Explained

Precision, Recall and F1-Score

Como entender as métricas Precision & Recall

8.8. Precision, Recall, F1 score | Model Evaluation

Machine Learning Fundamentals: The Confusion Matrix

How to evaluate ML models | Evaluation metrics for machine learning

TP, FP, TN, FN, Accuracy, Precision, Recall, F1-Score, Sensitivity, Specificity, ROC, AUC

MFML 044 - Precision vs recall

Precision, Recall, and F1 Score Explained for Binary Classification

Precision e recall

Precision und Recall in 100 Sekunden

Precision, Recall and F1 Score | Classification Metrics Part 2

Scikit-Learn Classification Report - Precision, Recall, F1, Accuracy of ML Models

What are Precision and Recall in Machine Learning?

Precision and Recall | Unforgettable explanation with easy example on White Board | Confusion Matrix

Confusion Matrix for Multiclass Classification Precision Recall Weighted F1 Score by Mahesh Huddar

12.2 Precision, Recall, and F1 Score (L12 Model Eval 5: Performance Metrics)

Precision, Recall, F1-score, confusion matrix, multilabel classification micro e macro average (ita)

Mastering the Confusion Matrix | Accuracy, Precision, Recall & F1 Score | Machine Learning

Tutorial 34- Performance Metrics For Classification Problem In Machine Learning- Part1

Комментарии

0:05:24

0:05:24

0:11:46

0:11:46

0:05:50

0:05:50

0:03:06

0:03:06

0:08:56

0:08:56

0:09:03

0:09:03

0:11:36

0:11:36

0:32:12

0:32:12

0:07:13

0:07:13

0:10:05

0:10:05

0:14:01

0:14:01

0:05:47

0:05:47

0:09:04

0:09:04

0:07:29

0:07:29

0:01:41

0:01:41

0:42:42

0:42:42

0:00:32

0:00:32

0:08:08

0:08:08

0:14:30

0:14:30

0:08:22

0:08:22

0:11:48

0:11:48

0:12:43

0:12:43

0:06:08

0:06:08

0:24:12

0:24:12