TP, FP, TN, FN, Accuracy, Precision, Recall, F1-Score, Sensitivity, Specificity, ROC, AUC

In this video, we cover the key definitions used to evaluate classification models: True Positive, False Positive, True Negative, False Negative, Accuracy, Precision, Recall, F1-Score, Sensitivity, Specificity, ROC, and AUC.

These metrics are widely used in machine learning, data science, and statistical analysis.

#machinelearning #datascience #statistics #explanation #explained
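As a rough companion to these definitions, here is a minimal plain-Python sketch (not taken from the video) that counts TP, FP, TN and FN for binary labels and derives the other metrics from those four numbers. It assumes labels are 0/1 with 1 as the positive class. The function names `confusion_counts`, `classification_metrics` and `safe_div` are hypothetical, and returning 0.0 when a denominator is zero is an assumed convention. Note that Sensitivity is the same quantity as Recall (the true positive rate), and Specificity is the true negative rate. For real projects, scikit-learn's `sklearn.metrics` module provides library versions such as `confusion_matrix`, `precision_score`, `recall_score` and `f1_score`.

```python
from typing import Dict, Sequence


def confusion_counts(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, int]:
    """Count TP, FP, TN, FN for binary labels, treating 1 as the positive class."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    counts = {"TP": 0, "FP": 0, "TN": 0, "FN": 0}
    for actual, predicted in zip(y_true, y_pred):
        if predicted == 1:
            # Predicted positive: correct -> TP, wrong -> FP
            counts["TP" if actual == 1 else "FP"] += 1
        else:
            # Predicted negative: correct -> TN, wrong -> FN
            counts["TN" if actual == 0 else "FN"] += 1
    return counts


def safe_div(num: float, den: float) -> float:
    """Return num / den, or 0.0 when the denominator is zero (assumed convention)."""
    return num / den if den else 0.0


def classification_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Derive accuracy, precision, recall, specificity and F1 from the four counts."""
    c = confusion_counts(y_true, y_pred)
    tp, fp, tn, fn = c["TP"], c["FP"], c["TN"], c["FN"]
    precision = safe_div(tp, tp + fp)  # share of predicted positives that are real positives
    recall = safe_div(tp, tp + fn)     # also called sensitivity / true positive rate
    return {
        "accuracy": safe_div(tp + tn, tp + fp + tn + fn),
        "precision": precision,
        "recall": recall,
        "specificity": safe_div(tn, tn + fp),  # true negative rate
        "f1": safe_div(2 * precision * recall, precision + recall),  # harmonic mean
    }


if __name__ == "__main__":
    y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
    y_pred = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]
    print(confusion_counts(y_true, y_pred))  # {'TP': 3, 'FP': 1, 'TN': 5, 'FN': 1}
    for name, value in classification_metrics(y_true, y_pred).items():
        print(f"{name}: {value:.3f}")
```

With this toy data, accuracy is 8/10 = 0.800, precision and recall are both 3/4 = 0.750, specificity is 5/6 ≈ 0.833, and F1 is 0.750. The example shows how accuracy can look better than precision or recall when negatives dominate.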

VIDEO CHAPTERS

0:00 Introduction

1:15 True Positive, False Positive, True Negative, False Negative

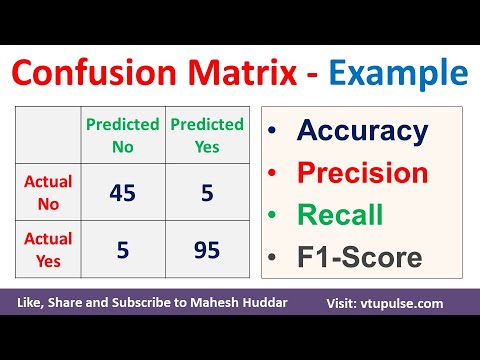

6:08 Accuracy, Precision, Recall, F1-Score

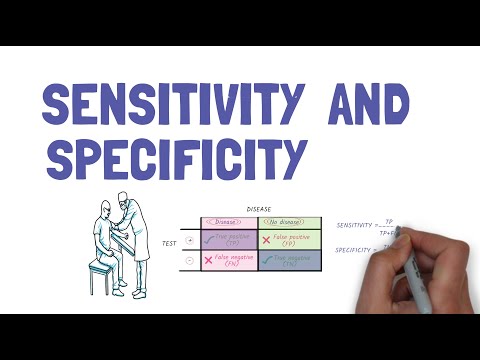

8:59 Sensitivity, Specificity

10:30 ROC, AUC
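The ROC curve plots the true positive rate (sensitivity) against the false positive rate (1 - specificity) as the decision threshold is swept over a model's scores, and AUC is the area under that curve. The sketch below is a minimal plain-Python illustration, not the video's own code. It assumes 0/1 labels with 1 as the positive class and that a higher score means "more likely positive". The names `roc_points` and `auc` are hypothetical; scikit-learn offers library versions as `sklearn.metrics.roc_curve` and `sklearn.metrics.roc_auc_score`.

```python
from typing import List, Sequence, Tuple


def roc_points(y_true: Sequence[int], scores: Sequence[float]) -> List[Tuple[float, float]]:
    """Return (FPR, TPR) points from sweeping the threshold from high to low."""
    pos = sum(1 for t in y_true if t == 1)
    neg = len(y_true) - pos
    if pos == 0 or neg == 0:
        raise ValueError("need at least one positive and one negative example")
    # Sort examples by descending score; each distinct score is one threshold step
    pairs = sorted(zip(scores, y_true), key=lambda p: p[0], reverse=True)
    points = [(0.0, 0.0)]  # threshold above every score: nothing predicted positive
    tp = fp = 0
    i = 0
    while i < len(pairs):
        threshold = pairs[i][0]
        # Examples tied at the same score cross the threshold together
        while i < len(pairs) and pairs[i][0] == threshold:
            if pairs[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        points.append((fp / neg, tp / pos))
    return points


def auc(points: Sequence[Tuple[float, float]]) -> float:
    """Area under the curve via the trapezoidal rule."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2
    return area


if __name__ == "__main__":
    y_true = [1, 1, 0, 1, 0, 0]
    scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.2]
    pts = roc_points(y_true, scores)
    print(pts)
    print(f"AUC = {auc(pts):.4f}")  # AUC = 0.8889
```

Here 8 of the 9 (positive, negative) pairs have the positive ranked higher, which matches the AUC of 8/9. This reflects the usual interpretation of AUC as the probability that a randomly chosen positive receives a higher score than a randomly chosen negative, with ties counted as one half.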

RELATED VIDEOS

Confusion Matrix Solved Example Accuracy Precision Recall F1 Score Prevalence by Mahesh Huddar

Confusion Matrix in Machine Learning ⚡️ Explained in 60 Seconds

Precision, Recall, F1 score, True Positive|Deep Learning Tutorial 19 (Tensorflow2.0, Keras & Pyt...

Binary Classification: Understanding AUC, ROC, Precision/Recall & Sensitivity/Specificity

What is a Confusion Matrix | TP, TN, FP, FN | Type 1 & 2 Error | Easily explained | Machine Lear...

Confusion Matrix for Multiclass Classification Precision Recall Weighted F1 Score by Mahesh Huddar

Sensitivity and Specificity simplified

Machine Learning Fundamentals: Sensitivity and Specificity

Confusion Matrix - Model Building and Validation

Confusion Matrix ll Accuracy,Error Rate,Precision,Recall Explained with Solved Example in Hindi

TPR,FPR,FNR,TNR, Confusion Matrix

Machine Learning Fundamentals: The Confusion Matrix

Confusion matrix, True Positive (TP), True Negative (TN), False Positive (FP),False Negative(FN)

Understanding TP, FP, TN, and FN in Clustering Evaluation

Tutorial 34- Performance Metrics For Classification Problem In Machine Learning- Part1

True Positive vs. True Negative vs. False Positive vs. False Negative

Performance Metrics, Accuracy,Precision,Recall And F-Beta Score Explained In Hindi|Machine Learning

Write your own function for Multiclass Classification Confusion matrix, F1 score, precision, recall

Accuracy and Confusion Matrix | Type 1 and Type 2 Errors | Classification Metrics Part 1

Machine Learning - Confusion Matrix Analizi - Precision, Recall, F1 Score

confusion matrix exam question solve | confusion matrix A to Z | precision , recall,accura formula

How to find Calculate F1 Score for Multi-Class Classification Machine Learning by Dr. Mahesh Huddar

Medical Statistics: Calculating Sensitivity and Specificity using a 2x2 table
