
Scalar Root-Finding - Pushforward/Jvp rule


In the spirit of differentiable programming, one could simply apply the forward-mode AD engine to the mathematical operations of the root-finding algorithm. However, this often introduces unnecessary overhead, and for some algorithms it might not be possible at all: the algorithm may contain non-differentiable functions, or it may itself be a call to a foreign-language library (like a FORTRAN solver) invoked from within the AD framework. This video presents a classical remedy based on the implicit function theorem.
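Assuming the solver has converged to a simple root, the implicit-function-theorem remedy can be sketched as follows. The notation is assumed here rather than taken from the video: a residual g(x, θ) with scalar unknown x ∈ ℝ and parameters θ ∈ ℝ^P, a root x*(θ) satisfying g(x*(θ), θ) = 0, and a parameter tangent θ̇. Differentiating that identity with respect to θ (total derivative) and solving for the derivative of the root gives:

```latex
\frac{\mathrm{d}}{\mathrm{d}\theta}\, g\bigl(x^*(\theta), \theta\bigr) = 0
\;\Longrightarrow\;
\frac{\partial g}{\partial x}\,\frac{\mathrm{d}x^*}{\mathrm{d}\theta} + \frac{\partial g}{\partial \theta} = 0
\;\Longrightarrow\;
\dot{x}^* = \frac{\mathrm{d}x^*}{\mathrm{d}\theta}\,\dot{\theta}
= -\left(\frac{\partial g}{\partial x}\right)^{-1} \frac{\partial g}{\partial \theta}\,\dot{\theta}
```

All partial derivatives are evaluated at (x*, θ), and ∂g/∂x must be nonzero there. Because x is scalar, the inverse is a plain division, and (∂g/∂θ) θ̇ is a single JVP of the residual, so the solver's iterations never have to be differentiated and the full row ∂g/∂θ never has to be formed.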


Timestamps:

00:00 What is scalar root finding?

00:54 Example Algorithms

01:26 Dimensionalities involved

01:54 Assumption that the solver converges

02:22 Task of propagating tangent information

02:36 NOT by unrolling the iterations

03:23 Definition of the pushforward / Jvp

04:11 Implicit Function Theorem via total derivative

06:43 Assembling the tangent propagation

07:41 Final Pushforward operation

08:17 Obtain additional derivatives by forward-mode AD

08:39 Summary

09:05 Outro
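A minimal Python sketch of the steps listed above, assuming a residual g(x, θ) with scalar x and parameter vector θ, and the rule ẋ* = −((∂g/∂θ) θ̇) / (∂g/∂x) evaluated at the converged root. Everything here is hypothetical and chosen for illustration, not taken from the video: the names `Dual`, `bisect`, `solve` and `root_pushforward`, the example residual x³ + θ₀x − θ₁, and its bracket [0, 2]. Bisection is used as the black-box solver on purpose: its branch-based updates carry no useful tangent information, so unrolling it would not help. The two partial derivatives of g are instead obtained by forward-mode AD with a tiny dual-number class, which is one possible reading of the "Obtain additional derivatives by forward-mode AD" step.

```python
# Minimal sketch: pushforward (JVP) of a scalar root-finder via the implicit
# function theorem. All names and the example residual are hypothetical.


class Dual:
    """Forward-mode dual number val + tan*eps with eps**2 = 0."""

    def __init__(self, val, tan=0.0):
        self.val = float(val)
        self.tan = float(tan)

    @staticmethod
    def _lift(other):
        return other if isinstance(other, Dual) else Dual(other)

    def __add__(self, other):
        o = Dual._lift(other)
        return Dual(self.val + o.val, self.tan + o.tan)

    __radd__ = __add__

    def __sub__(self, other):
        o = Dual._lift(other)
        return Dual(self.val - o.val, self.tan - o.tan)

    def __rsub__(self, other):
        return Dual._lift(other) - self

    def __mul__(self, other):
        o = Dual._lift(other)
        return Dual(self.val * o.val, self.tan * o.val + self.val * o.tan)

    __rmul__ = __mul__

    def __pow__(self, n):
        # Integer exponents only, which is all the example residual needs.
        return Dual(self.val ** n, n * self.val ** (n - 1) * self.tan)


def g(x, theta):
    """Example residual g(x, θ) = x³ + θ₀·x − θ₁ (hypothetical)."""
    return x ** 3 + theta[0] * x - theta[1]


def bisect(f, lo, hi, tol=1e-13, max_iter=200):
    """Plain-float bisection; assumes f(lo) and f(hi) have opposite signs."""
    f_lo = f(lo)
    for _ in range(max_iter):
        mid = 0.5 * (lo + hi)
        f_mid = f(mid)
        if (f_mid < 0) == (f_lo < 0):
            lo, f_lo = mid, f_mid  # root lies in [mid, hi]
        else:
            hi = mid  # root lies in [lo, mid]
        if hi - lo < tol:
            break
    return 0.5 * (lo + hi)


def solve(theta):
    """Black-box solver; the bracket [0, 2] is specific to this example."""
    return bisect(lambda x: g(x, theta), 0.0, 2.0)


def root_pushforward(g, solve, theta, theta_dot):
    """Return (x*, ẋ*) with ẋ* = −(∂g/∂θ · θ̇) / (∂g/∂x) at (x*, θ)."""
    x_star = solve(theta)  # primal only; the iterations are never differentiated
    # ∂g/∂x: seed x with tangent 1, keep θ constant.
    g_x = g(Dual(x_star, 1.0), theta).tan
    if g_x == 0.0:
        raise ZeroDivisionError("∂g/∂x vanishes at the root (not a simple root)")
    # (∂g/∂θ)·θ̇: seed θ with tangent θ̇, keep x constant (a single JVP).
    theta_d = [Dual(t, td) for t, td in zip(theta, theta_dot)]
    g_theta_jvp = g(x_star, theta_d).tan
    return x_star, -g_theta_jvp / g_x


if __name__ == "__main__":
    theta, theta_dot = [2.0, 5.0], [1.0, 0.0]
    x_star, x_dot = root_pushforward(g, solve, theta, theta_dot)
    h = 1e-5  # central finite difference of the black-box solver, for comparison
    fd = (solve([2.0 + h, 5.0]) - solve([2.0 - h, 5.0])) / (2 * h)
    print(x_star, x_dot, fd)
```

The finite-difference value printed alongside should agree with the IFT tangent to several digits. The design choice worth noting is that only one residual JVP in the direction θ̇ is evaluated, so the cost per tangent does not grow with the number of parameters P.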

Related videos:

Scalar Root-Finding - Pullback/vJp rule

Nonlinear System Solve - Pushforward/Jvp rule

Adjoint Sensitivities in Julia with Zygote & ChainRules

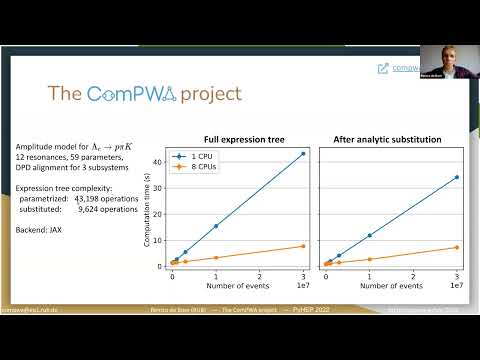

PyHEP2022 Speeding up differentiable programming with a Computer Algebra System

Simple reverse-mode Autodiff in Julia - Computational Chain

PyHEP 2020 Tutorial on Automatic Differentiation

Oliver Strickson - A functional tour of automatic differentiation - Lambda Days 2020

Understanding automatic differentiation (in Julia)

Differentiable Programming via Differentiable Search of Program Structures

Differentiable Programming for Modeling and Control of Dynamical Systems

Differentiate Everything: a Lesson from Deep Learning

Intro of Differentiable Programming - FutureAI 1 (Volume 30dB Up)

Martin Monperrus - Curtain talk: Executable Equations

Basic Automatic Differentiation Theory

Differential Geometry: linearization and push-forward, 1-20-21 part 5

Adjoint Sensitivities of a Non-Linear system of equations | Full Derivation

OCaml Workshop 2020 - AD-OCaml: Algorithmic Differentiation for OCaml

Idea of automatic differentiation. Autograd in Jax, PyTorch. Optimization methods. MSAI @ MIPT.

Differentiable Programming for Oceanography with Patrick Heimbach - #557

[LAFI'22] Probabilistic and Differentiable Programming in Scientific Simulators

Differentiable Earth system models in Julia | JuliaCon 2022

CAMEE Seminar: Deep Learning and Differentiable Physics with David Chambers

TensorIR: An Abstraction for Tensorized Program Optimization | SAMPL Talk 2022/01/20
