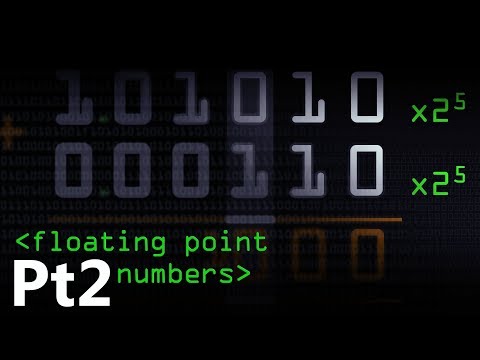

Floating Point Numbers (Part2: Fp Addition) - Computerphile


This video was filmed and edited by Sean Riley.
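The video's topic is floating-point addition. The sketch below is not taken from the video. It is a minimal Python illustration that assumes IEEE 754 binary64 doubles and handles only positive, normal operands (no signs, zeros, subnormals, infinities or NaNs). It follows the usual steps: align the exponents, add the significands, normalize, and round to nearest with ties to even. A hardware adder shifts the smaller operand right and tracks guard, round and sticky bits. This sketch instead keeps the exact sum in a Python integer and rounds once at the end, which yields the same correctly rounded result. `decompose` and `fp_add` are hypothetical helper names.

```python
import math
import sys

PRECISION = 53  # significand bits in an IEEE 754 double (binary64)


def decompose(x: float) -> tuple[int, int]:
    """Split a positive normal double into (M, E), x == M * 2**E, 2**52 <= M < 2**53."""
    # Assumption of this sketch: no signs, zeros, subnormals, infinities or NaNs.
    if not (math.isfinite(x) and x >= sys.float_info.min):
        raise ValueError("sketch only handles positive, finite, normal doubles")
    m, e = math.frexp(x)  # x == m * 2**e with 0.5 <= m < 1
    return int(m * 2**PRECISION), e - PRECISION  # scaling by 2**53 is exact


def fp_add(a: float, b: float) -> float:
    """Add two positive normal doubles step by step (round to nearest, ties to even)."""
    ma, ea = decompose(a)
    mb, eb = decompose(b)

    # 1. Align exponents: express both significands in units of 2**e.
    #    Shifting the larger-exponent operand left keeps the sum exact.
    e = min(ea, eb)
    total = (ma << (ea - e)) + (mb << (eb - e))  # exact sum == total * 2**e

    # 2. Normalize: count the low bits beyond 53-bit precision.
    #    Always >= 1 here, because both significands are >= 2**52.
    shift = total.bit_length() - PRECISION

    # 3. Round to nearest, ties to even, based on the discarded bits.
    q = total >> shift
    rem = total - (q << shift)
    half = 1 << (shift - 1)
    if rem > half or (rem == half and q & 1):
        q += 1
        if q.bit_length() > PRECISION:  # rounding carried into a new top bit
            q >>= 1
            shift += 1

    # 4. Reassemble; math.ldexp raises OverflowError if the result is too large.
    return math.ldexp(q, e + shift)


print(fp_add(0.1, 0.2))  # 0.30000000000000004
print(fp_add(0.1, 0.2) == 0.1 + 0.2)  # True
```

IEEE 754 requires a sum to be the exact result rounded once, so on every input this sketch accepts it should agree with Python's built-in `+`.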


3 Adding Floating Point Numbers

Floating Point Numbers (Part1: Fp vs Fixed) - Computerphile

CO14a - Addition of floating point numbers

Binary 7 – Floating Point Binary Addition

FP IEEE

FP Basic

IEEE Standard for Floating-Point Arithmetic (IEEE 754)

CO15 - Multiply and divide floating point numbers

[28] MIPS Floating Point Addition Circuit Design - MIPS ALU Design

IEEE 754 Floating Point Representation to its Decimal Equivalent

Lecture 19. Floating-Point Unit (FPU)

MIPS Tutorial 30 Floating Point Arithmetic

Floating Point Numbers

Floating point representation | Example-3 | COA | Lec-9 | Bhanu Priya

How to Convert Floating Point Decimal Numbers to Binary

Floating Point Numbers: Solved Problems - 3 | COA

Multiplication of floating point numbers - Computer Organization and Architecture

Example: IEEE 754 (32-Bit) to Decimal

Lecture 9 - Learning how to add two FP numbers: Part 2- 23-11-2016

Lec-10: Floating Point Representation with examples | Number System

Represent 32.75 in IEEE 754 Single Precision 32 bit Format || #ece #engineering #osmaniauniversity

33 addition and subtraction of floating point numbers

Forward to the Past: The Case for Uniformly Strict Floating Point Arithmetic on the JVM - Joe Darcy
