filmov.tv

Faster database indexes (straight from the docs)


——————————————————

00:00 MySQL documentation

00:58 Addresses table

02:17 Hash columns

04:13 concat vs concat_ws

05:40 Generated columns

07:31 The UNHEX function

09:34 Searching via hash

11:22 MD5 hash collisions

12:46 Functional indexes

——————————————————

💬 Follow PlanetScale on social media
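The chapter list only names the techniques, so what follows is a minimal Python sketch built on our own assumptions. It is not the video's code. It mimics MySQL's `CONCAT`, `CONCAT_WS`, and `UNHEX(MD5(...))` in plain Python to show two things. First, joining columns without a separator lets different rows produce the same string, and therefore the same hash. Second, a lookup through a hash column should still compare the original columns, since MD5 can collide. The two-column `(unit, street)` rows, the `|` separator, and the helper names are hypothetical.

```python
import hashlib
from collections import defaultdict

def concat(*parts: str) -> str:
    """Rough stand-in for MySQL CONCAT(): no separator (NULL handling not modeled)."""
    return "".join(parts)

def concat_ws(sep: str, *parts: str) -> str:
    """Rough stand-in for MySQL CONCAT_WS(): values joined by a separator."""
    return sep.join(parts)

def md5_binary(s: str) -> bytes:
    """Like UNHEX(MD5(s)): 16 raw bytes instead of the 32-character hex string."""
    return bytes.fromhex(hashlib.md5(s.encode("utf-8")).hexdigest())

# Hypothetical (unit, street) rows that collide when concatenated without a separator.
row_a = ("1", "23 Oak Ave")
row_b = ("12", "3 Oak Ave")

def build_hash_index(rows):
    """Map each row's hash to the rows that share it (a stand-in for an index on a BINARY(16) column)."""
    index = defaultdict(list)
    for row in rows:
        index[md5_binary(concat_ws("|", *row))].append(row)
    return index

def find(index, row):
    """Look up by hash, then compare the real columns so a hash collision cannot return a wrong row."""
    candidates = index.get(md5_binary(concat_ws("|", *row)), [])
    return [r for r in candidates if r == row]

idx = build_hash_index([row_a, row_b])
print(concat(*row_a) == concat(*row_b))                # both are "123 Oak Ave"
print(concat_ws("|", *row_a) == concat_ws("|", *row_b))
print(find(idx, row_a))
```

A separator only removes the ambiguity if it cannot appear inside the column values. The final equality check in `find` corresponds to adding the original columns to the `WHERE` clause alongside the hash comparison.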

Faster database indexes (straight from the docs)

SQL indexing best practices | How to make your database FASTER!

Database Indexing for Dumb Developers

How do indexes make databases read faster?

What is Indexing? SQL Database Index Explained #sql #nosql #aws #rdbms #nosql #postgresql #mysql

Faster than a regular database index

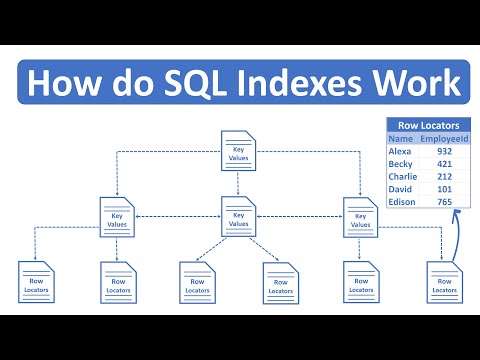

How do SQL Indexes Work

Database Indexing: Unveiling The Secrets of Fast Queries, in just 5 Minutes!

Rocket Your Queries

Database Indexes: What do they do? | Systems Design Interview: 0 to 1 with Google Software Engineer

21. Database Indexing: How DBMS Indexing done to improve search query performance? Explained

Things every developer absolutely, positively needs to know about database indexing - Kai Sassnowski

Database Indexes & Performance Simplified

Don't hide your database indexes!

7 Must-know Strategies to Scale Your Database

Database Indexing Tutorial - How do Database Indexes Work

Optimizing Vector Databases With Indexing Strategies

5 Secrets for making PostgreSQL run BLAZING FAST. How to improve database performance.

How to Create Database Indexes: Databases for Developers: Performance #4

Master MongoDB Indexing in One Video: Boost Your Database Performance Instantly!

CREATE INDEX Statement (SQL) - Creating New Database Indexes

How to Leverage Existing Pages to Index Soon

Performance with Database Indexes

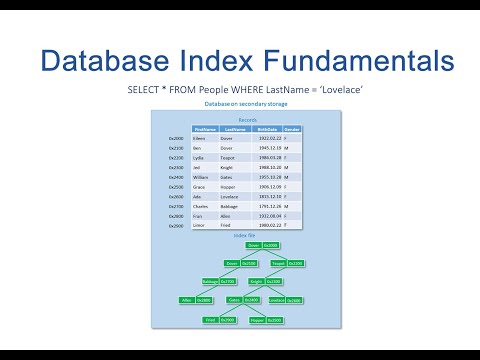

Database Index Fundamentals
