Neural Networks Demystified [Part 3: Gradient Descent]

Neural Networks Demystified

@stephencwelch

Supporting Code:

Link to Yann's Talk:

In this short series, we will build and train a complete artificial neural network in Python. New videos every other Friday.

Part 1: Data + Architecture

Part 2: Forward Propagation

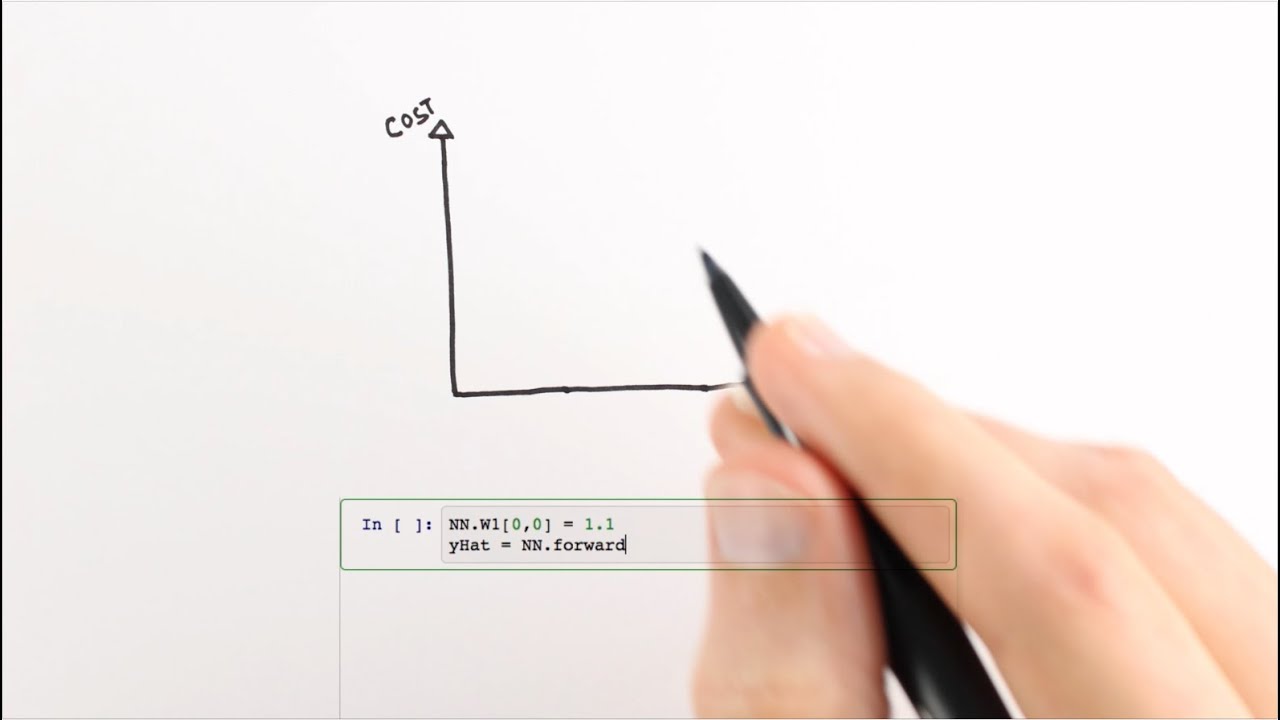

Part 3: Gradient Descent

Part 4: Backpropagation

Part 5: Numerical Gradient Checking

Part 6: Training

Part 7: Overfitting, Testing, and Regularization

Neural Networks Demystified [Part 3: Gradient Descent]

Neural Networks Demystified: Part 3, Welch Labs @ MLconf SF

Neural Networks Demystified Part 3 Gradient Descent

Neural Networks Demystified [Part 4: Backpropagation]

Neural Networks Demystified [Part 2: Forward Propagation]

Neural Networks Demystified [Part 1: Data and Architecture]

Neural Networks Demystified [Part 6: Training]

Neural Networks Demystified [Part 5: Numerical Gradient Checking]

Neural Network Fundamentals (Part3): Regression

Neural Network Training (Part 3): Gradient Calculation

Neural Networks Demystified [Part 7: Overfitting, Testing, and Regularization]

Lecture 5: Neural Networks: Learning the network: Part 3

Neural Network Calculation (Part 3): Feedforward Neural Network Calculation

Neural Networks Demystified: Part 1, Welch Labs @ MLconf SF

Neural Networks Demystified: Part 4, Welch Labs @ MLconf SF

Neural Networks Demystified Part 2 Forward Propagation

Neural Networks Demystified: Your Ultimate Guide to Understanding and Implementing ANNs

Neural Networks Demystified: The Key to Understanding AI & Machine Learning!

Artificial Neural Networks Demystified

CS231n Winter 2016: Lecture 6: Neural Networks Part 3 / Intro to ConvNets

Neural Networks Demystified: Part 5, Welch Labs @ MLconf SF

But what is a neural network? | Chapter 1, Deep learning

Backpropagation Details Pt. 2: Going bonkers with The Chain Rule

Deep Learning using Deep Neural Networks Part- 3
