filmov

tv

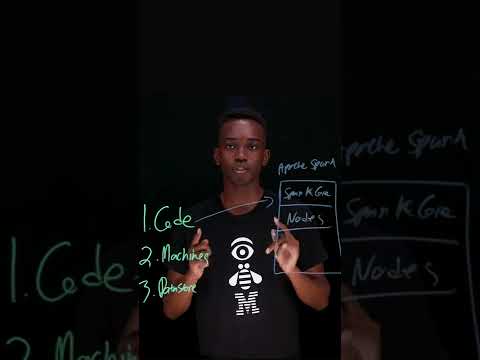

Apache Spark Concepts and Python Examples

Apache Spark is a unified analytics engine for large-scale data processing. It provides high-level APIs in Java, Scala, Python, and R, together with an optimized engine that supports general execution graphs. Spark can be used for a wide range of tasks, from data integration and batch processing to machine learning and graph processing.

Spark Core is the foundation of the Spark engine and provides the basic functionality for data processing. The Resilient Distributed Dataset (RDD) is Spark's fundamental data structure: a collection of records that is split into partitions across the nodes of a cluster so that the partitions can be processed in parallel. On top of Spark Core, Spark provides libraries for SQL queries (Spark SQL), stream processing (Structured Streaming), graph processing (GraphX), and machine learning (MLlib).
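The partition-and-process idea behind RDDs can be sketched in plain Python. This is only a conceptual illustration, not Spark's API or implementation: the function names `split_into_partitions`, `process_partition`, and `parallel_sum_of_squares` are hypothetical, and local threads stand in for cluster nodes.

```python
from concurrent.futures import ThreadPoolExecutor
from functools import reduce
from operator import add


def split_into_partitions(data, num_partitions):
    """Split a list into contiguous, roughly equal chunks (stand-ins for RDD partitions)."""
    size, remainder = divmod(len(data), num_partitions)
    partitions, start = [], 0
    for i in range(num_partitions):
        # The first `remainder` partitions each take one extra element.
        end = start + size + (1 if i < remainder else 0)
        partitions.append(data[start:end])
        start = end
    return partitions


def process_partition(partition):
    """Per-partition work: square every element and sum the results locally."""
    return sum(x * x for x in partition)


def parallel_sum_of_squares(data, num_partitions=4):
    """Process each partition independently, then combine the partial results."""
    partitions = split_into_partitions(data, num_partitions)
    # Assumption for illustration: threads play the role of cluster nodes.
    with ThreadPoolExecutor(max_workers=num_partitions) as pool:
        partial_sums = list(pool.map(process_partition, partitions))
    # Combine step, analogous in spirit to a reduce over partition results.
    return reduce(add, partial_sums, 0)


if __name__ == "__main__":
    numbers = list(range(1, 11))
    print(split_into_partitions(numbers, 4))
    print(parallel_sum_of_squares(numbers))
```

With PySpark installed and a `SparkContext` available as `sc` (as in the `pyspark` shell), a roughly comparable computation would be `sc.parallelize(numbers, 4).map(lambda x: x * x).reduce(add)`. In that case Spark handles the partitioning and the distribution of work itself.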

To reinforce your understanding of Spark, we recommend reviewing the official Apache Spark documentation and experimenting with the Spark shell. Additionally, practicing with real-world datasets and working on projects that involve data processing and analysis can help solidify your skills.

Spark is widely used for large-scale data work, and a strong grasp of its concepts and APIs is valuable in data science, data engineering, and related fields.

Additional Resources:

Find this and all other slideshows for free on our website:
