filmov

tv

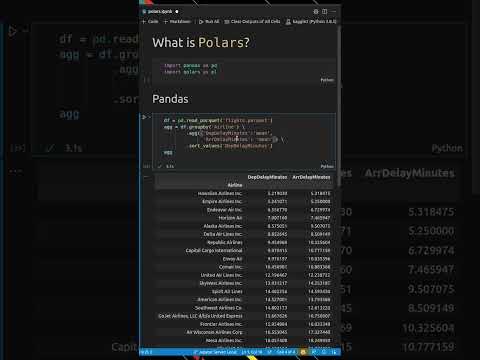

Working with larger-than-memory datasets with Polars

Polars makes it easy to work with very large datasets, even on a regular laptop. In this video I show just how easy it is, using a typical data science query.

This video comes from my Data Analysis with Polars course on Udemy. Check out the course here with a half-price discount:

Want to know more about Polars for high performance data science and ML? Then you can:

- subscribe to my channel

Python Pandas Tutorial 15. Handle Large Datasets In Pandas | Memory Optimization Tips For Pandas

Handling kaggle large datasets on 16Gb RAM | CSV | Yashvi Patel

Working with Large Datasets as a Data Scientist (with Python)

Polars vs Spark - Larger Than Memory Datasets

Peter Hoffmann - Using Pandas and Dask to work with large columnar datasets in Apache Parquet

Read Giant Datasets Fast - 3 Tips For Better Data Science Skills

Using Pandas and Dask to work with large columnar datasets in Apache Parquet

handling large datasets in python

Why and How to use Dask (Python API) for Large Datasets ?

Will Polars replace Pandas for Data Science?

Using the {arrow} and {duckdb} packages to wrangle medical datasets that are Larger than RAM

Tools for Working with Large Datasets

This INCREDIBLE trick will speed up your data processes.

Algorithm Researcher explains how Pytorch Datasets and DataLoaders work

Vector databases are so hot right now. WTF are they?

Handling Large Datasets in Pandas | #42 of 53: The Complete Pandas Course

Spatial Statistics for Huge Datasets and Best Practices

Financial Modeling on Large, Streaming Datasets

Managing Large Datasets

Try limiting rows when creating reporting for big data in Power BI

Very Large Datasets with the GPU Data Frame

Data Visualization Fundamentals - Ingesting large datasets
