filmov

tv

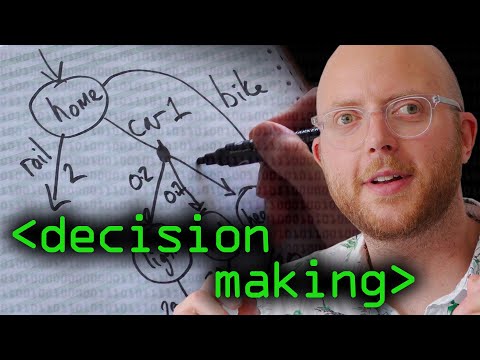

Markov Decision Processes - Computerphile

Deterministic route finding isn't enough for the real world - Nick Hawes of the Oxford Robotics Institute takes us through some problems featuring probabilities.

This video was previously called "Robot Decision Making"

This video was filmed and edited by Sean Riley.

Markov Decision Processes - Computerphile

Markov Decision Process (MDP) - 5 Minutes with Cyrill

Section3 Markov Decision Processes MDPs II

Markov Decision Processes in Reinforcement Learning - Artificial Intelligence

First MDP Problem

Knowledge Graphs - Computerphile

10 1 Introduction to Markov Decision Process

How to solve problems with Reinforcement Learning | Markov Decision Process

MARKOV DECISION PROCESSES: POLICY ITERATION AND APPLICATIONS & EXTENSIONS OF MDPS

NPTEL: NPTEL: An Introduction to Artificial Intelligence -Markov Decision Process)

Example of calculation value function of Markov Decision Process

Ch(e)at GPT? - Computerphile

Chomsky Hierarchy - Computerphile

Introduction to the Markov Decision Process Software by SpiceLogic Inc.

Reinforcement Learning 2: Markov Decision Processes

Markov Decision Processes Two - Georgia Tech - Machine Learning

COMPSCI 188 - 2018-09-18 - Markov Decision Processes (MDPs) Part 1/2

Markov Decision Processes

Section 3 Worksheet Solutions: MDPs

010 Markov Decision Process

Markov decision problems

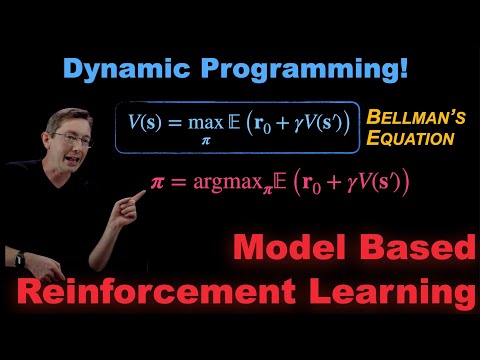

Model Based Reinforcement Learning: Policy Iteration, Value Iteration, and Dynamic Programming

Markov Decision Processes Three - Georgia Tech - Machine Learning

Unit- V Lecture 59 - Markov Decision Process

Комментарии

0:17:42

0:17:42

0:03:36

0:03:36

0:25:27

0:25:27

0:24:55

0:24:55

0:02:10

0:02:10

0:12:05

0:12:05

0:49:03

0:49:03

0:08:04

0:08:04

0:18:23

0:18:23

1:44:26

1:44:26

0:06:21

0:06:21

0:13:52

0:13:52

0:06:57

0:06:57

0:01:38

0:01:38

0:54:04

0:54:04

0:04:18

0:04:18

1:25:00

1:25:00

0:14:01

0:14:01

0:26:16

0:26:16

0:16:27

0:16:27

0:02:23

0:02:23

0:27:10

0:27:10

0:05:02

0:05:02

0:15:20

0:15:20