Improve Your Python Function's Runtime: Efficient File Handling Strategy

Discover how to enhance the performance of your Python functions by optimizing file reading processes. Learn to use dictionaries effectively to avoid redundant file access.

---

Visit these links for original content and any more details, such as alternate solutions, latest updates/developments on topic, comments, revision history etc. For example, the original title of the Question was: Approach for improving runtime of Python function

If anything seems off to you, please feel free to write me at vlogize [AT] gmail [DOT] com.

---

Improve Your Python Function's Runtime: Efficient File Handling Strategy

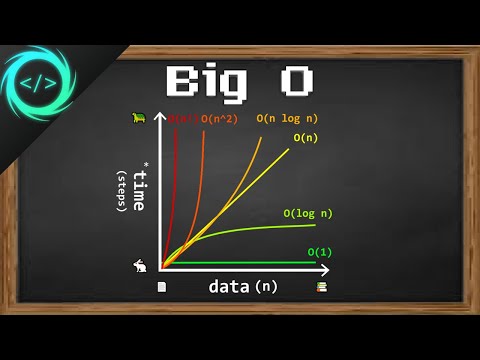

Python functions that handle large datasets or repeat the same work often run slower than they need to. This guide tackles a common case: improving the runtime of a Python function that reads files, processes data, and calculates statistics. If you're dealing with similar issues, keep reading for a streamlined solution!

The Problem: Redundant File Access

Imagine you've built a function that processes various geospatial files based on a dictionary mapping variable names to file paths. When several variables point to the same file, the function reads that file again for each of them. Every repeated read costs I/O time without producing any new data.

Here's a simplified overview of the situation. You have a data dictionary like this:

[[See Video to Reveal this Text or Code Snippet]]
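
The original snippet is not reproduced here. As a rough illustration only, a dictionary of this shape might look like the following; the variable names and file paths are made up for the example and are not taken from the original question.

```python
# Hypothetical example: variable names and paths are illustrative only.
data_dict = {
    "elevation": "rasters/terrain.tif",
    "slope": "rasters/terrain.tif",        # same file as "elevation"
    "rainfall": "rasters/climate.tif",
    "temperature": "rasters/climate.tif",  # same file as "rainfall"
}

# Several variables can share one file, so there are fewer files than variables.
unique_paths = set(data_dict.values())
print(f"{len(data_dict)} variables, {len(unique_paths)} distinct files")
```

Comparing the number of variables with the number of distinct paths is a quick way to see how many reads are avoidable.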

Your function processes each variable and calculates some zonal statistics. The current implementation looks something like this:

[[See Video to Reveal this Text or Code Snippet]]
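
The pattern described above can be sketched as follows. This is not the original code: `read_raster` is a hypothetical stand-in that returns fake values instead of opening a .tif file, and a plain mean stands in for the zonal statistics. The read counter is there only to make the redundancy visible.

```python
from statistics import mean

read_count = 0  # counts simulated file reads


def read_raster(path):
    """Hypothetical stand-in for a raster reader; returns fake pixel values."""
    global read_count
    read_count += 1
    return [1.0, 2.0, 3.0]


def compute_stats_naive(data_dict):
    """Read the file for every variable, even when the path repeats."""
    stats = {}
    for variable, path in data_dict.items():
        values = read_raster(path)  # redundant if this path was already read
        stats[variable] = mean(values)
    return stats


data_dict = {"a": "x.tif", "b": "x.tif", "c": "y.tif"}
stats = compute_stats_naive(data_dict)
print(f"{read_count} reads for {len(set(data_dict.values()))} distinct files")
```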

The Result: Slow Performance

Because the same file is read once per variable rather than once overall, the time spent reading grows with the number of variables instead of the number of distinct files. With large rasters, this can slow down your whole workflow.

The Solution: Optimize File Handling

To enhance the function's runtime substantially, we need to adjust the approach to file handling. Below are the steps to improve the function's efficiency.

Step 1: Read Each File Once

Instead of reading each .tif file multiple times, read each file once and store the result as a NumPy array. Every variable that refers to that file can then reuse the same array, which removes the redundant reads.

Step 2: Transform File Paths Function

Create a new function, transform_filepaths, that builds a dictionary mapping each variable to its respective NumPy array. Here’s how you can implement it:

[[See Video to Reveal this Text or Code Snippet]]

This function ensures that each unique file path is read only once, avoiding unnecessary processing for overlapping file paths.
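
A minimal sketch of such a function, under stated assumptions: the name `transform_filepaths` comes from the text, but the `read_file` parameter is an addition made here so that the reader can be swapped out. In a real workflow it would be whatever call loads a .tif file into a NumPy array.

```python
def transform_filepaths(data_dict, read_file):
    """Map each variable to loaded data, reading each distinct path once.

    `read_file` is an assumed parameter: any callable taking a path and
    returning the loaded data.
    """
    loaded = {}  # path -> data already read
    arrays = {}  # variable -> data
    for variable, path in data_dict.items():
        if path not in loaded:
            loaded[path] = read_file(path)  # first time this path is seen
        arrays[variable] = loaded[path]     # later variables reuse it
    return arrays


# Usage with a fake reader that records which paths it was asked to read.
calls = []


def fake_reader(path):
    calls.append(path)
    return [len(path)]


arrays = transform_filepaths({"a": "x.tif", "b": "x.tif", "c": "y.tif"}, fake_reader)
print(calls)
```

Variables that share a path also share the same object in this sketch, so code that modifies the data in place should copy it first.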

Step 3: Modify Your Main Function

Next, adapt your existing function to accept NumPy arrays instead of file paths. The function then performs no file access of its own; it only computes statistics on data that is already in memory. Here’s an example:

[[See Video to Reveal this Text or Code Snippet]]
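
As a hedged illustration of that change, the sketch below takes already-loaded data and does no file I/O. The function name `compute_stats` and the chosen statistics are assumptions, and plain lists stand in for NumPy arrays so the example runs without extra dependencies.

```python
from statistics import mean


def compute_stats(arrays):
    """Compute summary statistics per variable from data already in memory."""
    return {
        variable: {"mean": mean(values), "min": min(values), "max": max(values)}
        for variable, values in arrays.items()
    }


# Plain lists stand in for NumPy arrays here.
arrays = {"elevation": [10.0, 20.0, 30.0], "rainfall": [1.0, 3.0]}
stats = compute_stats(arrays)
print(stats["elevation"])
```

Because the function no longer knows about file paths, it can be tested with small in-memory inputs like the ones above.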

Step 4: Implement the New Workflow

Finally, your workflow will look as follows:

[[See Video to Reveal this Text or Code Snippet]]
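
Put together, the steps might be sketched end to end like this. Everything here is illustrative: `read_file` returns fake values rather than raster data, `run_workflow` is a hypothetical name, and a simple average stands in for the real statistics.

```python
def read_file(path):
    """Stand-in reader (assumption): returns fake values, not raster data."""
    return [float(len(path)), 0.0]


def run_workflow(data_dict, reader):
    # 1. Read each distinct path exactly once.
    loaded = {path: reader(path) for path in set(data_dict.values())}
    # 2. Point every variable at its already-loaded data.
    arrays = {variable: loaded[path] for variable, path in data_dict.items()}
    # 3. Compute statistics from memory only (a simple average here).
    return {variable: sum(v) / len(v) for variable, v in arrays.items()}


data_dict = {"a": "x.tif", "b": "x.tif", "c": "yy.tif"}
result = run_workflow(data_dict, read_file)
print(result)
```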

Measuring Performance

After implementing these changes, measure the improvement in performance. For instance, the execution time might be around 6.69 seconds before optimization and drop to about 0.42 seconds afterward, roughly a 16-fold speedup.
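
One way to take such a measurement is with `time.perf_counter` from the standard library. The sketch below is an assumption-laden toy: `slow_read` simulates I/O with a short sleep, and `naive` and `cached` are hypothetical stand-ins for the two versions of the function, so the timings it prints say nothing about real raster files.

```python
import time


def timed(func, *args):
    """Return (result, elapsed_seconds) for a single call to func."""
    start = time.perf_counter()
    result = func(*args)
    return result, time.perf_counter() - start


def slow_read(path):
    time.sleep(0.01)  # simulated I/O cost per read
    return [1.0, 2.0]


def naive(data_dict):
    # One read per variable.
    return {v: sum(slow_read(p)) for v, p in data_dict.items()}


def cached(data_dict):
    # One read per distinct path.
    loaded = {p: slow_read(p) for p in set(data_dict.values())}
    return {v: sum(loaded[p]) for v, p in data_dict.items()}


data_dict = {f"var{i}": "same.tif" for i in range(10)}
naive_result, naive_seconds = timed(naive, data_dict)
cached_result, cached_seconds = timed(cached, data_dict)
print(f"naive: {naive_seconds:.3f}s, cached: {cached_seconds:.3f}s")
```

A single timed call is noisy; for real measurements, repeating the call several times and comparing the best or median time gives a steadier picture.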

Conclusion

Redesigning how your functions handle files can significantly reduce runtime, especially when managing large datasets. By reading each file once and passing the loaded data to the functions that need it, you pay the I/O cost only once per file.

Apply these strategies to your own projects and observe the difference!

