Tutorial 37: Entropy In Decision Tree Intuition

Entropy gives a measure of the impurity in a node. When building a decision tree, two important decisions have to be made: which variable is best to split a node on, and what the best split(s) for that variable are.
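To make the idea concrete, here is a minimal Python sketch of how entropy can score the impurity of a node and how information gain (the drop in entropy after a split) can be used to compare candidate variables. The function names `entropy`, `information_gain`, and `best_feature`, as well as the toy weather data, are assumptions made for illustration; they are not taken from the video.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels.

    0.0 means the node is pure; 1.0 is the maximum for two balanced classes.
    """
    n = len(labels)
    if n == 0:
        return 0.0
    probs = [count / n for count in Counter(labels).values()]
    return sum(p * math.log2(1 / p) for p in probs)


def information_gain(parent, children):
    """Parent entropy minus the size-weighted entropy of the child nodes."""
    n = len(parent)
    weighted = sum(len(child) / n * entropy(child) for child in children)
    return entropy(parent) - weighted


def best_feature(rows, labels):
    """Pick the categorical feature whose split yields the highest gain.

    rows is a list of dicts mapping a (hypothetical) feature name to a value.
    """
    gains = {}
    for feature in rows[0]:
        groups = {}
        for row, label in zip(rows, labels):
            groups.setdefault(row[feature], []).append(label)
        gains[feature] = information_gain(labels, list(groups.values()))
    return max(gains, key=gains.get), gains


# Made-up toy data: "outlook" separates the classes perfectly, "windy" does not.
rows = [
    {"outlook": "sunny", "windy": "no"},
    {"outlook": "sunny", "windy": "yes"},
    {"outlook": "rainy", "windy": "no"},
    {"outlook": "rainy", "windy": "yes"},
]
labels = ["yes", "yes", "no", "no"]

feature, gains = best_feature(rows, labels)
print(feature, gains)
```

In this toy example the split on `outlook` produces two pure child nodes, so it has the larger information gain and would be chosen first. Real decision tree libraries also handle numeric thresholds and other criteria such as the Gini index, which this sketch leaves out.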

Buy the best book on machine learning and deep learning with Python, sklearn, and TensorFlow from the link below

amazon url:

Connect with me here:

Subscribe to my unboxing channel

Below are the various playlists created on ML, Data Science, and Deep Learning. Please subscribe and support the channel. Happy Learning!

You can buy my book on Finance with Machine Learning and Deep Learning from the URL below

🙏🙏🙏🙏🙏🙏🙏🙏

YOU JUST NEED TO DO 3 THINGS to support my channel: LIKE, SHARE & SUBSCRIBE TO MY YOUTUBE CHANNEL

How to find the Entropy and Information Gain in Decision Tree Learning by Mahesh Huddar

Entropy (for data science) Clearly Explained!!!

Gini Index and Entropy|Gini Index and Information gain in Decision Tree|Decision tree splitting rule

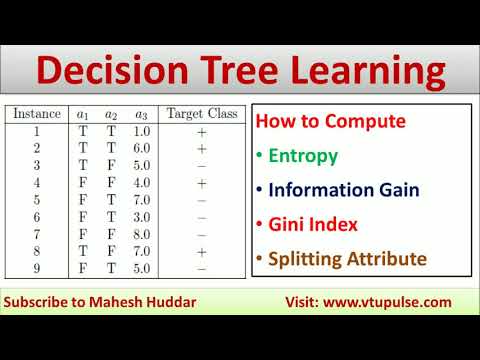

How to find Entropy Information Gain | Gini Index Splitting Attribute Decision Tree by Mahesh Huddar

Entropy Calculation Part 1 - Intro to Machine Learning

7.6.2. Entropy, Information Gain & Gini Impurity - Decision Tree

Entropy Calculation Part 3 - Intro to Machine Learning

How to find Entropy | Information Gain | Gain in terms of Gini Index | Decision Tree Mahesh Huddar

How to find the Entropy Given Probabilities decision Tree Learning Machine Learning by Mahesh Huddar

Decision Trees: Entropy Simple Example

Decision Tree Classifier - Entropy

Calculating Decision Tree Entropy (Simple Example)

Shannon's Entropy Decision Tree | Machine Learning | Tutorial For Beginners | Great Learning

Tutorial 38- Decision Tree Information Gain

#056- Entropy In Decision Tree

Formula of Entropy- Decision Tree | Machine Learning-

Decision Tree: Information Gain and Entropy | Machine Learning | Solved Example

[Machine Learning] Decision Tree - ID3 algorithm (entropy + Information Gain)

Lec-10: Decision Tree 🌲 ID3 Algorithm with Example & Calculations 🧮

1. Decision Tree | ID3 Algorithm | Solved Numerical Example | by Mahesh Huddar

Entropy for Machine Learning

How to Overcome Entropy in Your Business…

Tamasha Dekho 😂 IITian Rocks Relatives Shock 😂😂😂 #JEEShorts #JEE #Shorts
