filmov.tv

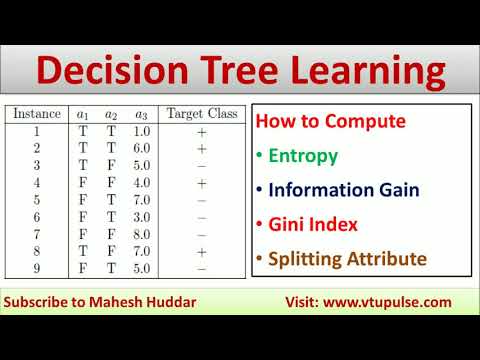

How to find the Entropy and Information Gain in Decision Tree Learning by Mahesh Huddar

In this video, I will discuss how to compute entropy and information gain from a set of training examples when constructing a decision tree.
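A minimal Python sketch of the two quantities, assuming categorical attributes, base-2 logarithms (so entropy is in bits), and training examples stored as a list of dicts. It uses the standard definitions Entropy(S) = -Σ p_i log2 p_i and Gain(S, A) = Entropy(S) - Σ_v (|S_v| / |S|) Entropy(S_v). The function names and the toy `rows` data are hypothetical illustrations, not taken from the video.

```python
from collections import Counter
from math import log2


def entropy(labels):
    """Shannon entropy (bits) of a list of class labels.

    Written as sum(p * log2(1/p)), which equals -sum(p * log2(p))
    but avoids returning -0.0 for a pure set.
    """
    n = len(labels)
    return sum((c / n) * log2(n / c) for c in Counter(labels).values())


def information_gain(rows, attribute, target):
    """Entropy of `target` minus the weighted entropy of the subsets
    produced by splitting `rows` on each value of `attribute`."""
    parent = entropy([r[target] for r in rows])
    n = len(rows)
    # Group the target labels by the attribute's value.
    groups = {}
    for r in rows:
        groups.setdefault(r[attribute], []).append(r[target])
    weighted = sum(len(g) / n * entropy(g) for g in groups.values())
    return parent - weighted


# Hypothetical toy data: 3 "yes" and 3 "no" examples.
rows = [
    {"outlook": "sunny", "play": "no"},
    {"outlook": "sunny", "play": "no"},
    {"outlook": "overcast", "play": "yes"},
    {"outlook": "rain", "play": "yes"},
    {"outlook": "rain", "play": "no"},
    {"outlook": "overcast", "play": "yes"},
]

print(entropy([r["play"] for r in rows]))          # 1.0 (an even 3/3 split)
print(information_gain(rows, "outlook", "play"))   # about 0.667
```

Here "sunny" and "overcast" are pure subsets (entropy 0) and "rain" is an even split (entropy 1), so the weighted child entropy is 1/3 and the gain is 1 - 1/3. At each node, the attribute with the largest gain would be chosen as the splitting attribute.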

entropy nptel,

entropy explained,

entropy data mining,

entropy data mining example,

entropy based discretization example data mining,

entropy machine learning,

entropy machine learning example,

entropy calculation machine learning,

entropy in machine learning in hindi,

information gain decision tree,

gain decision tree,

information gain and entropy in the decision tree,

information gain,

information gain and entropy,

information gain feature selection,

information gain calculation,

information gain and Gini index,

information gain for continuous-valued attributes
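The tags also mention the Gini index and continuous-valued attributes. A sketch of one common approach, assuming a numeric attribute and a binary split: sort the examples by value, try the midpoint between each pair of consecutive distinct values as a threshold, and keep the threshold with the largest impurity reduction. The impurity function is pluggable, so the same search works with entropy (information gain) or Gini impurity (1 - Σ p_i²). The names `gini` and `best_threshold` and the temperature data are hypothetical; this simply tries every midpoint rather than only the boundaries where the class label changes.

```python
from collections import Counter
from math import log2


def entropy(labels):
    """Shannon entropy (bits) of a list of class labels."""
    n = len(labels)
    return sum((c / n) * log2(n / c) for c in Counter(labels).values())


def gini(labels):
    """Gini impurity: 1 minus the sum of squared class proportions."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def best_threshold(values, labels, impurity=entropy):
    """Return (threshold, gain) for the best binary split `value <= threshold`.

    Candidate thresholds are midpoints between consecutive distinct sorted
    values; gain is parent impurity minus weighted child impurity.
    """
    pairs = sorted(zip(values, labels))
    n = len(pairs)
    parent = impurity(labels)
    best = (None, 0.0)
    for i in range(1, n):
        lo, hi = pairs[i - 1][0], pairs[i][0]
        if lo == hi:
            continue  # no threshold fits between equal values
        t = (lo + hi) / 2
        left = [y for _, y in pairs[:i]]
        right = [y for _, y in pairs[i:]]
        gain = parent - len(left) / n * impurity(left) - len(right) / n * impurity(right)
        if gain > best[1]:
            best = (t, gain)
    return best


# Hypothetical continuous attribute (temperature) with class labels.
temps = [64, 65, 72, 75]
play = ["no", "no", "yes", "yes"]

print(best_threshold(temps, play))                # (68.5, 1.0) with entropy
print(best_threshold(temps, play, impurity=gini)) # (68.5, 0.5) with Gini
```

Both criteria pick the same threshold here because the split at 68.5 yields two pure subsets; on real data, entropy and Gini impurity usually rank splits similarly but can disagree.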

How to find the Entropy and Information Gain in Decision Tree Learning by Mahesh Huddar

Entropy Calculation Part 1 - Intro to Machine Learning

How to Calculate Standard Entropy of Reaction using Standard Entropy Examples & Practice Problem...

15.2/R1.4.1 Predict the entropy change for a given reaction or process [HL IB Chemistry]

Entropy (for data science) Clearly Explained!!!

Intuitively Understanding the Shannon Entropy

How to Calculate the Standard Entropy Change for a Reaction

Entropy Change For Melting Ice, Heating Water, Mixtures & Carnot Cycle of Heat Engines - Physics

Machine Learning and Deep Learning - Fundamentals and Applications

Tutorial 37: Entropy In Decision Tree Intuition

Calculating Entropy Changes in the Surroundings

Gibbs Free Energy - Entropy, Enthalpy & Equilibrium Constant K

How To Calculate Entropy Changes: Ideal Gases

Entropy and Reactions Problem (AP Chemistry)

What is entropy? - Jeff Phillips

The Laws of Thermodynamics, Entropy, and Gibbs Free Energy

Decision Tree 🌳 Example | Calculate Entropy, Information ℹ️ Gain | Supervised Learning

Entropy - 2nd Law of Thermodynamics - Enthalpy & Microstates

Standard Molar Entropy calculation

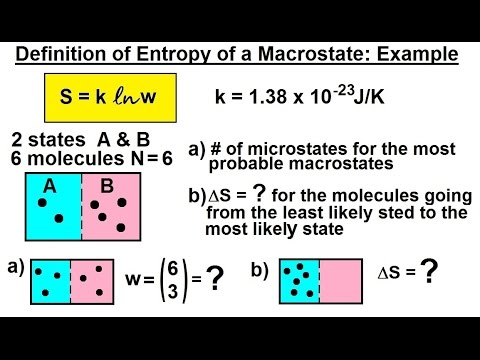

Physics 32.5 Statistical Thermodynamics (16 of 39) Definition of Entropy of a Microstate: Example

How To Calculate Entropy Changes: Mixing Ideal Gases

How to Predict Sign of Delta S (Entropy Change) Practice Problems, Examples, Rules, Summary

How to find Entropy Information Gain | Gini Index Splitting Attribute Decision Tree by Mahesh Huddar

Entropy Calculation Part 4 - Intro to Machine Learning