
Moritz Neeb - Bayesian Optimization and its application to Neural Networks


PyData Berlin 2016

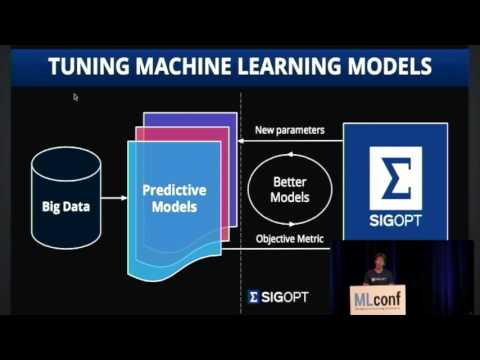

This talk will be about the fundamentals of Bayesian Optimization and how it can be used to train ML algorithms in Python. To this end, we'll consider its application to Neural Networks. The NNs will be implemented in Keras, and the Bayesian Optimization will be carried out with hyperas/hyperopt.

Have you ever failed to train a Neural Network? Spent hours just getting it to learn anything at all? If you ask an expert how she does it, the answer might be something like: "It needs a lot of experience and some luck." If you know this problem, then this talk is for you. Let's steer our luck with ML!

When tuning hyperparameters, an expert relies on a model she has built up: a set of expectations about how the output might change when a certain parameter is adjusted. For example, what happens to your Convolutional Neural Network if you change the dropout from 0.5 to 0.25?
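
One way to make such an "expert model" explicit is a Gaussian Process (GP) surrogate: from a few settings that have already been evaluated, it predicts the validation loss at an untried setting together with an uncertainty. The sketch below is a minimal pure-Python illustration, not the talk's code. The three (dropout, validation loss) observations are hypothetical, and the RBF kernel, its length scale and variance, the noise level, and the helper names (`rbf`, `gp_posterior`, etc.) are illustrative assumptions.

```python
import math

def rbf(a, b, length_scale=0.2, variance=0.01):
    """Squared-exponential (RBF) kernel between two scalar hyperparameter values."""
    return variance * math.exp(-0.5 * ((a - b) / length_scale) ** 2)

def cholesky(K):
    """Lower-triangular L with K = L L^T (K must be symmetric positive definite)."""
    n = len(K)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                L[i][j] = math.sqrt(K[i][i] - s)
            else:
                L[i][j] = (K[i][j] - s) / L[j][j]
    return L

def forward_sub(L, b):
    """Solve L x = b for lower-triangular L."""
    x = []
    for i in range(len(b)):
        x.append((b[i] - sum(L[i][k] * x[k] for k in range(i))) / L[i][i])
    return x

def back_sub_t(L, b):
    """Solve L^T x = b for lower-triangular L."""
    n = len(b)
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (b[i] - sum(L[k][i] * x[k] for k in range(i + 1, n))) / L[i][i]
    return x

def gp_posterior(xs, ys, x_new, noise=1e-6):
    """Posterior mean and standard deviation of a GP surrogate at x_new."""
    y_mean = sum(ys) / len(ys)                 # centre the observed losses
    centered = [y - y_mean for y in ys]
    K = [[rbf(a, b) + (noise if i == j else 0.0) for j, b in enumerate(xs)]
         for i, a in enumerate(xs)]
    L = cholesky(K)
    alpha = back_sub_t(L, forward_sub(L, centered))   # alpha = K^{-1} y
    k_star = [rbf(x_new, a) for a in xs]
    mean = y_mean + sum(k * a for k, a in zip(k_star, alpha))
    v = forward_sub(L, k_star)                        # v = L^{-1} k_*
    var = rbf(x_new, x_new) - sum(vi * vi for vi in v)
    return mean, math.sqrt(max(var, 0.0))

dropouts = [0.1, 0.5, 0.7]        # hypothetical settings already tried
val_losses = [0.42, 0.35, 0.40]   # hypothetical validation losses
mean, std = gp_posterior(dropouts, val_losses, 0.25)
print(f"predicted loss at dropout=0.25: {mean:.3f} +/- {std:.3f}")
```

At an already-evaluated setting the predicted uncertainty collapses to roughly the noise level, while between observations it grows; that uncertainty is what lets the optimizer weigh "try something new" against "stay near what worked".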

Bayesian Optimization is a method that builds exactly this kind of model. It uses, for example, Gaussian Processes to decide which parameter change might bring you the most benefit; if the change does not pay off, the model is updated accordingly.
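
Deciding "which parameter change might bring the most benefit" is the job of an acquisition function. A common choice for a GP surrogate is Expected Improvement (EI); the abstract does not say which acquisition function the talk uses, and hyperopt's default `tpe.suggest` is based on a Tree-structured Parzen Estimator rather than a GP. The sketch below assumes minimisation of validation loss and takes the surrogate's predicted means and standard deviations as given; the candidate values, predictions, and function names are hypothetical.

```python
import math

def norm_pdf(z):
    """Standard normal density."""
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)

def norm_cdf(z):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def expected_improvement(mu, sigma, best, xi=0.0):
    """EI for minimisation: expected amount by which a candidate beats `best`.

    `xi` is an optional margin that pushes the search toward exploration.
    """
    if sigma <= 0.0:
        return 0.0
    improvement = best - mu - xi
    z = improvement / sigma
    return improvement * norm_cdf(z) + sigma * norm_pdf(z)

def propose_next(candidates, means, stds, best, xi=0.0):
    """Return the candidate (and its score) with the highest expected improvement."""
    scores = [expected_improvement(m, s, best, xi) for m, s in zip(means, stds)]
    i = max(range(len(candidates)), key=scores.__getitem__)
    return candidates[i], scores[i]

candidates = [0.25, 0.4, 0.6]   # hypothetical dropout values to choose from
means = [0.388, 0.352, 0.37]    # hypothetical surrogate mean predictions
stds = [0.05, 0.01, 0.02]       # hypothetical surrogate standard deviations
next_x, score = propose_next(candidates, means, stds, best=0.35)
print(f"next dropout to try: {next_x} (EI={score:.4f})")
```

Here the uncertain candidate at 0.25 wins even though its predicted mean is worse than the current best, which is the exploration term `sigma * norm_pdf(z)` at work. After the proposed setting is trained and evaluated, its loss is added to the observations, the surrogate is refit, and the loop repeats.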


I am planning to split this talk 50:50 into theory and practice.

00:00 Welcome!

