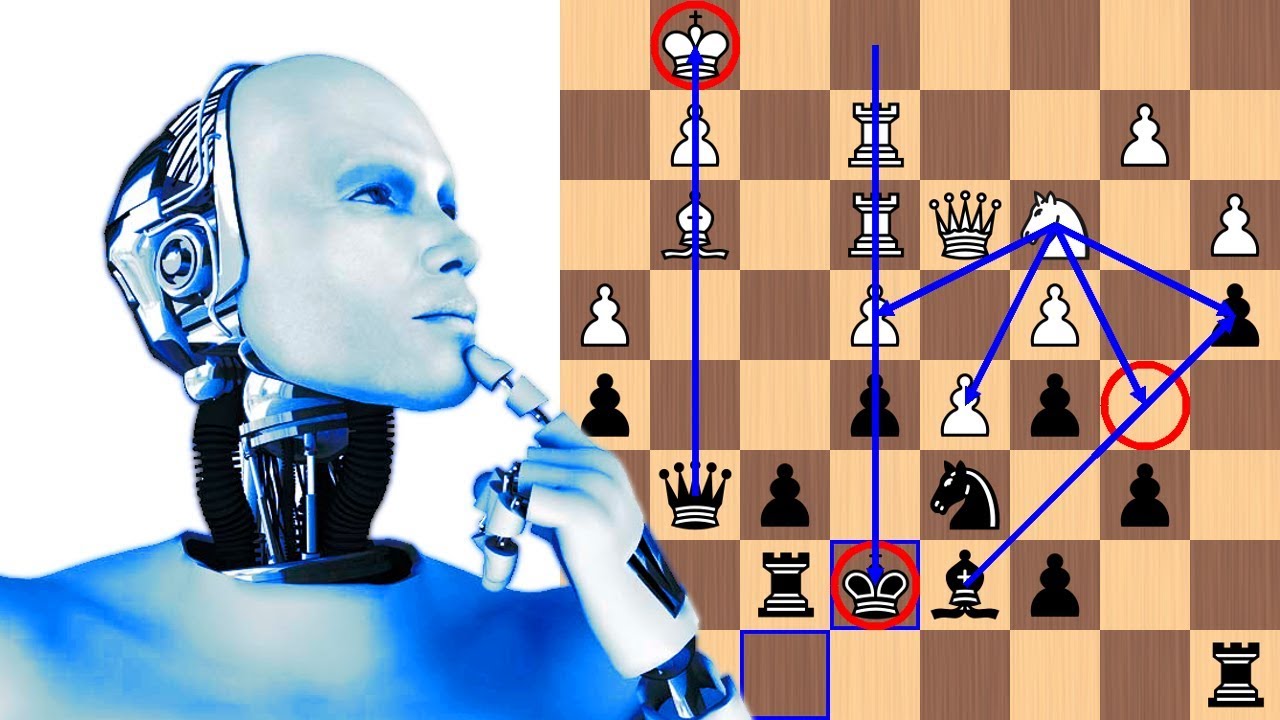

Google's self-learning AI AlphaZero masters chess in 4 hours

Google's AI AlphaZero has shocked the chess world. Leaning on its deep neural networks and a general-purpose reinforcement learning algorithm, DeepMind's AlphaZero learned to play chess far beyond master level, beating Stockfish 8, the top chess engine of 2016, in a 100-game match. AlphaZero scored 28 wins, 72 draws, and 0 losses. Impressive, right? And it took just 4 hours of self-play to reach that level of proficiency. What the chess world has witnessed from this historic event is, simply put, mind-blowing! AlphaZero vs Magnus Carlsen, anyone? :)

19-page paper via Cornell University Library

PGN:

1. e4 e5 2. Nf3 Nc6 3. Bb5 Nf6 4. d3 Bc5 5. Bxc6 dxc6 6. O-O Nd7 7. c3 O-O 8. d4 Bd6 9. Bg5 Qe8 10. Re1 f6 11. Bh4 Qf7 12. Nbd2 a5 13. Bg3 Re8 14. Qc2 Nf8 15. c4 c5 16. d5 b6 17. Nh4 g6 18. Nhf3 Bd7 19. Rad1 Re7 20. h3 Qg7 21. Qc3 Rae8 22. a3 h6 23. Bh4 Rf7 24. Bg3 Rfe7 25. Bh4 Rf7 26. Bg3 a4 27. Kh1 Rfe7 28. Bh4 Rf7 29. Bg3 Rfe7 30. Bh4 g5 31. Bg3 Ng6 32. Nf1 Rf7 33. Ne3 Ne7 34. Qd3 h5 35. h4 Nc8 36. Re2 g4 37. Nd2 Qh7 38. Kg1 Bf8 39. Nb1 Nd6 40. Nc3 Bh6 41. Rf1 Ra8 42. Kh2 Kf8 43. Kg1 Qg6 44. f4 gxf3 45. Rxf3 Bxe3+ 46. Rfxe3 Ke7 47. Be1 Qh7 48. Rg3 Rg7 49. Rxg7+ Qxg7 50. Re3 Rg8 51. Rg3 Qh8 52. Nb1 Rxg3 53. Bxg3 Qh6 54. Nd2 Bg4 55. Kh2 Kd7 56. b3 axb3 57. Nxb3 Qg6 58. Nd2 Bd1 59. Nf3 Ba4 60. Nd2 Ke7 61. Bf2 Qg4 62. Qf3 Bd1 63. Qxg4 Bxg4 64. a4 Nb7 65. Nb1 Na5 66. Be3 Nxc4 67. Bc1 Bd7 68. Nc3 c6 69. Kg1 cxd5 70. exd5 Bf5 71. Kf2 Nd6 72. Be3 Ne4+ 73. Nxe4 Bxe4 74. a5 bxa5 75. Bxc5+ Kd7 76. d6 Bf5 77. Ba3 Kc6 78. Ke1 Kd5 79. Kd2 Ke4 80. Bb2 Kf4 81. Bc1 Kg3 82. Ke2 a4 83. Kf1 Kxh4 84. Kf2 Kg4 85. Ba3 Bd7 86. Bc1 Kf5 87. Ke3 Ke6

I'm a self-taught National Master in chess out of Pennsylvania, USA, who was introduced to the game by my father in 1988 at the age of 8. The purpose of this channel is to share my knowledge of chess to help others improve their game. I enjoy continuing to improve my understanding of this great game, albeit slowly. Consider subscribing here on YouTube for frequent content, and/or connecting via any or all of the social media platforms below. Your support is greatly appreciated. Take care, bye. :)