Understanding PyTorch Tensor Reshaping: Differences Between Methods Using Transpose

Explore the differences in output between reshaping tensors in `PyTorch` using transpose versus direct view methods. Discover why memory layout affects tensor results in a clear, detailed explanation.

---

Visit these links for original content and any more details, such as alternate solutions, latest updates/developments on topic, comments, revision history etc. For example, the original title of the Question was: Pytorch different outputs between with transpose

If anything seems off to you, please feel free to write me at vlogize [AT] gmail [DOT] com.

---

The Problem: Confusion About Tensor Outputs

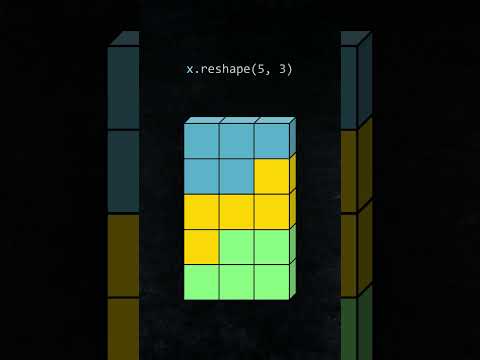

Consider a tensor of shape (B, N^2, C) that you want to turn into shape (B, C, N, N). There are two obvious ways to do it: transpose the last two dimensions and then reshape, or reshape directly with .view(). Here is a simple representation of those tensor operations:

[[See Video to Reveal this Text or Code Snippet]]
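Since the original snippet is not shown here, the following is only a minimal sketch of what the two approaches might look like. The sizes, the variable names (`x`, `out_transpose`, `out_view`) and the use of `reshape` after `transpose` are assumptions made for illustration, not the code from the question.

```python
import torch

# Hypothetical small sizes so the result is easy to inspect.
B, N, C = 2, 3, 4
x = torch.arange(B * N * N * C).view(B, N * N, C)

# Method 1: bring the channel axis forward, then split N^2 into (N, N).
out_transpose = x.transpose(1, 2).reshape(B, C, N, N)

# Method 2: reinterpret the existing element order under the new shape.
out_view = x.view(B, C, N, N)

same_shape = out_transpose.shape == out_view.shape
same_values = torch.equal(out_transpose, out_view)
print(same_shape, same_values)
```

In this sketch only the first method satisfies `out_transpose[b, c, i, j] == x[b, i * N + j, c]`, which is usually what a (B, N^2, C) to (B, C, N, N) conversion is meant to do.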

Both methods produce tensors of the same shape, yet the values inside them are arranged differently. What is causing this discrepancy?

The Solution: Understanding Memory Layout

1. What Happens with Transpose?

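Transpose swaps two dimensions, so it changes which element sits at each index. A view, by contrast, only regroups the elements in the order they already appear. For example, let’s take a smaller tensor as an illustration: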

[[See Video to Reveal this Text or Code Snippet]]
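A small stand-in for the illustration, assuming a 2x3 tensor named `A`, its transpose `B`, and a direct view `C`. The names follow the discussion in this article, but the concrete values are an assumption.

```python
import torch

A = torch.arange(6).view(2, 3)  # [[0, 1, 2], [3, 4, 5]]
B = A.transpose(0, 1)           # swaps rows and columns
C = A.view(3, 2)                # regroups the same element order

print(B.tolist())  # [[0, 3], [1, 4], [2, 5]]
print(C.tolist())  # [[0, 1], [2, 3], [4, 5]]
```

`B` and `C` both have shape (3, 2), but only `B` is the transpose of `A`.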

2. Memory Arrangement: A Key Concept

Here's where it gets interesting. Calling .view() does not modify the underlying data: it regroups the elements in the order the tensor already presents them (row-major over its current shape). Transpose changes that order by swapping strides, so flattening or viewing a transposed tensor yields a different sequence than doing the same to the original, even though both hold the same values.

Here is how the memory arrangement looks for both tensors:

For tensor A from the example above, the flattened version appears as follows:

[[See Video to Reveal this Text or Code Snippet]]

By comparison, B yields a different sequence when flattened, even though it holds exactly the same values as A.

[[See Video to Reveal this Text or Code Snippet]]
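To make the comparison concrete, here is a sketch that flattens both tensors and inspects their strides. The 2x3 example tensor is an assumption, while `flatten`, `stride` and `is_contiguous` are standard `torch.Tensor` methods.

```python
import torch

A = torch.arange(6).view(2, 3)
B = A.transpose(0, 1)

flat_A = A.flatten().tolist()  # [0, 1, 2, 3, 4, 5]
flat_B = B.flatten().tolist()  # [0, 3, 1, 4, 2, 5]

# Strides say how many elements to skip per step along each dimension.
strides_A = A.stride()  # (3, 1)
strides_B = B.stride()  # (1, 3)

print(flat_A, strides_A, A.is_contiguous())
print(flat_B, strides_B, B.is_contiguous())
```

Because `B` is not contiguous, `B.flatten()` has to copy the elements into a new buffer in `B`'s logical order.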

This variation brings us to a crucial takeaway: A and B hold the same values, but B reads them in a different order. In PyTorch, transpose returns a view over the same storage with swapped strides, so B is no longer contiguous.

3. Key Takeaways on Reshaping

Reshaping Doesn't Change Data: Using .view() does not alter or copy the tensor's data. It returns a tensor that shares the same storage and only changes the shape under which you index it.

Different Outputs: In the end, tensor C keeps A's element order (and shares data with A). Tensor B also shares A's storage, but its transposed strides give it a different logical element order, so any later reshape of B groups the values differently.
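One way to check which tensors share storage is to compare `data_ptr()` values and write through one of them. This is a sketch on the same assumed 2x3 example, with `B_copy` as a hypothetical name for a contiguous copy.

```python
import torch

A = torch.arange(6).view(2, 3)
B = A.transpose(0, 1)
C = A.view(3, 2)

# Both the transpose and the view point at A's storage.
shares_B = B.data_ptr() == A.data_ptr()
shares_C = C.data_ptr() == A.data_ptr()

# A write through A is visible through B and C.
A[0, 1] = 100
seen_in_B = B[1, 0].item()
seen_in_C = C[0, 1].item()

# .contiguous() copies B into fresh memory laid out in B's logical order.
B_copy = B.contiguous()
copy_is_separate = B_copy.data_ptr() != A.data_ptr()

print(shares_B, shares_C, seen_in_B, seen_in_C, copy_is_separate)
```

If a transposed tensor needs to be reshaped in a way that .view() rejects, calling .contiguous() first or using .reshape() are the usual options. Both may copy data.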

Conclusion: Mastering Tensors in PyTorch

Understanding how operations like transpose and view affect tensor outputs is critical for effective programming with PyTorch. This knowledge is not only fundamental for debugging but also for optimizing tensor operations and improving performance in your applications.

By appreciating the differences in memory layout and output when manipulating tensors, you can make more informed decisions for your data processing needs in PyTorch. Happy coding!
