Understanding Metropolis-Hastings algorithm


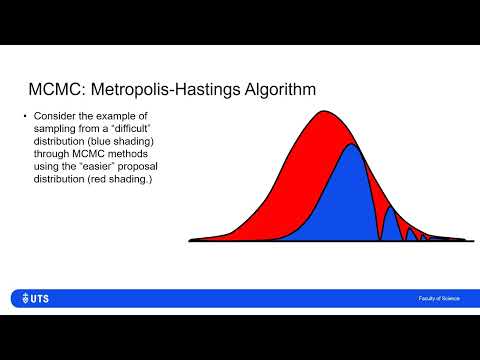

Metropolis-Hastings is an algorithm that allows us to sample from a generic probability distribution, which we'll call our target distribution, even if we don't know the normalizing constant. To do this, we construct and sample from a Markov chain whose stationary distribution is the target distribution that we're looking for. It consists of picking an arbitrary starting value and then iteratively accepting or rejecting candidate samples drawn from another distribution, one that is easy to sample from.

Let's say we want to produce samples from a target distribution. We're going to call it p of theta. But we only know it up to a normalizing constant, that is, up to proportionality. What we have is g of theta. We don't know the normalizing constant, perhaps because it is difficult to integrate, so we only have g of theta to work with.

The Metropolis-Hastings algorithm will proceed as follows. The first step is to select an initial value for theta. We're going to call it theta-naught. The next step is, for a large number of iterations, so for i from 1 up to some large number m, to repeat the following. The first thing we're going to do is draw a candidate. We'll call our candidate theta-star. And we're going to draw this from a proposal distribution. We're going to call the proposal distribution q of theta-star, given the previous iteration's value of theta. We'll talk more about this q distribution soon.

The next step is to compute the following ratio. We're going to call this alpha. It is this g function evaluated at the candidate, divided by the density of q evaluated at the candidate given the previous iteration's value. And all of this will be divided by g evaluated at the previous iteration's value, that divided by q evaluated at the previous value given the candidate. If we rearrange this, it'll be g of the candidate times q of the previous value given the candidate, divided by g at the previous value times q evaluated at the candidate given the previous value.....
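
The steps described so far can be sketched in Python. This is only an illustrative sketch under stated assumptions, not the lecture's own code. It assumes a normal random-walk proposal for q, uses a standard normal known only up to its constant as the example g, and applies the usual rule of accepting the candidate with probability min(1, alpha), which the excerpt mentions only as "accepting or rejecting". The names `metropolis_hastings`, `normal_pdf`, and `proposal_sd` are hypothetical and chosen for this example.

```python
import math
import random


def normal_pdf(x, mean, sd):
    """Density of a normal distribution, used here as the proposal density q."""
    z = (x - mean) / sd
    return math.exp(-0.5 * z * z) / (sd * math.sqrt(2.0 * math.pi))


def metropolis_hastings(g, theta0, m, proposal_sd=1.0, seed=0):
    """Sketch of Metropolis-Hastings with a normal random-walk proposal.

    g           -- unnormalized target density, g(theta) proportional to p(theta)
    theta0      -- initial value (theta-naught); g(theta0) must be positive
    m           -- number of iterations
    proposal_sd -- assumed tuning parameter: standard deviation of q
    """
    rng = random.Random(seed)
    theta = theta0
    samples = []
    accepted = 0
    for _ in range(m):
        # Draw a candidate theta* from q(theta* | previous theta).
        candidate = rng.gauss(theta, proposal_sd)

        # alpha = g(theta*) q(prev | theta*) / (g(prev) q(theta* | prev))
        numerator = g(candidate) * normal_pdf(theta, candidate, proposal_sd)
        denominator = g(theta) * normal_pdf(candidate, theta, proposal_sd)
        alpha = numerator / denominator

        # Assumed standard rule: accept with probability min(1, alpha),
        # otherwise keep the previous value.
        if rng.random() < min(1.0, alpha):
            theta = candidate
            accepted += 1
        samples.append(theta)
    return samples, accepted / m


def g(theta):
    """Example target: a standard normal with its normalizing constant dropped."""
    return math.exp(-0.5 * theta * theta)


samples, acceptance_rate = metropolis_hastings(g, theta0=0.0, m=20000)
kept = samples[2000:]  # discard early draws as burn-in (an illustrative choice)
mean = sum(kept) / len(kept)
variance = sum((s - mean) ** 2 for s in kept) / len(kept)
print(f"acceptance rate: {acceptance_rate:.2f}")
print(f"sample mean: {mean:.2f}, sample variance: {variance:.2f}")
```

Because a normal random-walk proposal is symmetric, the two q terms in alpha cancel in this particular example. They are written out in full only to mirror the ratio as described, so that a non-symmetric proposal could be swapped in without changing the rest of the loop.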
