Intuitively Understanding the Shannon Entropy

Intuitively Understanding the Shannon Entropy

Entropy (for data science) Clearly Explained!!!

Intuitively Understanding the Cross Entropy Loss

Intuitively Understanding the KL Divergence

Shannon's Information Entropy (Physical Analogy)

Understanding Shannon entropy: (4) continuous distributions

Why Information Theory is Important - Computerphile

Shannon Entropy Intuitively #education #informationtheory #intuitivelearning #shannon #animated

Claude Shannon Explains Information Theory

The Key Equation Behind Probability

Understanding Shannon entropy: (3) permutations and variability

The Statistical Interpretation of Entropy

Understanding Shannon entropy: (1) variability within a distribution

Intro to Information Theory | Digital Communication | Information Technology

Understanding Shannon entropy: (6) Connection to physics

Intro to Shannon Entropy

Quantum Machine Learning 02: Kolmogorov Complexity & Shannon Entropy

1. Shannon's Entropy - The Problem

Understanding Shannon entropy: (5) invariant distributions

5. Shannon's Entropy - Rational probabilities

The Most Misunderstood Concept in Physics

Cross Entropy Loss

uncertainty and amount of info (shannon entropy)

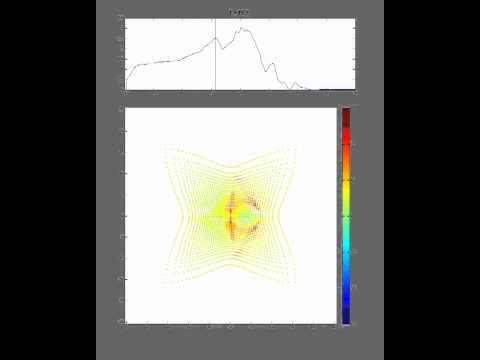

Local transfer entropy (TE) of swarm individuals over time (square configuration)

0:08:03

0:16:35

0:05:24

0:05:13

0:07:05

0:09:02

0:12:33

0:01:01

0:02:18

0:26:24

0:03:50

0:13:00

0:12:07

0:10:09

0:10:38

0:25:47

0:04:31

0:01:14

0:06:27

0:01:17

0:27:15

0:00:23

0:05:17

0:00:27