filmov

tv

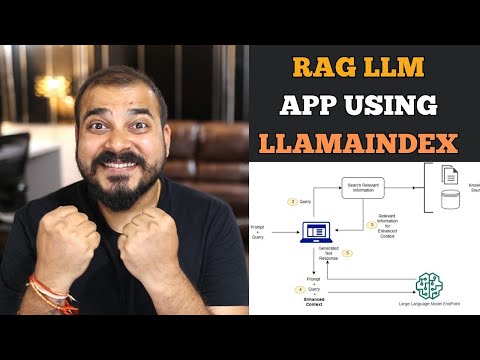

🦙LlamaIndex | Text-To-SQL ( LlamaIndex + DuckDB)

LlamaIndex is a simple, flexible data framework for connecting custom data sources to large language models. It provides the key tools to augment your LLM applications with data.

In this video, I walk you through text-to-SQL using the different query engines of LlamaIndex together with DuckDB.

Happy Learning 😎

#llamaindex #llm #texttosql #datasciencebasics

Discover LlamaIndex: Joint Text to SQL and Semantic Search

🦙LlamaIndex | Text-To-SQL ( LlamaIndex + DuckDB)

Text to SQL with Llama Index and ClickHouse

NL2SQL with LlamaIndex: Querying Databases Using Natural Language | Code

🦙LlamaIndex | Combined Text-TO-SQL + Semantic Search

LLMs for Advanced Question-Answering over Tabular/CSV/SQL Data (Building Advanced RAG, Part 2)

Llama-Index: Chat with SQL Database

Talk to Your Documents, Powered by Llama-Index

Text to SQL with Llama Index

Introduction to Query Pipelines (Building Advanced RAG, Part 1)

End To End Text To SQL LLM App Along With Querying SQL Database Using Google Gemini Pro

Discover LlamaIndex: Ask Complex Queries over Multiple Documents

Announcing LlamaIndex Gen AI Playlist- Llamaindex Vs Langchain Framework

LlamaIndex overview & use cases | LangChain integration

Locally-hosted, offline LLM w/LlamaIndex + OPT (open source, instruction-tuning LLM)

Mastering LlamaIndex : Create, Save & Load Indexes, Customize LLMs, Prompts & Embeddings | C...

What is Llama Index? how does it help in building LLM applications? #languagemodels #chatgpt

End to end RAG LLM App Using Llamaindex and OpenAI- Indexing and Querying Multiple pdf's

Natural Language to SQL | LangChain, SQL Database & OpenAI LLMs

Text to SQL RAG pipeline with LlamaIndex, Llama3 and Groq in 15 mins #groq #llama3 #llamaindex #llm

Build AI sql assistant with LLM. LanghChain tutorial. Streamlit Tutorial. LLM Tutorial . ChatGPT.

LangChain, SQL Agents & OpenAI LLMs: Query Database Using Natural Language | Code

LlamaIndex Sessions: 12 RAG Pain Points and Solutions

LlamaIndex Webinar: From Prompt to Schema Engineering with Pydantic (with @jxnlco)
