Nonlinear Control: Hamilton Jacobi Bellman (HJB) and Dynamic Programming

This video discusses optimal nonlinear control using the Hamilton-Jacobi-Bellman (HJB) equation and how to solve it with dynamic programming.
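For reference, the HJB equation can be written in its standard form. The symbols below are assumptions, not the video's own notation: dynamics f, running cost L, terminal cost Q, final time t_f, and value function V. The last line is the Euler-discretized Bellman recursion (time step Δt) that dynamic programming sweeps backward in time from t_f.

```latex
% Assumed notation: state x, control u, dynamics f, running cost \mathcal{L},
% terminal cost Q, final time t_f, value function V.
\dot{x} = f(x, u), \qquad
V\big(x(t), t\big) = \min_{u(\cdot)} \left[ Q\big(x(t_f), t_f\big)
  + \int_{t}^{t_f} \mathcal{L}\big(x(\tau), u(\tau)\big)\, d\tau \right]

% HJB partial differential equation with its terminal condition
-\frac{\partial V}{\partial t}
  = \min_{u} \left[ \left(\frac{\partial V}{\partial x}\right)^{\!\top} f(x, u) + \mathcal{L}(x, u) \right],
\qquad V(x, t_f) = Q(x, t_f)

% Discrete-time Bellman recursion (time step \Delta t) solved by dynamic programming
V_k(x) = \min_{u} \left[ \mathcal{L}(x, u)\, \Delta t + V_{k+1}\big(x + f(x, u)\, \Delta t\big) \right]
```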

This is a lecture in a series on reinforcement learning, following the new Chapter 11 of the 2nd edition of our book "Data-Driven Science and Engineering: Machine Learning, Dynamical Systems, and Control" by Brunton and Kutz.
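As a minimal sketch of the dynamic-programming route, assuming a scalar state, an explicit Euler step of size dt, and uniform grids over state and control, the Bellman backup can be iterated backward from a zero terminal cost with linear interpolation between grid points. The helper name hjb_value_iteration and all of its parameters are hypothetical; this is plain grid-based value iteration, not necessarily the method used in the lecture or the book.

```python
import numpy as np


def hjb_value_iteration(f, running_cost, xs, us, dt, n_steps):
    """Hypothetical helper: grid-based dynamic programming for a scalar system.

    Iterates the discrete Bellman backup
        V(x) <- min_u [ running_cost(x, u) * dt + V(x + f(x, u) * dt) ]
    backward from a zero terminal cost (an assumption), interpolating V
    linearly between grid points. Returns V on xs and the greedy control.
    """
    X, U = np.meshgrid(xs, us, indexing="ij")  # all (state, control) pairs
    x_next = X + f(X, U) * dt                  # explicit Euler step of xdot = f(x, u)
    stage = running_cost(X, U) * dt            # cost accrued over one step
    V = np.zeros_like(xs, dtype=float)         # terminal cost Q = 0 (assumed)

    def backup(V):
        # np.interp clamps queries outside [xs[0], xs[-1]] to the edge values
        V_next = np.interp(x_next.ravel(), xs, V).reshape(x_next.shape)
        return stage + V_next                  # cost-to-go for every (x, u) pair

    for _ in range(n_steps):
        V = backup(V).min(axis=1)              # minimize over the control grid
    u_star = us[backup(V).argmin(axis=1)]      # greedy control w.r.t. the final V
    return V, u_star


if __name__ == "__main__":
    xs = np.linspace(-2.0, 2.0, 201)
    us = np.linspace(-3.0, 3.0, 301)
    # Example nonlinear system, assumed for illustration: xdot = x - x**3 + u
    V, u_star = hjb_value_iteration(
        f=lambda x, u: x - x**3 + u,
        running_cost=lambda x, u: x**2 + u**2,
        xs=xs, us=us, dt=0.05, n_steps=300,
    )
    for x in (-1.0, -0.5, 0.5, 1.0):
        print(f"x = {x:+.1f}: V = {np.interp(x, xs, V):.3f}, "
              f"u* = {np.interp(x, xs, u_star):+.2f}")
```

A quick sanity check is the linear case xdot = u with cost x^2 + u^2, whose LQR solution is V(x) = x^2 and u* = -x; the grid result should land close to that, up to discretization error. Because the grid grows exponentially with the state dimension (the curse of dimensionality), this brute-force approach is only practical for low-dimensional problems.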

This video was produced at the University of Washington.

EE 564: Lecture 26 (Optimal Control): The Hamilton Jacobi Bellman Approach

HJB and LQR | Control Theory | Lecture 7

Hamilton Jacobi Bellman equation

Introduction to Optimal Control and Hamilton-Jacobi Equation

Optimal Control Tutorial 2 - HJB and LQR

Continuous time model; Hamilton-Jacobi-Bellman PDE

Qi Gong: 'Nonlinear optimal feedback control - a model-based learning approach'

Karl Kunisch: 'Solution Concepts for Optimal Feedback Control of Nonlinear PDEs'

Dynamic Programming Principle (from optimal control) and Hamilton-Jacobi equations

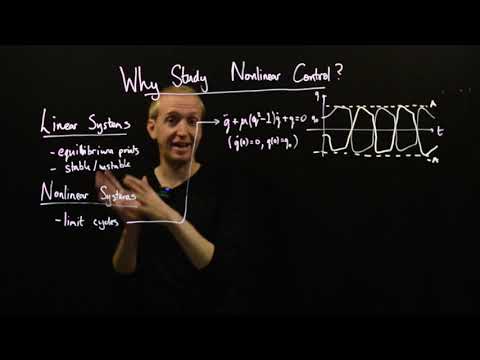

Why study nonlinear control?

HJB equations, dynamic programming principle and stochastic optimal control 1 - Andrzej Święch

Marianne Akian: 'Probabilistic max-plus schemes for solving Hamilton-Jacobi-Bellman equations&a...

Real-Time Hamilton-Jacobi Reachability Analysis of Autonomous System With An FPGA - Run 1

Wei Kang: 'Data Development and Deep Learning for HJB Equations'

Hamilton-Jacobi Theory: Finding the Best Canonical Transformation + Examples | Lecture 9

High-dimensional Hamilton-Jacobi PDEs: Approximation, Representation, and Learning

Numerical Optimal Control Lecture 4 - Nonlinear optimization

Recent trends in optimal control and partial differential equations - 10 May 2023

HJB equations, dynamic programming principle and stochastic optimal control 4 - Andrzej Święch

HJB equations, dynamic programming principle and stochastic optimal control 3 - Andrzej Święch

HJB equations, dynamic programming principle and stochastic optimal control 5 - Andrzej Święch

Ivan Yegorov: 'Attenuation of the curse of dimensionality in continuous-time nonlinear optimal ...

mod10lec60 Constrained Optimization in Optimal Control Theory - Part 06
