Adding Self-Attention to a Convolutional Neural Network! PyTorch Deep Learning Tutorial

TIMESTAMPS:

0:00 Introduction

0:22 Attention Mechanism Overview

1:20 Self-Attention Introduction

3:02 CNN Limitations

4:09 Using Attention in CNNs

6:30 Attention Integration in CNN

9:06 Learnable Scale Parameter

10:14 Attention Implementation

12:52 Performance Comparison

14:10 Attention Map Visualization

14:29 Conclusion

In this video I show how we can add Self-Attention to a CNN in order to improve the performance of our classifier!
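The idea in the timestamps above (attention integrated into a CNN, gated by a learnable scale parameter) might be sketched roughly as follows. This is not the code from the video: the class names `SelfAttention2d` and `AttentionCNN`, the layer widths, the single attention head, and the zero initialisation of the scale are all assumptions made for illustration. The attention itself uses PyTorch's built-in `nn.MultiheadAttention`.

```python
import torch
import torch.nn as nn


class SelfAttention2d(nn.Module):
    """Self-attention over the spatial positions of a CNN feature map (hypothetical name)."""

    def __init__(self, channels: int, num_heads: int = 1):
        super().__init__()
        self.attn = nn.MultiheadAttention(channels, num_heads, batch_first=True)
        # Learnable scale. Starting at zero is an assumption: the block then
        # begins as an identity mapping and learns how much attention to mix in.
        self.scale = nn.Parameter(torch.zeros(1))

    def forward(self, x):
        b, c, h, w = x.shape
        # Treat every spatial position as a token: (B, C, H, W) -> (B, H*W, C)
        tokens = x.flatten(2).transpose(1, 2)
        attn_out, attn_map = self.attn(tokens, tokens, tokens)
        # Back to feature-map layout: (B, H*W, C) -> (B, C, H, W)
        attn_out = attn_out.transpose(1, 2).reshape(b, c, h, w)
        # Residual connection, with the attention branch scaled
        return x + self.scale * attn_out, attn_map


class AttentionCNN(nn.Module):
    """Small classifier with one attention block between conv stages (illustrative sizes)."""

    def __init__(self, in_channels: int = 3, num_classes: int = 10, width: int = 32):
        super().__init__()
        self.conv1 = nn.Sequential(
            nn.Conv2d(in_channels, width, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.MaxPool2d(2),  # halves H and W, which keeps the attention map small
        )
        self.attention = SelfAttention2d(width)
        self.conv2 = nn.Sequential(
            nn.Conv2d(width, width * 2, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.AdaptiveAvgPool2d(1),
        )
        self.fc = nn.Linear(width * 2, num_classes)

    def forward(self, x):
        x = self.conv1(x)
        x, attn_map = self.attention(x)
        x = self.conv2(x).flatten(1)
        return self.fc(x), attn_map


# Usage on a random batch of 32x32 RGB images
model = AttentionCNN()
images = torch.randn(4, 3, 32, 32)
logits, attn_map = model(images)
print(logits.shape)    # (batch, num_classes)
print(attn_map.shape)  # (batch, H*W, H*W) after the pooling step
```

Returning the attention map alongside the logits makes it easy to visualise which positions each location attends to. Because the map has one entry per pair of spatial positions, its size grows quadratically with the feature-map area, which is one reason to place the block after some downsampling.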

The corresponding code is available here! (Section 13)


Attention mechanism: Overview

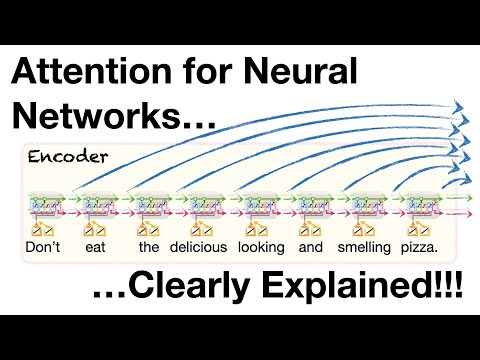

Attention for Neural Networks, Clearly Explained!!!

Cross Attention vs Self Attention

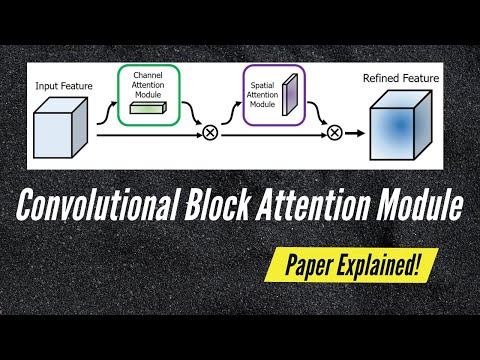

Convolutional Block Attention Module (CBAM) Paper Explained

Self-Attention-based Convolutional Neural Network for Fraudulent Behavior Detection in Sports

SENets: Channel-Wise Attention in Convolutional Neural Networks

Self Attention - A crucial building block of Transformers Architecture

Visual Generative Modeling workshop@CVPR 2025, morning session

On the relationship between Self-Attention and Convolutional Layers

Self-Attention Modeling for Visual Recognition, by Han Hu

Transformer Neural Networks, ChatGPT's foundation, Clearly Explained!!!

Why Sine & Cosine for Transformer Neural Networks

Vision Transformer Quick Guide - Theory and Code in (almost) 15 min

Illustrated Guide to Transformers Neural Network: A step by step explanation

Focal Transformer: Focal Self-attention for Local-Global Interactions in Vision Transformers

Position Encoding Details in Transformer Neural Networks

The Lipschitz Constant of Self-Attention

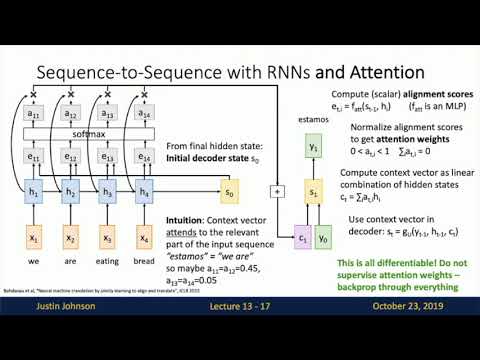

Lecture 13: Attention

Automatic Lip-reading with Hierarchical Pyramidal Convolution and Self-Attention for Image Seque...

ICML 2019 Self-Attention Generative Adversarial Networks (SAGAN)

Lets code the Transformer Encoder

5 concepts in transformers (part 3)

Convolutional graph neural networks and attention mechanisms
