Calculating Tensorflow Object Detection Metrics with Python | Mean Average Precision (mAP) & Recall

Finished building your object detection model?

Want to see how it stacks up against benchmarks?

Need to calculate precision and recall for your reporting?

I got you!

In this video you'll learn how to calculate both training and evaluation metrics for object detection models built with the TensorFlow Object Detection API in Python. You'll see how to evaluate your model and calculate mean average precision (mAP) and average recall (AR).

In this video, you'll learn how to:

1. Viewing Training Results and Loss inside of Tensorboard

2. Evaluating the Performance of Tensorflow Object Detection Models

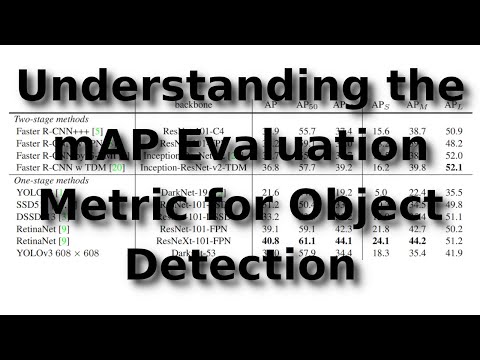

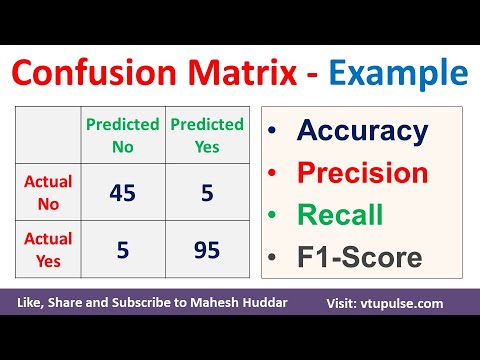

3. Calculating Mean Average Precision (mAP) and Average Recall (AP) for Object Detection models

4. Viewing mAP and AR for Evaluation Data using Tensorboard
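To make the metrics in step 3 concrete, here is a minimal sketch of average precision for a single class on a single image, written in plain Python. It is an illustration only and not the evaluation code the TensorFlow Object Detection API runs: the function names `iou` and `average_precision`, the `(x_min, y_min, x_max, y_max)` box format, and the single IoU threshold of 0.5 are all assumptions made for this example. COCO-style evaluation typically averages over several IoU thresholds, so its numbers will differ.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    x1 = max(box_a[0], box_b[0])
    y1 = max(box_a[1], box_b[1])
    x2 = min(box_a[2], box_b[2])
    y2 = min(box_a[3], box_b[3])
    inter = max(0.0, x2 - x1) * max(0.0, y2 - y1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def average_precision(detections, ground_truths, iou_threshold=0.5):
    """Hypothetical helper: AP for one class.

    detections: list of (score, box) tuples.
    ground_truths: list of boxes.
    """
    if not ground_truths:
        return 0.0

    matched = set()  # indices of ground-truth boxes already claimed
    tp = fp = 0
    precisions, recalls = [], []

    # Walk through detections from most to least confident.
    for _score, box in sorted(detections, key=lambda d: d[0], reverse=True):
        best_iou, best_idx = 0.0, None
        for idx, gt in enumerate(ground_truths):
            if idx in matched:
                continue
            overlap = iou(box, gt)
            if overlap > best_iou:
                best_iou, best_idx = overlap, idx

        if best_idx is not None and best_iou >= iou_threshold:
            matched.add(best_idx)
            tp += 1  # true positive: matched an unclaimed ground-truth box
        else:
            fp += 1  # false positive: no sufficiently overlapping box left

        precisions.append(tp / (tp + fp))
        recalls.append(tp / len(ground_truths))

    # Make precision non-increasing from right to left (all-point interpolation).
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])

    # Sum the area under the interpolated precision-recall curve.
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


ground_truths = [(0, 0, 10, 10), (20, 20, 30, 30)]
detections = [
    (0.9, (0, 0, 10, 10)),    # matches the first ground-truth box
    (0.8, (50, 50, 60, 60)),  # overlaps nothing
    (0.7, (20, 20, 30, 30)),  # matches the second ground-truth box
]
ap = average_precision(detections, ground_truths)
```

Mean average precision is then the mean of this value across classes (and, in COCO-style reporting, across IoU thresholds as well). Recall here is the fraction of ground-truth boxes that were matched.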

Chapters:

0:00 - Start

3:19 - Calculating Training Loss and Metrics

6:14 - Coding the Evaluation Script

9:08 - Calculating Mean Average Precision (mAP) and Average Recall (AR)

12:21 - Viewing mAP and AR in TensorBoard

Oh, and don't forget to connect with me!

Happy coding!

Nick

P.S. Let me know how you go and drop a comment if you need a hand!
