Computing Derivatives with FFT [Python]

This video describes how to compute derivatives with the Fast Fourier Transform (FFT) in Python.
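The core idea can be sketched in a few lines of NumPy. This is a minimal sketch, not the code from the video. It assumes a real-valued signal sampled at n evenly spaced points on a periodic domain of length L, with the right endpoint excluded. The helper name `spectral_derivative` is hypothetical. The Fourier transform turns d/dx into multiplication by iκ, where κ = 2πk/L is the angular wavenumber. So the derivative comes from three steps: transform with `np.fft.fft`, multiply by `1j * kappa`, and transform back with `np.fft.ifft`. `np.fft.fftfreq` supplies the wavenumbers in the order the FFT output uses.

```python
import numpy as np


def spectral_derivative(f, L):
    """Approximate df/dx for samples f of a periodic function on a domain of length L.

    Hypothetical helper: assumes f is real and sampled at n evenly spaced points
    x_j = x_0 + j * L / n, j = 0..n-1 (the endpoint x_0 + L is not included).
    """
    n = f.size
    # Angular wavenumbers 2*pi*k/L, in FFT order: 0, 1, ..., then negative k.
    kappa = 2 * np.pi * np.fft.fftfreq(n, d=L / n)
    fhat = np.fft.fft(f)
    # Differentiation in x is multiplication by i*kappa in Fourier space.
    dfhat = 1j * kappa * fhat
    # Assumed convention: for even n, zero the Nyquist mode, whose derivative
    # is ambiguous for real data.
    if n % 2 == 0:
        dfhat[n // 2] = 0
    # Transform back; the imaginary part is round-off for real input.
    return np.real(np.fft.ifft(dfhat))


if __name__ == "__main__":
    L, n = 2 * np.pi, 64
    x = np.arange(n) * (L / n)  # periodic grid without the endpoint x = L
    f = np.sin(3 * x)
    df = spectral_derivative(f, L)
    # Maximum error against the exact derivative 3*cos(3x); should be near round-off.
    print(np.max(np.abs(df - 3 * np.cos(3 * x))))
```

The method is spectrally accurate only when the signal is smooth and periodic on the domain. A jump or kink where the right edge wraps around to the left produces ringing (the Gibbs phenomenon) in the computed derivative.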

These lectures follow Chapter 2 from:

"Data-Driven Science and Engineering: Machine Learning, Dynamical Systems, and Control" by Brunton and Kutz

This video was produced at the University of Washington

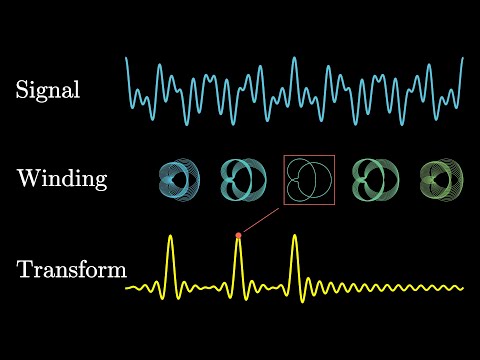
