Stacking Ensemble Learning|Stacking and Blending in ensemble machine learning

#StackingEnsemble #StackingandBlending #unfolddatascience

Welcome! I'm Aman, a Data Scientist & AI Mentor.

🚀 **Level Up Your Skills:**

* **Udemy Courses:** 🔥 **Start Learning Now!** 🔥

* X (Twitter): @unfolds

* Instagram: @unfold_data_science

#DataScience #AI #MachineLearning

About this video:

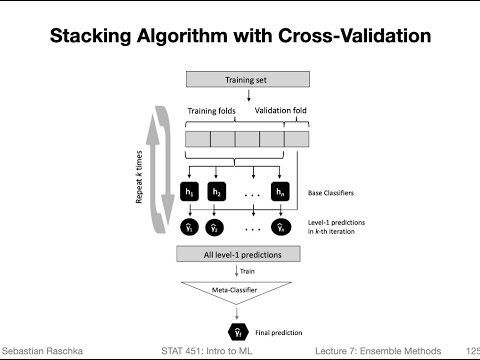

In this video I explain the concepts of stacking and blending in ensemble learning and walk through how each method works in detail. The video covers the following topics:

1. What is stacking ensemble learning

2. Stacking and blending in machine learning

3. Stacking ensemble learning in Python

4. What is a meta classifier in a stacking ensemble

5. Stacking meta classifier explanation
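
To make the topics above concrete, here is a minimal sketch of both ideas in plain Python. It is not the code from the video: the `Stump` base learner, the `LogisticMeta` meta classifier, the toy data, and every function name are assumptions made up for illustration. It follows the usual definitions: in stacking, the meta classifier is trained on out-of-fold predictions from the base models, while in blending it is trained on predictions for a single hold-out slice.

```python
import math
import random

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

class Stump:
    """Hypothetical weak base learner: scores one feature against the
    midpoint between the two class means."""
    def __init__(self, feature):
        self.feature = feature

    def fit(self, X, y):
        pos = [x[self.feature] for x, t in zip(X, y) if t == 1]
        neg = [x[self.feature] for x, t in zip(X, y) if t == 0]
        mu_pos, mu_neg = sum(pos) / len(pos), sum(neg) / len(neg)
        self.mid = (mu_pos + mu_neg) / 2
        self.sign = 1.0 if mu_pos >= mu_neg else -1.0
        return self

    def predict_proba(self, X):
        return [sigmoid(self.sign * (x[self.feature] - self.mid)) for x in X]

class LogisticMeta:
    """Hypothetical meta classifier: logistic regression fitted by batch
    gradient descent on the base learners' outputs."""
    def fit(self, Z, y, lr=1.0, epochs=1000):
        self.w, self.b = [0.0] * len(Z[0]), 0.0
        for _ in range(epochs):
            errs = [self._p(z) - t for z, t in zip(Z, y)]
            for j in range(len(self.w)):
                self.w[j] -= lr * sum(e * z[j] for e, z in zip(errs, Z)) / len(Z)
            self.b -= lr * sum(errs) / len(Z)
        return self

    def _p(self, z):
        return sigmoid(self.b + sum(w * v for w, v in zip(self.w, z)))

    def predict(self, Z):
        return [1 if self._p(z) >= 0.5 else 0 for z in Z]

def meta_features(bases, X):
    """One row per sample, one column per base learner."""
    return [list(row) for row in zip(*(b.predict_proba(X) for b in bases))]

def fit_stacking(X, y, makers, k=5):
    """Stacking: every training row gets base predictions from models
    that never saw that row (k-fold, out-of-fold predictions)."""
    n = len(X)
    Z = [[0.0] * len(makers) for _ in range(n)]
    for f in range(k):
        held = list(range(f, n, k))
        held_set = set(held)
        train = [i for i in range(n) if i not in held_set]
        fold_bases = [m().fit([X[i] for i in train], [y[i] for i in train])
                      for m in makers]
        for i, row in zip(held, meta_features(fold_bases, [X[i] for i in held])):
            Z[i] = row
    meta = LogisticMeta().fit(Z, y)
    bases = [m().fit(X, y) for m in makers]  # refit on all data for inference
    return bases, meta

def fit_blending(X, y, makers, holdout=0.3):
    """Blending: base models see the first part of the data, the meta
    classifier sees base predictions on the held-out remainder only."""
    cut = int(len(X) * (1 - holdout))
    bases = [m().fit(X[:cut], y[:cut]) for m in makers]
    meta = LogisticMeta().fit(meta_features(bases, X[cut:]), y[cut:])
    return bases, meta

def predict(model, X):
    bases, meta = model
    return meta.predict(meta_features(bases, X))

def make_data(n, seed):
    """Toy data (an assumption): the label depends on both features."""
    rng = random.Random(seed)
    X = [(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(n)]
    y = [1 if a + b > 0 else 0 for a, b in X]
    return X, y

def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

makers = [lambda: Stump(0), lambda: Stump(1)]
X_train, y_train = make_data(300, seed=0)
X_test, y_test = make_data(300, seed=1)

stacked = fit_stacking(X_train, y_train, makers)
blended = fit_blending(X_train, y_train, makers)
acc_stack = accuracy(y_test, predict(stacked, X_test))
acc_blend = accuracy(y_test, predict(blended, X_test))
print(f"stacking accuracy: {acc_stack:.2f}")
print(f"blending accuracy: {acc_blend:.2f}")
```

Each stump looks at only one feature, so on its own it is a weak classifier for this data; the meta classifier learns how to weigh the two. In practice you would likely reach for a library instead of hand-rolled models: scikit-learn, for example, ships `StackingClassifier` in `sklearn.ensemble`, which handles the cross-validated variant.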
