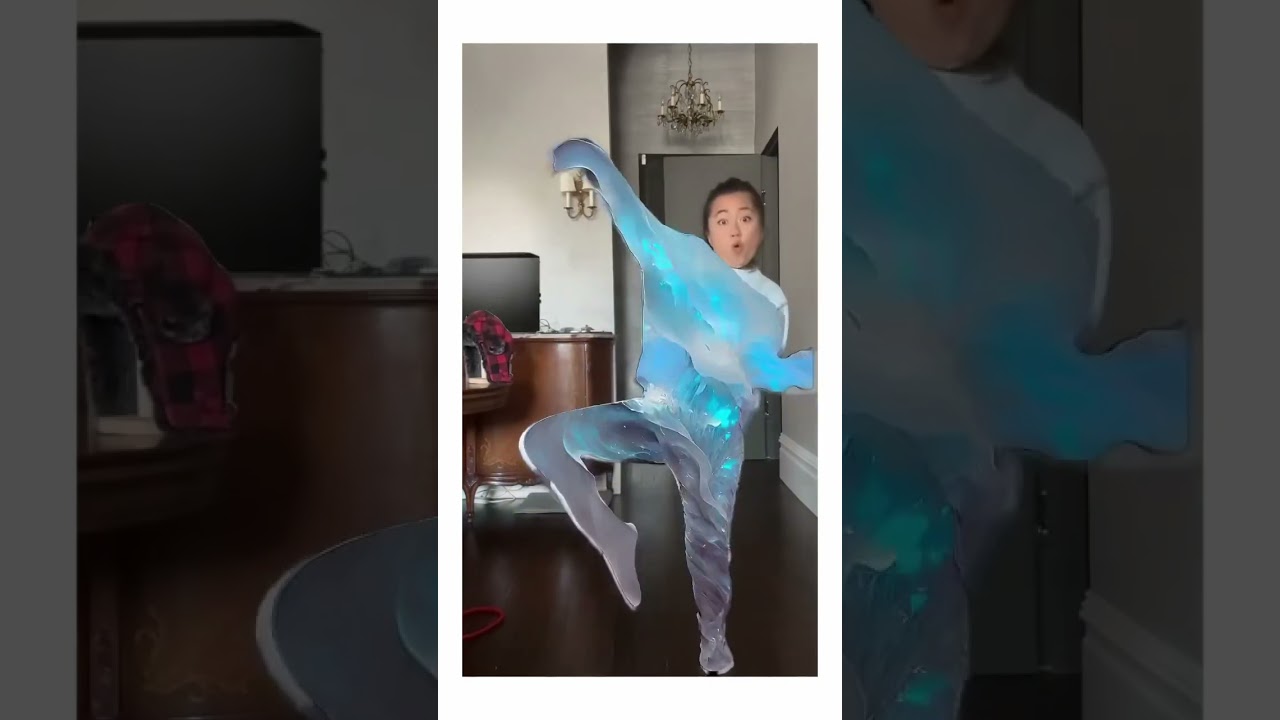

Using Stable Diffusion to upgrade my fashion choices (edited on HP Z8 Fury)

New video for HP! Edited on the Z8 Fury @ZbyHP

Here's our process:

Collab with @noahrobertmiller

1. First we did a bunch of tests. I tried out different outfits, and we tested how we could use Stable Diffusion to reimagine those outfits. We figured out which kinds of outfits work best (it's the ones with lots of textures and patterns)

2. Then we recorded a video of me dancing in different styles. I'm actually changing outfits in real life too, and we edited a match cut for each one. That's why the outfit at the end is different from the one at the beginning. Each outfit underneath provides the "structure" for us to train on, so I tried to match up the general shapes as best I could

3. Then we rotoscoped me out of the video, to isolate me from the background, providing a cleaner plate for training

4. We used Stable Diffusion to re-imagine the outfits, prompting with different outfits that would match with the dance styles

5. Btw SD tends to do some wonky stuff with the faces (it doesn't handle human faces that well) so as a finishing touch we decided to rotoscope out the faces, and use my real human face instead
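If you're curious what steps 3 and 5 boil down to, here's a minimal sketch of mask-based compositing in plain Python. This is not the tool we used (rotoscoping happens in an editing app); the `composite` function, the tiny frames, and the mask values are all made up for illustration. The idea: wherever the mask is 1.0 you keep the real footage (like my face), and wherever it's 0.0 you keep the Stable Diffusion output.

```python
# Hypothetical helper for illustration only, not the actual tool from the video.
# Frames are rows of (r, g, b) tuples; mask is rows of floats in [0, 1],
# where 1.0 means "keep the real footage" (e.g. the rotoscoped face)
# and 0.0 means "keep the stylized Stable Diffusion frame".

def composite(real, stylized, mask):
    """Blend two same-sized frames pixel by pixel using a matte."""
    out = []
    for real_row, sd_row, mask_row in zip(real, stylized, mask):
        row = []
        for real_px, sd_px, m in zip(real_row, sd_row, mask_row):
            # Standard alpha blend per channel: m * real + (1 - m) * stylized
            row.append(tuple(round(m * r + (1.0 - m) * s)
                             for r, s in zip(real_px, sd_px)))
        out.append(row)
    return out


# Made-up 1x2 frame: the left pixel is "face", the right pixel is "outfit".
real = [[(200, 150, 120), (200, 150, 120)]]
stylized = [[(10, 20, 30), (10, 20, 30)]]
mask = [[1.0, 0.0]]

result = composite(real, stylized, mask)
print(result)
```

In-between mask values (like 0.5 along a feathered edge) mix the two frames, which is what keeps the seam around the face from looking cut out.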

A bit about the machine we used -

The Z8 Fury @zbyhp is a monster of a machine: 56-core CPU, 4 GPUs, 128 GB of memory. A process that took hours on my old machine took minutes. One of the reasons I like using AI in my editing workflow is the speed boost you get compared to traditional and 3D animation, and the Z8 pushes that speed even further. I'm able to iterate faster and try ideas almost as quickly as I can think of them. It's a super exciting time, now that the tools are no longer the bottleneck to creative work. #ZbyHP #MadeOnZ #Intel

Client: HP

Editing @noahrobertmiller & @karenxcheng

Choreography @austingumban

Music: Ride or Die by Yarin Primak
