Carlo Lucibello - Entropic algorithms and wide flat minima in neural networks

Entropic algorithms and wide flat minima in neural networks

Carlo Lucibello

13.00-14.00, Wednesday 3 November 2021, Zoom

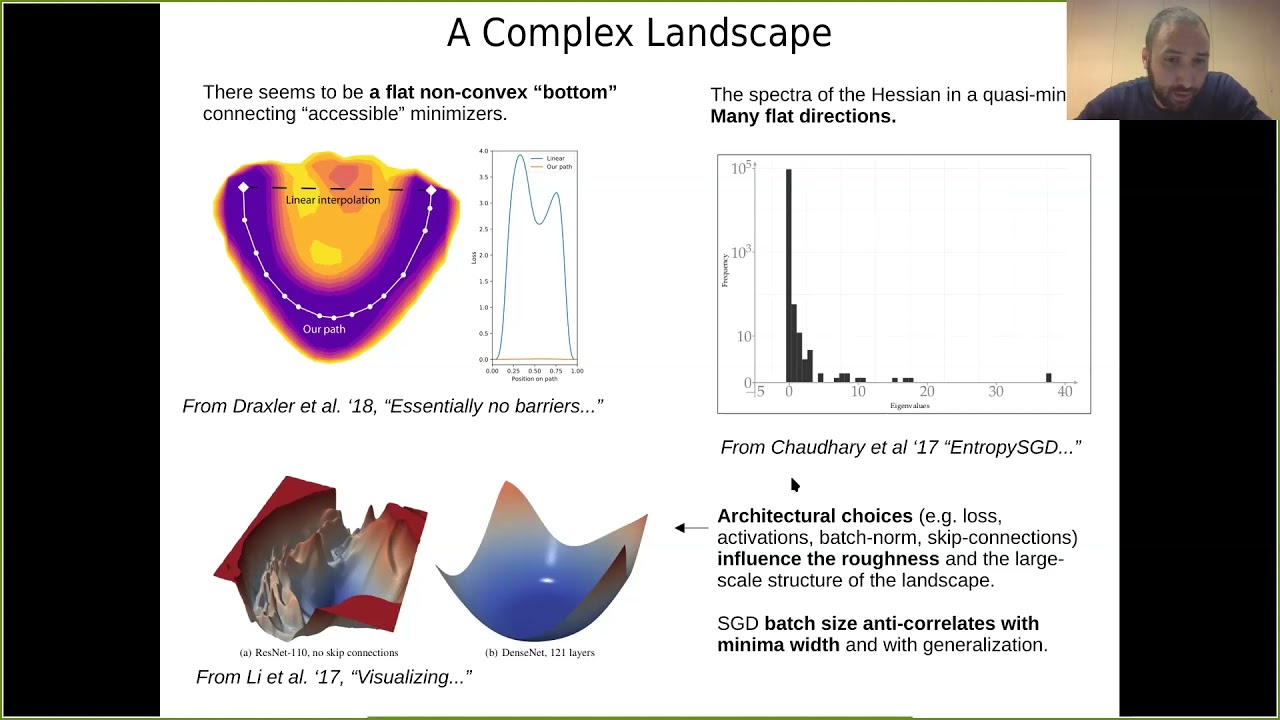

Abstract: The properties of flat minima in the training loss landscape of neural networks have been debated for some time. Increasing evidence suggests that they generalize better than sharp ones. First, we'll discuss simple neural network models. Using analytical tools from the spin-glass theory of disordered systems, we probe the geometry of the landscape and highlight the presence of flat minima that generalize well and are attractive for learning dynamics.

Next, we extend the analysis to the deep learning scenario through extensive numerical experiments. Using two algorithms, Entropy-SGD and Replicated-SGD, which explicitly include in the optimization objective a non-local flatness measure known as local entropy, we consistently improve the generalization error of common architectures (e.g. ResNet, EfficientNet). Finally, we'll discuss the extension of message-passing techniques (Belief Propagation) to deep networks as an alternative paradigm to SGD training.
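For readers new to these objectives: in the literature the local entropy of a configuration $w$ under a training loss $L$ is commonly written (our notation, not necessarily the speaker's) as

$$\Phi_\gamma(w) = \log \int dw' \, \exp\!\Big(-\beta L(w') - \tfrac{\gamma}{2}\lVert w - w'\rVert^2\Big),$$

which is large when many low-loss configurations lie within distance $\sim 1/\sqrt{\gamma}$ of $w$, i.e. in wide flat regions. Replicated approaches approximate this by training $y$ coupled copies $w_1,\dots,w_y$ on $\sum_a L(w_a) + \tfrac{\gamma}{2}\sum_a \lVert w_a - \bar w\rVert^2$. The NumPy sketch below illustrates that coupling on a toy problem. It is not the speaker's implementation. The function name `replicated_gd`, its parameters, the choice of the replica mean as the center $\bar w$, and the optional Gaussian `noise` standing in for minibatch noise are all illustrative assumptions.

```python
import numpy as np


def replicated_gd(grad_loss, w_init, n_replicas=3, gamma=1.0, lr=0.1,
                  steps=500, noise=0.0, seed=0):
    """Gradient descent on sum_a L(w_a) + gamma/2 * sum_a ||w_a - center||^2.

    Hypothetical helper for illustration. The center is the replicas' mean.
    grad_loss(w) must return dL/dw with the same shape as w. `noise` adds
    Gaussian perturbations to each gradient as a crude stand-in for SGD noise.
    """
    rng = np.random.default_rng(seed)
    w_init = np.asarray(w_init, dtype=float)
    # Start each replica from a slightly perturbed copy of the initial point.
    replicas = w_init + 0.1 * rng.standard_normal((n_replicas,) + w_init.shape)
    for _ in range(steps):
        center = replicas.mean(axis=0)
        for a in range(n_replicas):
            # With center = mean, the coupling gradient for replica a is
            # gamma * (w_a - center): the mean's own dependence on w_a cancels
            # because the deviations from the mean sum to zero.
            g = grad_loss(replicas[a]) + gamma * (replicas[a] - center)
            if noise > 0:
                g = g + noise * rng.standard_normal(w_init.shape)
            replicas[a] -= lr * g
    return replicas.mean(axis=0), replicas


if __name__ == "__main__":
    # Toy quadratic loss L(w) = 0.5 * ||w - c||^2 with gradient w - c.
    c = np.array([1.0, -2.0])
    center, reps = replicated_gd(lambda w: w - c, np.zeros(2), n_replicas=4)
    print("center:", center)
    print("max replica spread:", np.max(np.abs(reps - center)))
```

On a single quadratic well the coupling changes nothing essential: the center and all replicas end up at the minimum. The coupling term is meant to matter on rugged landscapes, where requiring several replicas to sit close together while each keeps a low loss biases the search toward regions wide enough to hold all of them.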
