Batch vs. Real-Time Inference Explained


Batch and real-time inference are two fundamental approaches to running trained models on data in machine learning and artificial intelligence applications. The choice between them has a significant impact on the latency, throughput, cost, and scalability of AI systems.

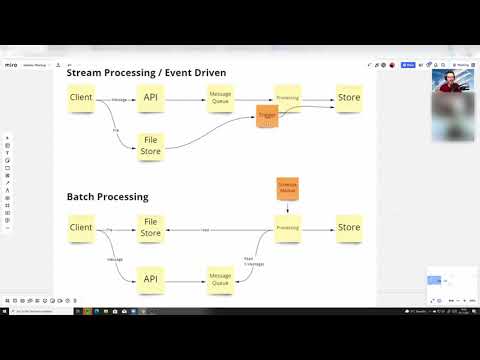

Batch processing collects data and runs the model over it in groups, usually on a schedule, whereas real-time processing handles each input as it arrives and returns a result with minimal delay. Each approach has its own strengths and weaknesses. Batch processing is often used where data is abundant and results are not needed immediately, so work can be done in large chunks, such as offline image and video processing. Real-time processing, on the other hand, is used where a result is needed within a tight time budget, such as autonomous vehicles and predictive analytics that must respond to live events.
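To make the contrast concrete, here is a minimal sketch in Python. It is an illustration only: `predict` is a hypothetical stand-in for a real model (it just sums each row's features), and the names `batch_inference` and `realtime_inference` are assumptions made for this example, not part of any serving library.

```python
from typing import Iterable, Iterator, List


def predict(features: List[List[float]]) -> List[float]:
    """Hypothetical stand-in for a model: scores each row by summing its features."""
    return [sum(row) for row in features]


def batch_inference(rows: List[List[float]], batch_size: int) -> List[float]:
    """Score an already-collected dataset in fixed-size chunks."""
    scores: List[float] = []
    for start in range(0, len(rows), batch_size):
        # One model call per chunk amortizes per-call overhead across many rows.
        scores.extend(predict(rows[start:start + batch_size]))
    return scores


def realtime_inference(stream: Iterable[List[float]]) -> Iterator[float]:
    """Score each row as soon as it arrives, yielding one result per input."""
    for row in stream:
        # One model call per input keeps the wait for each result short.
        yield predict([row])[0]


rows = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
batch_scores = batch_inference(rows, batch_size=2)
stream_scores = list(realtime_inference(iter(rows)))
```

Both paths produce the same scores here; what differs is when each result becomes available. The batch path returns nothing until every chunk has been processed, while the streaming path hands back each score as soon as its input has been seen.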

Understanding the difference between batch and real-time inference is crucial for choosing the right approach for a specific application.

For those interested in learning more about machine learning and inference methods, I recommend exploring the topics of model serving, deployment, and experimentation. These areas are crucial in understanding the overall workflow of putting AI models into production.

Additional Resources:

Model serving platforms are not covered in this explanation, but the documentation for popular options such as TensorFlow Serving, Amazon SageMaker, and Azure Machine Learning offers a deeper dive into model deployment and experimentation.

#BatchProcessing #RealTimeInference #MachineLearning #ArtificialIntelligence #DataProcessing #ModelServing #ModelDeployment #AISystems #STEM

Find this and all other slideshows for free on our website:

