The Kernel Trick - THE MATH YOU SHOULD KNOW!

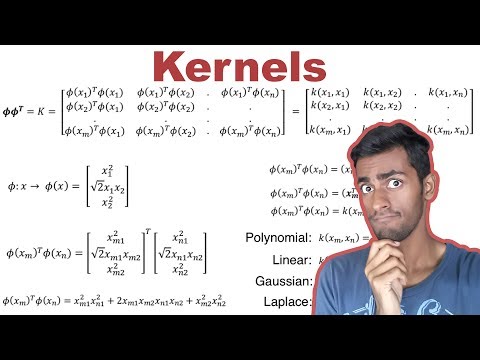

Some parametric methods, like polynomial regression and Support Vector Machines, stand out as being very versatile. This versatility comes from a concept called "kernelization".
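To make "kernelization" concrete: a kernel function returns the inner product of two points after a (possibly high-dimensional) feature mapping, without ever building that mapping. The minimal sketch below assumes the degree-2 polynomial kernel k(x, z) = (x · z + 1)^2 on 2-D inputs, a choice made here for illustration rather than taken from the video. It checks that the kernel agrees with the inner product of an explicit six-feature map; `poly_kernel`, `phi` and `explicit_inner` are hypothetical helper names.

```python
import math

def poly_kernel(x, z, c=1.0):
    """Degree-2 polynomial kernel (x . z + c)^2, computed directly in the input space."""
    dot = sum(xi * zi for xi, zi in zip(x, z))
    return (dot + c) ** 2

def phi(x):
    """Explicit degree-2 feature map for 2-D inputs, matching the kernel above with c = 1."""
    x1, x2 = x
    r2 = math.sqrt(2.0)
    return [1.0, r2 * x1, r2 * x2, x1 ** 2, x2 ** 2, r2 * x1 * x2]

def explicit_inner(x, z):
    """Inner product after mapping both points into the 6-D feature space."""
    return sum(a * b for a, b in zip(phi(x), phi(z)))

x, z = (1.0, 2.0), (3.0, 4.0)
print(poly_kernel(x, z))     # 144.0
print(explicit_inner(x, z))  # about 144.0 (tiny floating-point error possible)
```

Expanding (x1 z1 + x2 z2 + 1)^2 term by term gives exactly the six products that `phi` spells out, which is why the two numbers agree. The kernel needs one 2-D dot product, while the explicit route builds 6-D vectors; for higher degrees or the Gaussian kernel the explicit feature space grows much larger (infinite-dimensional in the Gaussian case), which is where the trick pays off.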

In this video, we are going to kernelize linear regression and show how kernels can be incorporated into other algorithms to solve complex problems.
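One common way to kernelize linear regression is to write the regularized least-squares solution in dual form: the coefficients become alpha = (K + lambda I)^-1 y, where K[i][j] = k(x_i, x_j), and a prediction is f(x) = sum_i alpha_i k(x_i, x). The sketch below is a minimal pure-Python version of this kernel ridge regression. Several choices are assumptions for illustration, not necessarily the video's formulation: the ridge penalty `lam` (added so the linear system is well conditioned), the Gaussian (RBF) kernel with `gamma=1.0`, the toy sine data, and the hypothetical helper names `rbf_kernel`, `solve`, `fit_kernel_ridge` and `predict`.

```python
import math

def rbf_kernel(a, b, gamma=1.0):
    """Gaussian (RBF) kernel exp(-gamma * ||a - b||^2)."""
    sq = sum((ai - bi) ** 2 for ai, bi in zip(a, b))
    return math.exp(-gamma * sq)

def solve(A, y):
    """Solve A x = y by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [yi] for row, yi in zip(A, y)]  # augmented matrix [A | y]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(M[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (M[r][n] - s) / M[r][r]
    return x

def fit_kernel_ridge(X, y, kernel, lam=1e-3):
    """Dual coefficients alpha = (K + lam * I)^-1 y, with K[i][j] = kernel(X[i], X[j])."""
    n = len(X)
    K = [[kernel(X[i], X[j]) + (lam if i == j else 0.0) for j in range(n)]
         for i in range(n)]
    return solve(K, y)

def predict(X_train, alpha, kernel, x):
    """f(x) = sum_i alpha_i * k(x_i, x): only kernel evaluations, no explicit features."""
    return sum(a * kernel(xi, x) for a, xi in zip(alpha, X_train))

# Toy 1-D data from y = sin(x), which a straight line cannot fit.
X = [[i * 0.5] for i in range(13)]  # inputs 0.0, 0.5, ..., 6.0
y = [math.sin(p[0]) for p in X]
alpha = fit_kernel_ridge(X, y, rbf_kernel, lam=1e-3)
print(round(predict(X, alpha, rbf_kernel, [1.25]), 2))  # expected to be close to sin(1.25), about 0.949
```

The model is still linear, but in the kernel's feature space, so it can follow the sine curve that ordinary linear regression on x alone cannot. Because fitting and prediction touch the data only through `kernel(...)`, swapping `rbf_kernel` for a polynomial kernel changes the model without changing the algorithm, and the same substitution is what kernelizes other inner-product-based methods such as SVMs.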

If you like this video, hit that like button. If you’re new here, hit that SUBSCRIBE button and ring that bell for notifications!
