Neural Networks From Scratch - Lec 9 - ReLU Activation Function

Building neural networks from scratch in Python.

This is the ninth video of the course "Neural Networks From Scratch". It covers the ReLU activation function and its importance. We discuss the advantages and drawbacks of ReLU, share some tips for using it in neural networks, and walk through a Python implementation.

Course Playlist:

Sparsity in Machine Learning:

Please like and subscribe to the channel for more videos. This helps me gauge interest and create more content. Thank you!

Chapters:

0:00 Introduction

0:38 Vanishing Gradient Problem

1:11 ReLU Activation Function

2:07 ReLU function behaviour

2:55 Sine wave approximation using ReLU

3:20 Derivative of ReLU

3:46 Dying ReLU Problem

4:29 Advantages

6:33 Drawbacks

7:37 Tips for using ReLU

9:05 Python Implementation
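The video's own code is not reproduced in this description. As a rough sketch of what the "Derivative of ReLU" and "Python Implementation" chapters cover, the function and its gradient could be written with NumPy as below. The names `relu` and `relu_derivative` are illustrative assumptions, not taken from the video, and setting the gradient at exactly zero to 0 is one common convention.

```python
import numpy as np


def relu(x):
    """Element-wise ReLU: returns x where x > 0, and 0 otherwise."""
    return np.maximum(0, x)


def relu_derivative(x):
    """Gradient of ReLU: 1 where x > 0, else 0.

    ReLU is not differentiable at x == 0; using 0 there is an assumed convention.
    """
    return (x > 0).astype(float)


# Hypothetical sample inputs, chosen only for illustration.
x = np.array([-2.0, -0.5, 0.0, 1.5, 3.0])
print(relu(x))
print(relu_derivative(x))
```

Negative inputs map to zero in both the output and the gradient, which is the mechanism behind the dying ReLU problem listed in the chapters: a unit whose input stays negative receives no gradient and stops updating.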

#reluactivationfunction, #reluactivationfunctioninneuralnetwork, #sigmoidactivationfunction, #activationfunctioninneuralnetwork, #vanishinggradient

I Built a Neural Network from Scratch

Building a neural network FROM SCRATCH (no Tensorflow/Pytorch, just numpy & math)

But what is a neural network? | Chapter 1, Deep learning

Neural Network from Scratch | Mathematics & Python Code

Neural Networks from Scratch - P.1 Intro and Neuron Code

How to Create a Neural Network (and Train it to Identify Doodles)

Neural Networks Explained from Scratch using Python

Understanding AI from Scratch – Neural Networks Course

Convolution Neural Network Step By Step Implementation | Tutorial | Deep Learning With Image data

Create a Simple Neural Network in Python from Scratch

Neural Networks from Scratch - P.5 Hidden Layer Activation Functions

Neural Network From Scratch In Python

Neural Networks from Scratch - P.4 Batches, Layers, and Objects

Neural Network from scratch using Only NUMPY

Convolutional Neural Network from Scratch | Mathematics & Python Code

How to train simple AIs

Creating a Neural Network from scratch in under 10 minutes

Neural Networks from Scratch - P.6 Softmax Activation

The Complete Mathematics of Neural Networks and Deep Learning

Neural Network In 5 Minutes | What Is A Neural Network? | How Neural Networks Work | Simplilearn

Running a NEURAL NETWORK in SCRATCH (Block-Based Coding)

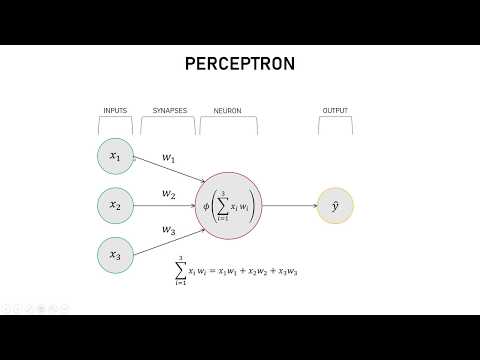

Lecture 1 - Neural Network from Scratch: Coding Neurons and Layers

Neural Networks from Scratch announcement

Neural Networks From Scratch in Rust
