Random Forest (Ensembling Technique)

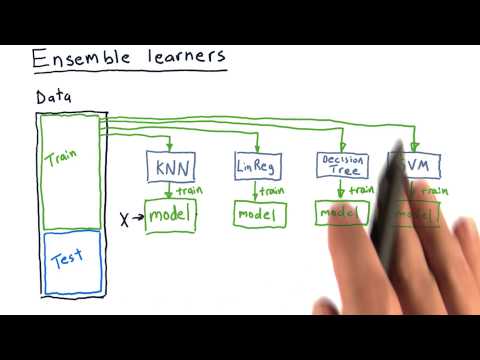

Mastering Ensemble Learning: Bagging & Boosting Explained!

Welcome to today's workshop on Ensemble Learning! 🚀 In this session, we will explore how combining the predictions of multiple models can give better accuracy than any one of those models alone.
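A minimal sketch of the core idea, assuming three already-trained classifiers whose predictions are given as hard-coded lists. The labels and predictions below are made up for illustration and are not taken from the video. Each model is wrong on a different sample, so a simple majority vote recovers the correct label everywhere. It only works out this neatly because the models' errors do not overlap; when models tend to make the same mistakes, voting helps much less.

```python
from collections import Counter


def majority_vote(predictions):
    """Combine several models' label lists into one label per sample by majority vote."""
    # zip(*predictions) groups the i-th prediction of every model together
    return [Counter(votes).most_common(1)[0][0] for votes in zip(*predictions)]


def accuracy(pred, truth):
    """Fraction of samples where the predicted label matches the true label."""
    return sum(p == t for p, t in zip(pred, truth)) / len(truth)


# Hypothetical ground truth and predictions from three separately trained models
truth = [1, 0, 1, 1, 0, 1]
model_a = [1, 0, 1, 0, 0, 1]  # wrong on sample 3
model_b = [1, 1, 1, 1, 0, 1]  # wrong on sample 1
model_c = [0, 0, 1, 1, 0, 1]  # wrong on sample 0

ensemble = majority_vote([model_a, model_b, model_c])

if __name__ == "__main__":
    for name, pred in [("A", model_a), ("B", model_b), ("C", model_c), ("ensemble", ensemble)]:
        print(f"{name}: accuracy = {accuracy(pred, truth):.2f}")
```

Each individual model scores 5/6 (about 0.83) on this toy data, while the majority vote scores 1.00.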

📌 Timestamps:

⏳ 0:00 - Introduction to Ensemble Learning

⏳ 0:20 - What is Ensemble Learning?

⏳ 0:42 - Two Main Techniques: Bagging & Boosting

⏳ 0:52 - What is Bagging (Bootstrap Aggregation)?

⏳ 1:10 - How Bagging Works: Training Models in Parallel

⏳ 1:36 - Why Bagging Helps Reduce Variance & Overfitting
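To make the bagging steps concrete, here is a small pure-Python sketch, an illustration under simplifying assumptions rather than the code used in the video. It assumes one numeric feature, binary 0/1 labels and decision stumps (one-split trees) as the base model; the helper names `fit_stump`, `stump_predict`, `bagging_fit` and `bagging_predict` are hypothetical. Each model is fit on its own bootstrap sample drawn with replacement, no model depends on another (so they could be trained in parallel), and the final prediction is a majority vote.

```python
import random
from collections import Counter


def fit_stump(xs, ys):
    """Fit a 1-D decision stump: the threshold t and direction sign with the fewest training errors.

    The stump predicts 1 when sign * (x - t) >= 0, otherwise 0.
    """
    best = None
    for t in sorted(set(xs)):
        for sign in (1, -1):
            errors = sum((1 if sign * (x - t) >= 0 else 0) != y for x, y in zip(xs, ys))
            if best is None or errors < best[0]:
                best = (errors, t, sign)
    _, t, sign = best
    return t, sign


def stump_predict(stump, x):
    t, sign = stump
    return 1 if sign * (x - t) >= 0 else 0


def bagging_fit(xs, ys, n_models=25, seed=0):
    """Train n_models stumps, each on its own bootstrap sample (drawn with replacement)."""
    rng = random.Random(seed)
    n = len(xs)
    models = []
    for _ in range(n_models):  # iterations are independent, so this loop could run in parallel
        idx = [rng.randrange(n) for _ in range(n)]  # bootstrap: n draws with replacement
        models.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return models


def bagging_predict(models, x):
    """Aggregate step: majority vote over all stumps (an odd n_models avoids ties for 0/1 labels)."""
    return Counter(stump_predict(m, x) for m in models).most_common(1)[0][0]


if __name__ == "__main__":
    xs = list(range(1, 11))
    ys = [0, 0, 0, 0, 0, 1, 1, 1, 1, 1]
    models = bagging_fit(xs, ys)
    print("distinct thresholds learned:", sorted({t for t, _ in models}))
    print("predictions:", [bagging_predict(models, x) for x in xs])
```

Because each bootstrap sample contains a slightly different set of points, the fitted thresholds vary from model to model, and the vote averages that variation out. A random forest builds on the same recipe with deeper decision trees and, in its standard formulation, also considers only a random subset of features at each split; with a single feature, this sketch cannot show that second ingredient.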

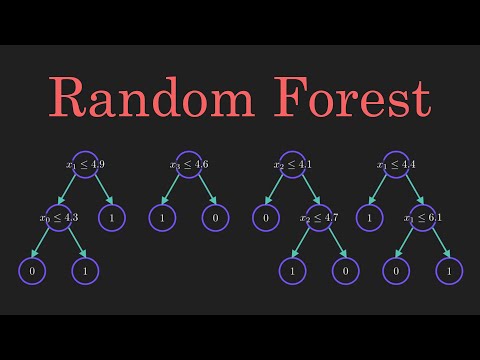

⏳ 2:00 - Random Forest: How It Uses Bagging

⏳ 3:00 - How Random Forest Works (Step-by-Step Explanation)

⏳ 5:00 - Example: Decision Trees & Majority Voting in Random Forest

⏳ 7:00 - Boosting Explained: Training Models Sequentially

⏳ 8:00 - How Boosting Corrects Previous Model Mistakes

⏳ 9:00 - Examples of Boosting: XGBoost, AdaBoost

⏳ 10:00 - Comparing Bagging vs. Boosting

⏳ 11:00 - Summary & Final Thoughts
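The boosting chapters (training sequentially, correcting earlier mistakes, AdaBoost) can be sketched in the same style. This is a minimal AdaBoost-style loop, assuming one numeric feature, labels of +1/-1 and decision stumps as weak learners; the function names are hypothetical. It is not XGBoost, which is a gradient-boosting library with its own tree-building procedure. Each round fits a stump to the weighted data, gives it a vote weight `alpha = 0.5 * ln((1 - err) / err)` from its weighted error, then increases the weights of the samples it misclassified so the next stump concentrates on them.

```python
import math


def stump_output(t, sign, x):
    """A decision stump returning +1/-1: `sign` when x >= t, otherwise -sign."""
    return sign if x >= t else -sign


def fit_weighted_stump(xs, ys, w):
    """Return (weighted_error, t, sign) for the stump with the lowest weighted error."""
    best = None
    for t in sorted(set(xs)):
        for sign in (1, -1):
            err = sum(wi for x, y, wi in zip(xs, ys, w) if stump_output(t, sign, x) != y)
            if best is None or err < best[0]:
                best = (err, t, sign)
    return best


def adaboost_fit(xs, ys, n_rounds=3):
    """Train stumps one after another; each round re-weights the samples. Labels must be +1/-1."""
    n = len(xs)
    w = [1.0 / n] * n  # start with uniform sample weights
    ensemble = []  # list of (alpha, t, sign)
    for _ in range(n_rounds):
        err, t, sign = fit_weighted_stump(xs, ys, w)
        err = min(max(err, 1e-10), 1 - 1e-10)  # guard against log(0) and division by zero
        alpha = 0.5 * math.log((1 - err) / err)  # lower error -> larger vote
        ensemble.append((alpha, t, sign))
        # Misclassified samples (y * h = -1) are multiplied by exp(+alpha), correct ones by exp(-alpha)
        w = [wi * math.exp(-alpha * y * stump_output(t, sign, x)) for x, y, wi in zip(xs, ys, w)]
        total = sum(w)
        w = [wi / total for wi in w]  # renormalise so the weights sum to 1
    return ensemble


def adaboost_predict(ensemble, x):
    """Sign of the alpha-weighted sum of stump outputs."""
    score = sum(alpha * stump_output(t, sign, x) for alpha, t, sign in ensemble)
    return 1 if score >= 0 else -1


if __name__ == "__main__":
    xs = [1, 2, 3, 4, 5, 6]
    ys = [1, 1, -1, -1, 1, 1]  # no single threshold separates these labels
    model = adaboost_fit(xs, ys, n_rounds=3)
    for alpha, t, sign in model:
        print(f"stump: t={t}, sign={sign:+d}, alpha={alpha:.3f}")
    print("predictions:", [adaboost_predict(model, x) for x in xs])
```

On this toy data no single stump separates the pattern (the best one misclassifies 2 of the 6 points), but the weighted vote of three sequentially trained stumps classifies all six correctly. Set side by side with bagging, this shows the contrast the "Bagging vs. Boosting" chapter covers: bagged models are independent and vote equally, while boosted models depend on their predecessors and carry different weights.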

📲 Call/WhatsApp for AI & ML Training: +91 90432 35205

💬 Drop a comment with your questions and experiences using ensemble learning! Don't forget to LIKE 👍 and SUBSCRIBE 🔔

#MachineLearning #AI #EnsembleLearning #Bagging #Boosting #RandomForest #MLAlgorithms #DataScience #ArtificialIntelligence #TechEducation #XGBoost #AdaBoost
