Deep Learning for Symbolic Mathematics

This model solves integrals and ODEs by doing seq2seq!

Abstract:

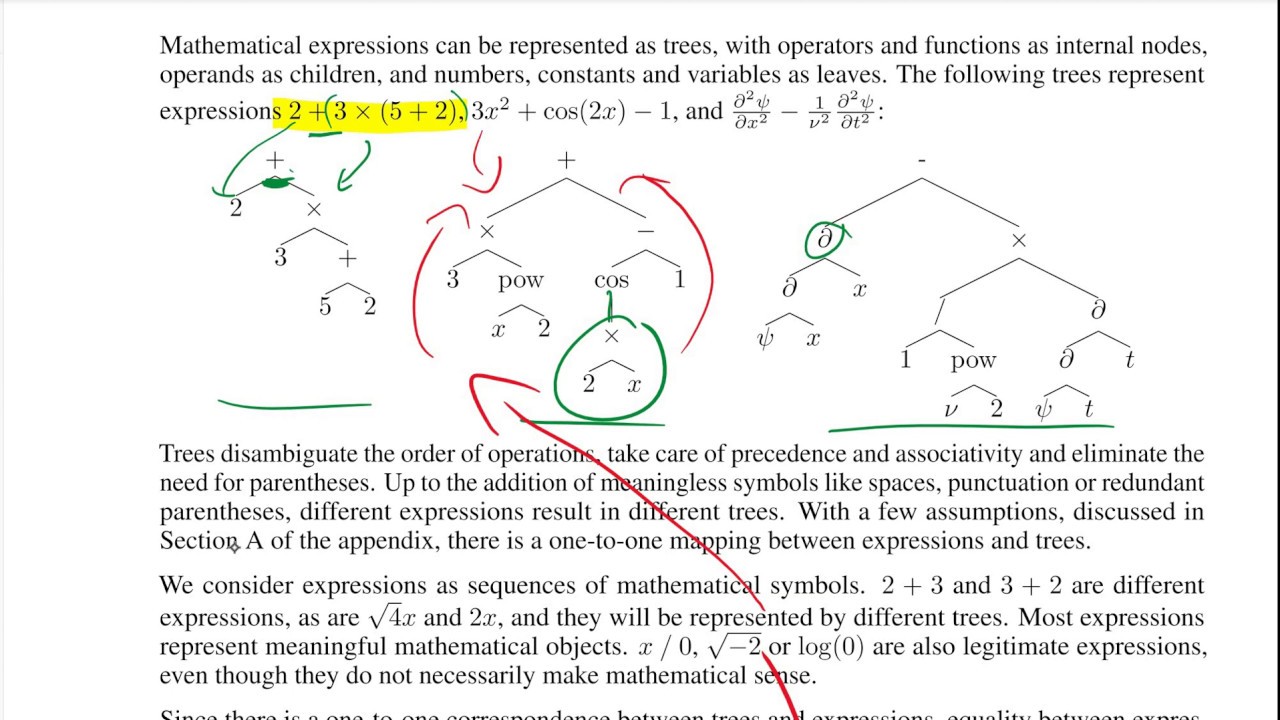

Neural networks have a reputation for being better at solving statistical or approximate problems than at performing calculations or working with symbolic data. In this paper, we show that they can be surprisingly good at more elaborated tasks in mathematics, such as symbolic integration and solving differential equations. We propose a syntax for representing mathematical problems, and methods for generating large datasets that can be used to train sequence-to-sequence models. We achieve results that outperform commercial Computer Algebra Systems such as Matlab or Mathematica.

Authors: Guillaume Lample, François Charton

Links:
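
The abstract mentions "a syntax for representing mathematical problems" that lets a sequence-to-sequence model read and write expressions. One common way to do this, and to my understanding the approach the paper takes, is to treat an expression as a tree and serialize it in prefix (Polish) notation, so that no parentheses are needed. The sketch below is only an illustration under that assumption: the operator table `ARITY`, the nested-tuple tree format, and the helper names `to_prefix` and `from_prefix` are all hypothetical and not taken from the authors' code.

```python
# Illustrative sketch only: ARITY, to_prefix and from_prefix are hypothetical
# names, and the nested-tuple tree format is an assumption made for this example.

# Number of operands each operator takes; anything else is treated as a leaf.
ARITY = {"+": 2, "*": 2, "pow": 2, "cos": 1, "sin": 1, "exp": 1}


def to_prefix(tree):
    """Flatten a nested-tuple expression tree into a list of prefix tokens."""
    if isinstance(tree, tuple):
        op, *args = tree
        tokens = [op]
        for arg in args:
            tokens.extend(to_prefix(arg))
        return tokens
    return [str(tree)]  # leaf: a variable or a constant


def from_prefix(tokens):
    """Rebuild the expression tree from a list of prefix tokens."""

    def parse(pos):
        tok = tokens[pos]
        if tok in ARITY:
            args = []
            pos += 1
            for _ in range(ARITY[tok]):
                arg, pos = parse(pos)
                args.append(arg)
            return (tok, *args), pos
        return tok, pos + 1

    tree, end = parse(0)
    if end != len(tokens):
        raise ValueError("unexpected trailing tokens")
    return tree


# 3*x^2 + cos(2*x) written as a tree, then as a token sequence.
expr = ("+", ("*", "3", ("pow", "x", "2")), ("cos", ("*", "2", "x")))
tokens = to_prefix(expr)
print(" ".join(tokens))
```

Because every operator has a fixed number of operands, the token sequence can be decoded back into exactly one tree, which is what makes it usable as both input and output of a seq2seq model.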


Deep Learning for Symbolic Mathematics

Deep Learning for Symbolic Mathematics!? | Paper EXPLAINED

Guillaume Lample: Deep Learning for Symbolic Mathematics

Kaggle Reading Group: Deep Learning for Symbolic Mathematics

Deep Learning for Symbolic Mathematics | AISC

Deep Learning for Symbolic Mathematics

Deep Learning for Symbolic Mathematics

Kaggle Reading Group: Deep Learning for Symbolic Mathematics (Part 2) | Kaggle

DEEP LEARNING FOR SYMBOLIC MATHEMATICS (Reproducibility study)

Learning Symbolic Equations with Deep Learning

#1 Deep Learning for Symbolic Mathematics by Facebook Research Explained

Interpretable Deep Learning for New Physics Discovery

#12 MATLAB - From Zero to Hero | Symbolic Mathematics & Expressions

Jailbreaking Large Language Models with Symbolic Mathematics 🧠🔐 | everythingAI

Deep Symbolic Regression: Recovering Math Expressions from Data via Risk-Seeking Policy Gradients

Learning Symbolic Equations with Deep Learning

AI vs Machine Learning

Embedding symbolic computation within neural computation for AI & NLP - Paul Smolensky keynote H...

Meeting #103: Deep Learning For Symbolic Mathematics

neural networks vs symbolic ai

Mathematics of Deep Learning Overview | AISC Lunch & Learn

Symbolic Regression using Evolutionary Algorithm

Lecture 24 - The Mathematical Engineering of Deep Learning

Paper - Jailbreaking Large Language Models with Symbolic Mathematics - Audio Podcast
