filmov

tv

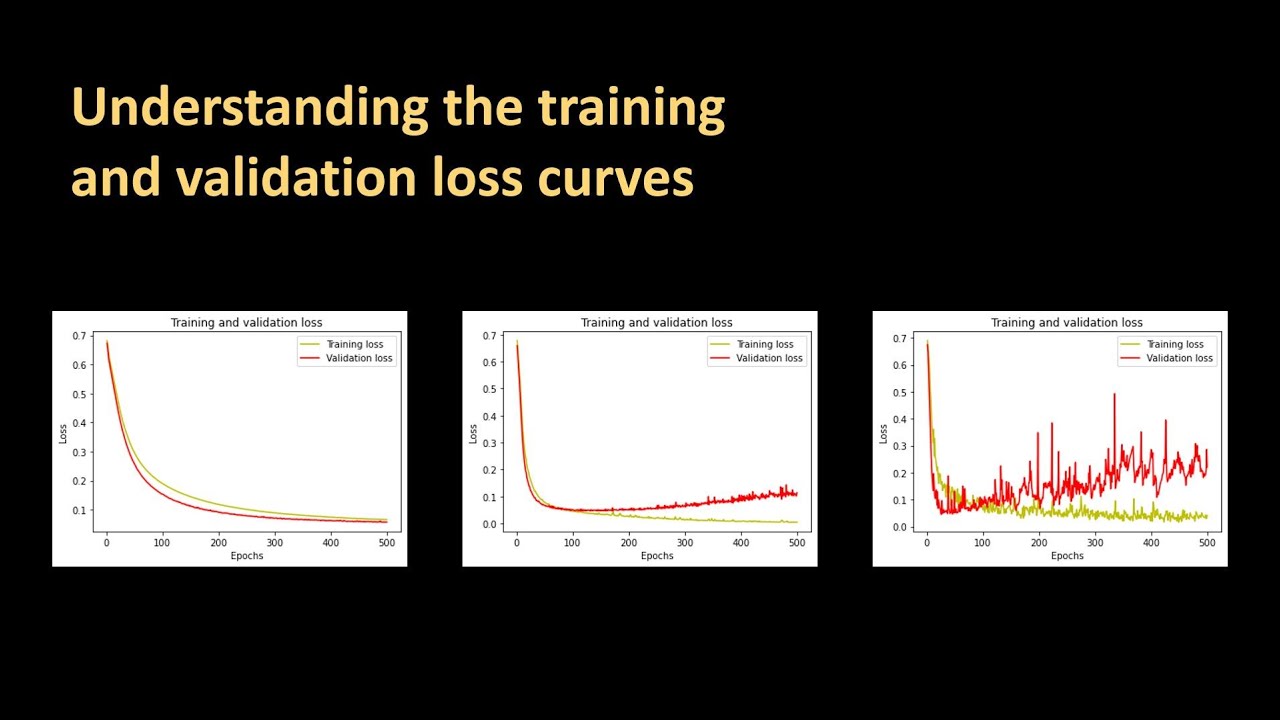

154 - Understanding the training and validation loss curves

Loss curves contain a lot of information about how an artificial neural network is training. This video walks through how to interpret various loss curves generated using the Wisconsin breast cancer data set.
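The video's own code is not reproduced here. As a rough illustration of the kind of reading the description refers to, the sketch below applies a few common rules of thumb to per-epoch training and validation loss histories: find the epoch with the lowest validation loss, then check whether training loss kept falling after it (overfitting), whether validation loss is still improving, or whether both have flattened out. The function name `diagnose_loss_curves`, the `patience` parameter and the three verdict labels are hypothetical, and the heuristics are assumptions, not the method used in the video.

```python
from typing import Sequence, Tuple


def diagnose_loss_curves(
    train_loss: Sequence[float],
    val_loss: Sequence[float],
    patience: int = 3,
) -> Tuple[int, str]:
    """Label a pair of per-epoch loss histories with a rough verdict.

    Returns (best_epoch, verdict), where best_epoch is the 1-based epoch with
    the lowest validation loss. The rules below are common heuristics, assumed
    for illustration; they are not taken from the video.
    """
    if len(train_loss) != len(val_loss) or not train_loss:
        raise ValueError("expected two non-empty loss histories of equal length")

    # Epoch with the lowest validation loss (the first one wins on ties).
    best_idx = min(range(len(val_loss)), key=lambda i: val_loss[i])
    epochs_since_best = len(val_loss) - 1 - best_idx

    if epochs_since_best < patience:
        # Validation loss improved recently: training longer may still help.
        verdict = "still improving"
    elif train_loss[-1] < train_loss[best_idx]:
        # Training loss keeps falling while validation loss has not improved
        # for `patience` epochs: the classic overfitting pattern.
        verdict = "overfitting"
    else:
        # Training loss has not improved since the best validation epoch
        # either: learning has plateaued.
        verdict = "plateau"
    return best_idx + 1, verdict


# Made-up loss values, for illustration only.
train = [0.60, 0.40, 0.30, 0.20, 0.15, 0.10, 0.05]
val = [0.60, 0.45, 0.35, 0.33, 0.36, 0.40, 0.45]
print(diagnose_loss_curves(train, val))  # (4, 'overfitting')
```

In practice the two lists would come from the training framework. For example, Keras's `model.fit` returns a `History` object whose `history` dict holds `loss` and `val_loss` lists when validation data is supplied. The `patience` idea mirrors early stopping: a validation loss that has not improved for several epochs while training loss keeps dropping is the usual sign that the network has started to memorize the training set.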

Code generated in the video can be downloaded from here:
