filmov

tv

Miles Cranmer - The Next Great Scientific Theory is Hiding Inside a Neural Network (April 3, 2024)

Показать описание

Machine learning methods such as neural networks are quickly finding uses in everything from text generation to construction cranes. Excitingly, those same tools also promise a new paradigm for scientific discovery.

Miles Cranmer - The Next Great Scientific Theory is Hiding Inside a Neural Network (April 3, 2024)

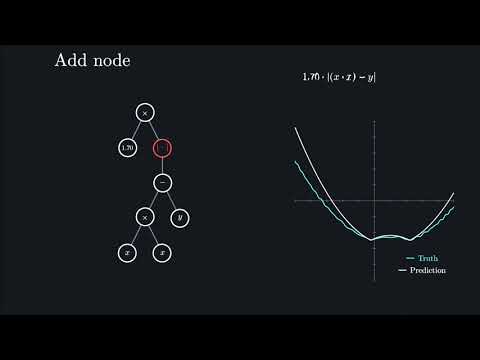

Interpretability via Symbolic Distillation

Flatiron Wide Algorithms and Mathematics - Miles Cranmer (October 20, 2020)

PySR & SymbolicRegression.jl paper 'trailer'

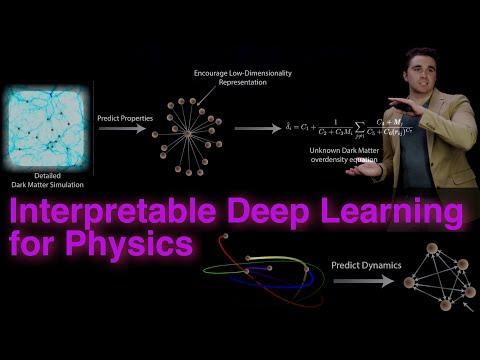

Interpretable Deep Learning for New Physics Discovery

Interpretable Machine Learning with SymbolicRegression.jl | Miles Cranmer | JuliaCon 2023

A3D3 seminar: Polymathic AI: Foundation Models for Science by Miles Cranmer

Review: Symbolic regression (Miles Cranmer)

Miles Cranmer | A Bayesian neural network predicts the dissolution of compact planetary systems

Converting Neural Networks to Symbolic Models

DDPS | The problem with deep learning for physics (and how to fix it) by Miles Cranmer

Interpretable Machine Learning Part 1

Discovering Symbolic Models from Deep Learning with Inductive Biases

Unsupervised Resource Allocation with Graph Neural Networks

шедевр 🤣🤣🤣🤣🤣

Discovering Symbolic Models from Deep Learning with Inductive Biases (Paper Explained)

[PreReg@NeurIPS'20] (60) Unsupervised Resource Allocation with Graph Neural Networks

Worst BMX Day Ever With Scotty Cranmer: Crooked World Presents

Interpretable Deep Learning for Physics

Modeling Dissolution of Compact Planetary Systems

My New One-Of-A-Kind Electric Bike Full Review!

Kyle Cranmer - Some fun examples from the intersection of machine learning and physics

Kyle Cranmer - Simulation-based Inference for Gravitational Wave Astronomy - IPAM at UCLA

CLOSE CALL AT BMX TRAILS!

Комментарии

0:55:55

0:55:55

0:51:04

0:51:04

0:58:34

0:58:34

0:01:34

0:01:34

0:24:08

0:24:08

0:31:51

0:31:51

1:00:47

1:00:47

1:05:18

1:05:18

0:44:24

0:44:24

0:59:48

0:59:48

1:06:58

1:06:58

1:37:28

1:37:28

0:01:44

0:01:44

0:01:01

0:01:01

0:00:21

0:00:21

0:46:13

0:46:13

![[PreReg@NeurIPS'20] (60) Unsupervised](https://i.ytimg.com/vi/po5oB-P8Ppo/hqdefault.jpg) 0:01:01

0:01:01

0:02:16

0:02:16

1:08:36

1:08:36

0:48:09

0:48:09

0:06:22

0:06:22

1:03:19

1:03:19

0:48:39

0:48:39

0:09:09

0:09:09