filmov

tv

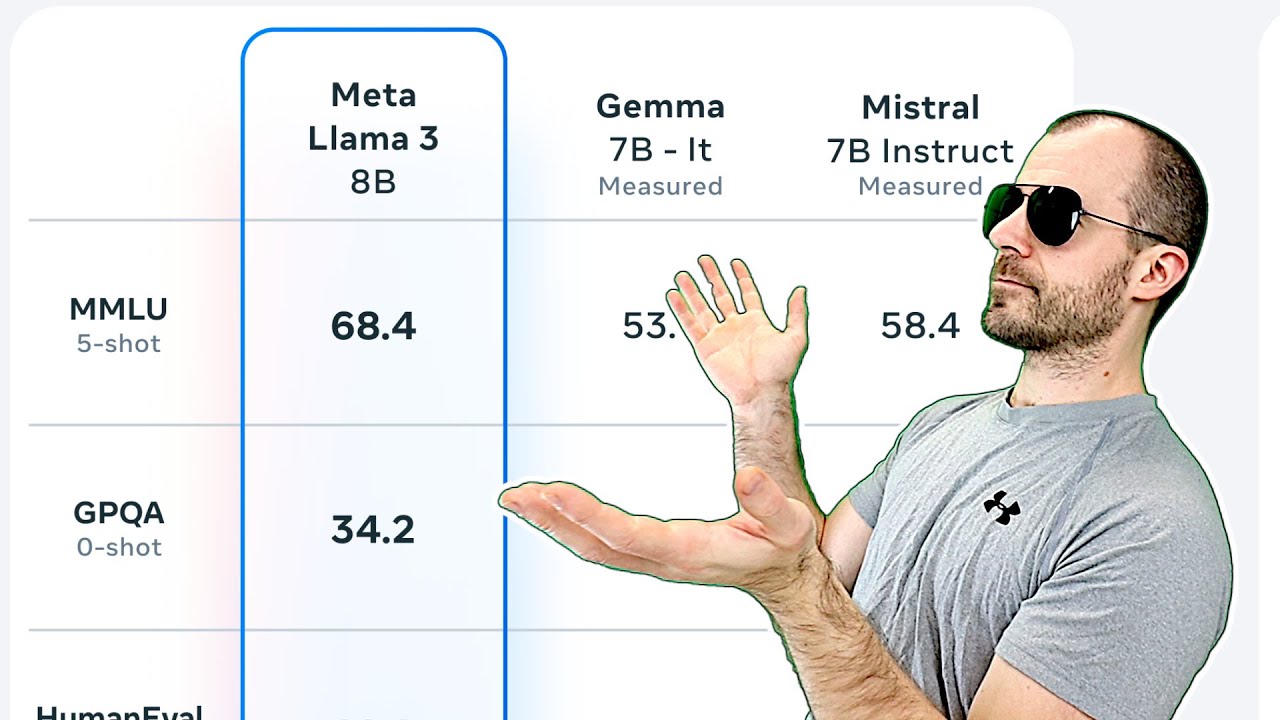

[ML News] Llama 3 changes the game

Meta's Llama 3 is out. New model, new license, new opportunities.

References:

Links:

If you want to support me, the best thing to do is to share out the content :)

If you want to support me financially (completely optional and voluntary, but a lot of people have asked for this):

Bitcoin (BTC): bc1q49lsw3q325tr58ygf8sudx2dqfguclvngvy2cq

Ethereum (ETH): 0x7ad3513E3B8f66799f507Aa7874b1B0eBC7F85e2

Litecoin (LTC): LQW2TRyKYetVC8WjFkhpPhtpbDM4Vw7r9m

Monero (XMR): 4ACL8AGrEo5hAir8A9CeVrW8pEauWvnp1WnSDZxW7tziCDLhZAGsgzhRQABDnFy8yuM9fWJDviJPHKRjV4FWt19CJZN9D4n
