filmov

tv

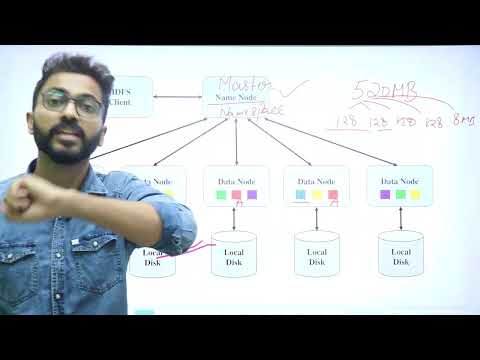

Explaining Hadoop's HDFS (Hadoop Distributed File System)

Показать описание

Big data is awash with acronyms at the moment, none more widely used than HDFS. Let's cut to the chase... it stands for Hadoop Distributed File System.

This is the system of distributing files that allows Hadoop to work on huge data sets at speed. It spreads blocks of data across different servers, as well as duplicating those blocks of data, and storing them distinctly.

Let's see why with an example.

Sarianne works in the financial markets, and runs a lot of predictive models to make sure her investments are minimum risk.

Utilising HDFS, her queries through Hadoop can run quickly because the data blocks are stored separately -- meaning all the computation can happen in one go, rather than queuing up behind each other.

As an added benefit, if one server fails (as one is bound to, given the amount of servers and disk drives needed to run big data projects) it won't stop Sarianne's models from pulling the data they need, because HDFS duplicated those blocks -- meaning Hadoop can return Sarianne's results in double quick time.

This is the system of distributing files that allows Hadoop to work on huge data sets at speed. It spreads blocks of data across different servers, as well as duplicating those blocks of data, and storing them distinctly.

Let's see why with an example.

Sarianne works in the financial markets, and runs a lot of predictive models to make sure her investments are minimum risk.

Utilising HDFS, her queries through Hadoop can run quickly because the data blocks are stored separately -- meaning all the computation can happen in one go, rather than queuing up behind each other.

As an added benefit, if one server fails (as one is bound to, given the amount of servers and disk drives needed to run big data projects) it won't stop Sarianne's models from pulling the data they need, because HDFS duplicated those blocks -- meaning Hadoop can return Sarianne's results in double quick time.

What Is HDFS And How It Works? | Hadoop Distributed File System (HDFS) Architecture | Simplilearn

Explaining Hadoop's HDFS (Hadoop Distributed File System)

What is HDFS | Name Node vs Data Node | Replication factor | Rack Awareness | Hadoop🐘🐘Framework...

Lec-127: Introduction to Hadoop🐘| What is Hadoop🐘| Hadoop Framework🖥

Hadoop🐘Ecosystem | All Components Hdfs🐘,Mapreduce,Hive🐝,Flume,Sqoop,Yarn,Hbase,Zookeeper🪧,Pig🐷...

Hadoop Distributed File System | What is HDFS | How HDFS Works | Intellipaat

What Is HDFS In Hadoop | HDFS Algorithm | HDFS Architecture In Hadoop | Intellipaat

Introduction To Hadoop | Hadoop Explained | Hadoop Tutorial For Beginners | Hadoop | Simplilearn

Hadoop HDFS Story

HDFS (Hadoop Distributed File System) | Big Data Tutorial For Beginners

Hadoop Distributed Filesystem (HDFS) And Its Features

Basics of Hadoop Distributed File System (HDFS)

Hadoop Distributed File Storage System - Part 1 -HDFS- Hadoop big data tutorials

002 What is Hadoop Distributed File System

Map Reduce explained with example | System Design

Hadoop HDFS in Detail

5.1. HDFS | Why HDFS?

What is MapReduce♻️in Hadoop🐘| Apache Hadoop🐘

Interacting with Hadoop Distributed File System (HDFS)

What is HDFS? | HDFS Explained in just a minute | Intellipaat

Hadoop HDFS Introduction | Hadoop Tutorial Videos | Mr. Srinivas

HDFS Tutorial For Beginners | HDFS Architecture | HDFS Tutorial | Hadoop Tutorial | Simplilearn

What is Hadoop Distribute FIle Systems (HDFS) Architecture? | Nodes, HeartBeat | Hadoop Tutorial 3

Introduction to HDFS-1 | Hadoop Distributed File System-1 Tutorial | Hadoop HDFS

Комментарии

0:09:11

0:09:11

0:01:06

0:01:06

0:13:48

0:13:48

0:09:28

0:09:28

0:11:03

0:11:03

0:24:58

0:24:58

0:12:56

0:12:56

0:22:23

0:22:23

0:09:56

0:09:56

0:12:18

0:12:18

0:07:02

0:07:02

0:14:14

0:14:14

0:03:50

0:03:50

0:05:50

0:05:50

0:09:09

0:09:09

0:17:56

0:17:56

0:03:23

0:03:23

0:09:58

0:09:58

0:11:58

0:11:58

0:00:58

0:00:58

0:13:15

0:13:15

0:43:16

0:43:16

0:19:50

0:19:50

0:56:47

0:56:47