Hadoop HDFS Installation on single node cluster with Google cloud VM

In this guide we will discuss how to install Hadoop HDFS on a single node cluster with Google Cloud.

Create a new VM in Google Cloud as shown in the video. Choose an E2 Standard instance and use Ubuntu as the base image.

Open an SSH terminal in the browser window.

Update repositories using this command - sudo apt-get update

Install latest version of JDK using this command - sudo apt-get install default-jdk

Check java version using this command - java -version

To find the Java install directory, use the "which" and "readlink" commands as shown in the video.
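As a rough sketch of that lookup: resolve the java launcher through its symlinks with readlink -f, then trim the trailing /bin/java (and /jre, which Java 8 layouts include) to get a JAVA_HOME candidate. The helper name java_home_from is made up for illustration, and command -v stands in for which.

```shell
# Hypothetical helper: turn the resolved path of the java binary into a JAVA_HOME candidate.
java_home_from() {
  jh_path="${1%/bin/java}"          # drop the trailing /bin/java
  printf '%s\n' "${jh_path%/jre}"   # drop a trailing /jre (present in Java 8 layouts)
}

# Follow the symlink chain from the java launcher to the real binary, if java is installed.
if command -v java >/dev/null 2>&1; then
  java_home_from "$(readlink -f "$(command -v java)")"
fi
```

The printed path is what goes into JAVA_HOME later in this guide.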

Create a Downloads directory for the Hadoop archive - mkdir ~/Downloads

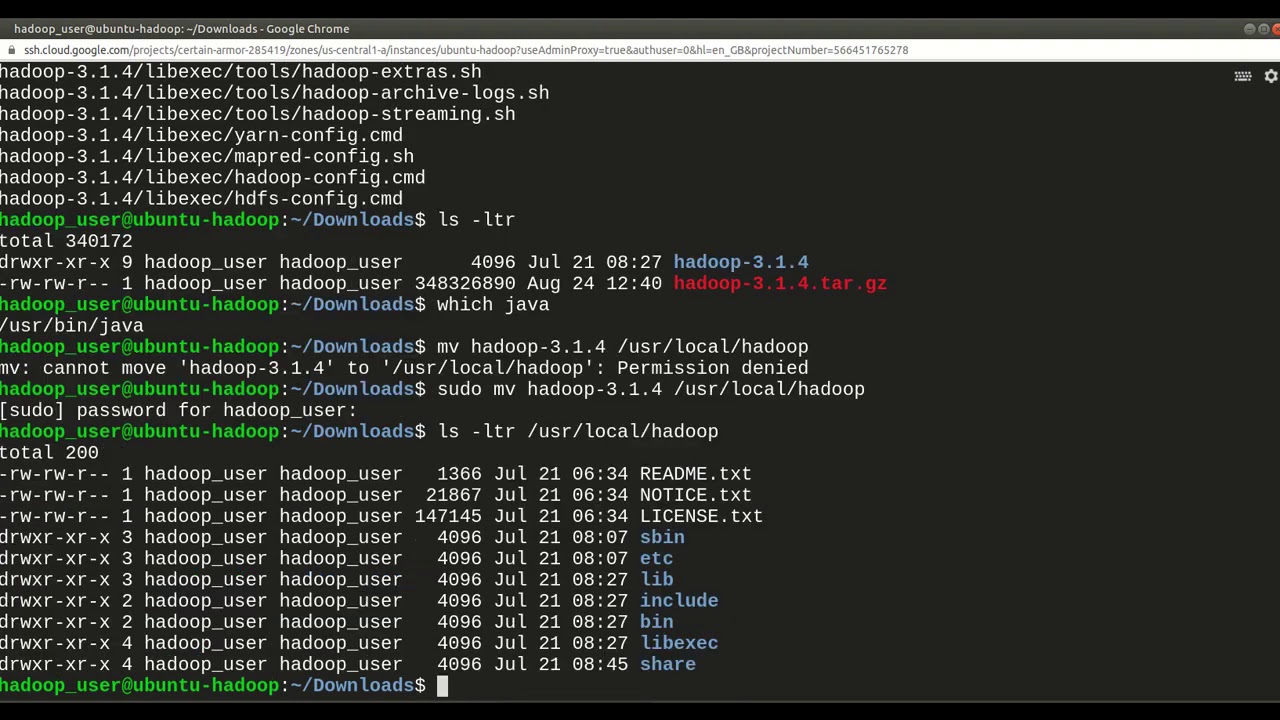
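The download and extraction commands are only visible in the video. A minimal sketch of them, assuming release 3.1.4 (the version named in the move step that follows) and the Apache archive as the download site. The URL and the function name fetch_hadoop are assumptions, not taken from the video.

```shell
# Assumed release and download site; adjust both if the video uses different ones.
HADOOP_VERSION="3.1.4"
HADOOP_TARBALL="hadoop-${HADOOP_VERSION}.tar.gz"
HADOOP_URL="https://archive.apache.org/dist/hadoop/common/hadoop-${HADOOP_VERSION}/${HADOOP_TARBALL}"

# Hypothetical helper: download the archive into ~/Downloads and unpack it there.
# The body runs in a subshell so the caller's working directory is left alone.
fetch_hadoop() (
  mkdir -p "$HOME/Downloads" &&
  cd "$HOME/Downloads" &&
  wget "$HADOOP_URL" &&
  tar -xzf "$HADOOP_TARBALL"   # unpacks into ~/Downloads/hadoop-<version>
)

echo "Run fetch_hadoop to download $HADOOP_URL"
```

Nothing is downloaded until fetch_hadoop is called, so the block is safe to paste first and run when ready.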

Move extracted hadoop directory to /usr/local - mv hadoop-3.1.4 /usr/local/hadoop

Edit the .bashrc file and set the environment variables as shown. Hadoop's commands and scripts depend on these variables.

vi ~/.bashrc

Add the lines below at the end of ~/.bashrc and save the file.

export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64

export PATH=$PATH:$JAVA_HOME/bin

export HADOOP_HOME=/usr/local/hadoop

export PATH=$PATH:$HADOOP_HOME/bin

export PATH=$PATH:$HADOOP_HOME/sbin

export HADOOP_CONF_DIR=/usr/local/hadoop/etc/hadoop

Note the JAVA_HOME variable: it uses the path found earlier with the "which" and "readlink" commands. You may need to change this path if you are using a different version of Java.

Reload environment variables - source ~/.bashrc
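One way to confirm the reload worked is to check that each variable points at a real directory. The helper check_dir_var below is a hypothetical name, a sketch rather than something from the video.

```shell
# Hypothetical helper: report whether a variable's value is an existing directory.
# $1 = variable name (used in the message), $2 = its value
check_dir_var() {
  if [ -n "$2" ] && [ -d "$2" ]; then
    echo "$1 ok: $2"
  else
    echo "$1 is unset or not a directory: $2"
    return 1
  fi
}

# "|| true" keeps going so all three results are shown even if one fails.
check_dir_var JAVA_HOME "${JAVA_HOME:-}" || true
check_dir_var HADOOP_HOME "${HADOOP_HOME:-}" || true
check_dir_var HADOOP_CONF_DIR "${HADOOP_CONF_DIR:-}" || true
```

If JAVA_HOME is reported as missing, the Java path in ~/.bashrc most likely does not match the installed version.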

Edit hadoop-env.sh in $HADOOP_CONF_DIR and add the line below.

export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64
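A sketch of making that edit from the shell instead of an editor, assuming the target is $HADOOP_CONF_DIR/hadoop-env.sh (the usual home of this setting in Hadoop). set_java_home is a hypothetical helper that appends the line only when no active export JAVA_HOME= line is present yet, so running it twice does no harm.

```shell
# Hypothetical helper: append the JAVA_HOME export unless the file already has one.
# $1 = path to the env file, $2 = JAVA_HOME value
set_java_home() {
  if grep -q '^export JAVA_HOME=' "$1" 2>/dev/null; then
    echo "JAVA_HOME already set in $1"
  else
    echo "export JAVA_HOME=$2" >> "$1"
  fi
}

# Usage on the VM (the file path is an assumption, see above):
# set_java_home "$HADOOP_CONF_DIR/hadoop-env.sh" /usr/lib/jvm/java-8-openjdk-amd64
```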

Edit the Hadoop core-site settings and add the lines as shown. This sets the default HDFS address and port.

Please replace the square brackets below with angle brackets. YouTube does not allow angle brackets in descriptions, so square brackets are used here.

[property]

[value]hdfs://localhost:54310[/value]

[/property]
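The bracket swap can also be done mechanically. A small sketch with sed, where to_angle_brackets is a made-up helper name. It rewrites every square bracket, so it only suits snippets like these whose values contain none of their own. The [name] line of this property did not survive in the description. The sketch assumes fs.defaultFS, the standard Hadoop key for the default filesystem URI, which may differ from what the video uses.

```shell
# Hypothetical helper: turn the description's square brackets back into XML angle brackets.
to_angle_brackets() {
  sed -e 's/\[/</g' -e 's/]/>/g'
}

# The [name] line is an assumption (fs.defaultFS); the [value] line is from the description.
printf '%s\n' \
  '[property]' \
  '[name]fs.defaultFS[/name]' \
  '[value]hdfs://localhost:54310[/value]' \
  '[/property]' | to_angle_brackets
```

The converted lines belong inside the configuration element of core-site.xml.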

Edit the yarn-site settings and add the lines as shown.

Add the lines below. Please replace the square brackets with angle brackets, since YouTube does not allow angle brackets in descriptions.

[property]

[value]mapreduce_shuffle[/value]

[/property]

[property]

[/property]

[property]

[value]localhost[/value]

[/property]
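The [name] lines of these properties are missing from the description. As a hedged sketch, the two surviving values match the standard YARN keys yarn.nodemanager.aux-services and yarn.resourcemanager.hostname. That pairing is an assumption, and the empty middle property is left out because nothing of it survives. xml_property is a hypothetical helper that prints one entry to paste inside the configuration element of yarn-site.xml.

```shell
# Hypothetical helper: print one Hadoop <property> entry.
# $1 = property name, $2 = property value
xml_property() {
  printf '<property>\n  <name>%s</name>\n  <value>%s</value>\n</property>\n' "$1" "$2"
}

# Assumed key names; the values come from the description.
xml_property yarn.nodemanager.aux-services mapreduce_shuffle
xml_property yarn.resourcemanager.hostname localhost
```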

Edit the mapred-site settings and add the lines below.

[property]

[value]localhost:54311[/value]

[/property]

[property]

[value]yarn[/value]

[/property]
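Here too the [name] lines are missing. The values localhost:54311 and yarn fit the MapReduce keys mapreduce.jobtracker.address and mapreduce.framework.name, so the sketch below assumes those names and assumes the fragment belongs in mapred-site.xml. mapred_site_fragment is a hypothetical helper that only prints the text.

```shell
# Hypothetical helper: print a mapred-site.xml fragment with assumed key names.
mapred_site_fragment() {
  cat <<'EOF'
<property>
  <name>mapreduce.jobtracker.address</name>
  <value>localhost:54311</value>
</property>
<property>
  <name>mapreduce.framework.name</name>
  <value>yarn</value>
</property>
EOF
}

mapred_site_fragment
```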

Edit the hdfs-site settings and add the replication factor and the namenode and datanode directories.

Add or update the lines below to set the namenode and datanode directories.

[property]

[value]1[/value]

[/property]

[property]

[/property]

[property]

[/property]

[property]

[value]true[/value]

[/property]
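Only the values 1 and true survive in this block. A sketch of the first three entries, assuming the standard keys dfs.replication, dfs.namenode.name.dir and dfs.datanode.data.dir, and assuming the directory values are the hadoop_data paths created in the next step. The fourth property (value true) is left out because its name cannot be recovered from the description. hdfs_site_fragment and HDFS_DATA_ROOT are hypothetical names.

```shell
# Base directory for HDFS data; falls back to the guide's install path when HADOOP_HOME is unset.
HDFS_DATA_ROOT="${HADOOP_HOME:-/usr/local/hadoop}/hadoop_data/hdfs"

# Hypothetical helper: print an hdfs-site.xml fragment with assumed key names.
hdfs_site_fragment() {
  cat <<EOF
<property>
  <name>dfs.replication</name>
  <value>1</value>
</property>
<property>
  <name>dfs.namenode.name.dir</name>
  <value>file:${HDFS_DATA_ROOT}/namenode</value>
</property>
<property>
  <name>dfs.datanode.data.dir</name>
  <value>file:${HDFS_DATA_ROOT}/datanode</value>
</property>
EOF
}

hdfs_site_fragment
```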

Create the HDFS data directories.

mkdir -p $HADOOP_HOME/hadoop_data/hdfs/namenode

mkdir -p $HADOOP_HOME/hadoop_data/hdfs/datanode

Create the masters file and add a localhost line to it. This is the IP address or host name of the master node; for a single node setup it is just localhost.

touch $HADOOP_CONF_DIR/masters

vi $HADOOP_CONF_DIR/masters

Add the line below, or the node's IP address.

localhost

Create the slaves file and add a localhost line to it. This is the IP address or host name of each data node.

vi $HADOOP_CONF_DIR/slaves

Add the line below, or the node's IP address.

localhost
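One caveat worth checking against your own install: from Hadoop 3.0 onward the start scripts read the list of worker hosts from a file named workers, which replaced slaves. A sketch that writes localhost under both names so either release line finds it. write_host_file and CONF_DIR are hypothetical names, and the fallback path is the install location used in this guide.

```shell
# Hypothetical helper: write a single host name into a file under a config directory.
# $1 = config directory, $2 = file name, $3 = host
write_host_file() {
  printf '%s\n' "$3" > "$1/$2"
}

# Use the guide's config directory; do nothing if it is missing or not writable.
CONF_DIR="${HADOOP_CONF_DIR:-/usr/local/hadoop/etc/hadoop}"
if [ -d "$CONF_DIR" ] && [ -w "$CONF_DIR" ]; then
  for name in workers slaves; do
    write_host_file "$CONF_DIR" "$name" localhost
  done
fi
```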

Format the namenode using the command below.

hdfs namenode -format

Set up SSH keys for the current user to allow passwordless access to localhost.

Generate a new SSH key and copy it to the authorized_keys file as shown.

ssh-keygen

Add the generated key to the authorized_keys file.
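The exact command is only shown in the video. A minimal sketch of the step, assuming ssh-keygen wrote its default RSA key pair to ~/.ssh/id_rsa and ~/.ssh/id_rsa.pub. authorize_key is a hypothetical helper that appends the public key once and tightens the file permissions, which sshd typically insists on.

```shell
# Hypothetical helper: append a public key to an authorized_keys file once, then restrict access.
# $1 = public key file, $2 = authorized_keys file
authorize_key() {
  touch "$2" &&
  { grep -qxF -e "$(cat "$1")" "$2" || cat "$1" >> "$2"; } &&
  chmod 600 "$2"
}

# Usage on the VM (key file name is an assumption, see above):
# authorize_key ~/.ssh/id_rsa.pub ~/.ssh/authorized_keys
```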

Try the ssh command now. It should log in without asking for a password.

ssh localhost

Start HDFS, YARN and History server
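The start commands themselves are not in the description. In a Hadoop 3 install the usual ones are start-dfs.sh, start-yarn.sh and mapred --daemon start historyserver; whether the video uses exactly these is an assumption. The wrapper below, with the hypothetical name start_hadoop_services, only prints them unless DRY_RUN is set to 0.

```shell
# Hypothetical wrapper: list (or run) the start commands in order.
# DRY_RUN defaults to 1 so nothing is started by accident; set DRY_RUN=0 on the VM.
start_hadoop_services() {
  for cmd in "start-dfs.sh" "start-yarn.sh" "mapred --daemon start historyserver"; do
    if [ "${DRY_RUN:-1}" = "1" ]; then
      echo "would run: $cmd"
    else
      $cmd || return 1   # unquoted on purpose so the command and its arguments split
    fi
  done
}

start_hadoop_services
```

To start the services for real: DRY_RUN=0 start_hadoop_services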

Run the command below to check the status of the Hadoop services.

jps
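With HDFS, YARN and the History Server all up, jps normally lists NameNode, DataNode, SecondaryNameNode, ResourceManager, NodeManager and JobHistoryServer. A sketch that reports which of those are absent, where missing_daemons is a hypothetical helper reading jps output on standard input.

```shell
# Hypothetical helper: read jps output on stdin and print each expected daemon that is absent.
missing_daemons() {
  jps_out="$(cat)"
  for daemon in NameNode DataNode SecondaryNameNode ResourceManager NodeManager JobHistoryServer; do
    # jps prints "<pid> <ClassName>", so compare the name against the whole second field.
    printf '%s\n' "$jps_out" |
      awk -v d="$daemon" '$2 == d { found = 1 } END { exit !found }' ||
      echo "$daemon"
  done
}

# Run the check only where jps is available.
if command -v jps >/dev/null 2>&1; then
  jps | missing_daemons
fi
```

No output means every expected daemon is running; any printed name points at the service to look into.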
