Active Vision Based Embodied-AI Design For Nano-UAV Autonomy | Ph.D. Defense of Nitin J. Sanket

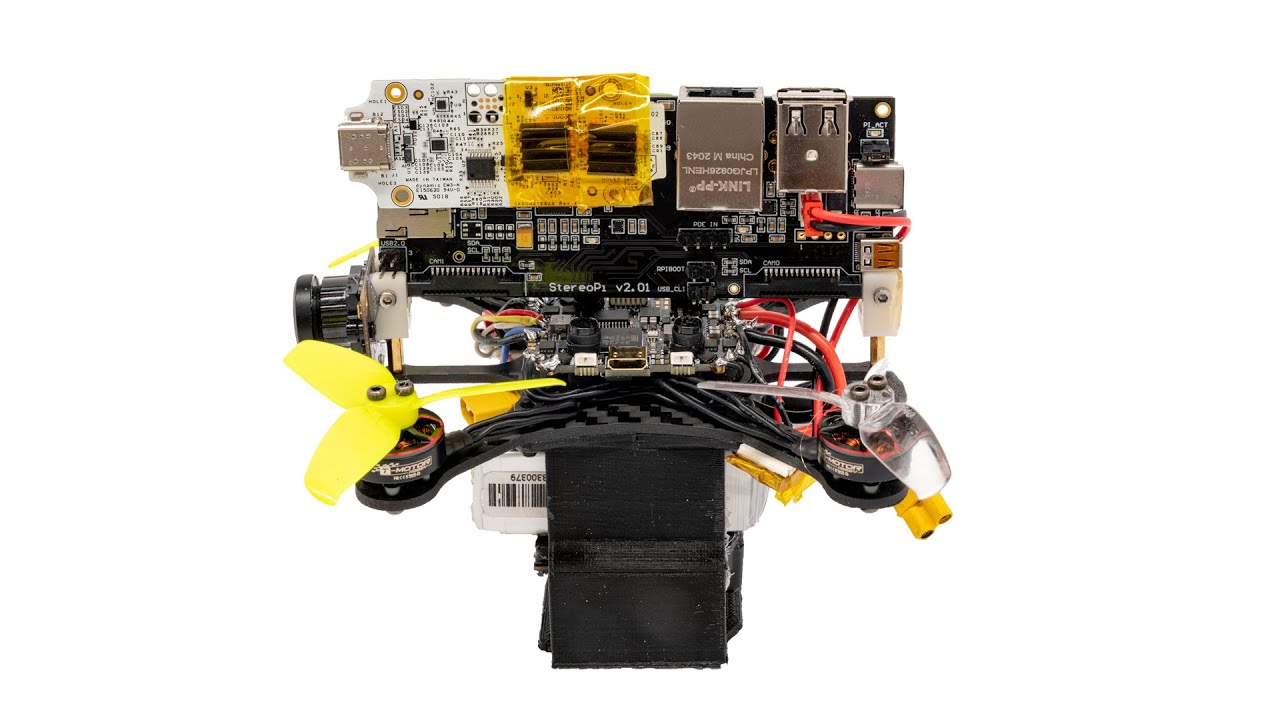

Currently, the state of the art in aerial robot autonomy relies on sensors that directly perceive the world in 3D and on a massive amount of computation to process this information. This is in stark contrast to the methods used by small living beings such as birds and bees: they use exploratory, active movements to gather more information and thereby simplify the perception task at hand. Using this active vision-based philosophy, we achieve state-of-the-art autonomy on nano-quadrotors with minimal on-board sensing and computation.

In particular, I showcase four methods of achieving activeness on an aerial robot: 1. by moving the agent itself, 2. by employing an active sensor, 3. by moving a part of the agent's body, and 4. by hallucinating active movements. Next, to make this work practically applicable, I show how hardware and software co-design can be performed to optimize the form of active perception to be used. Finally, I present the world's first prototype of a RoboBeeHive, which shows how to integrate multiple competencies centered around active vision in all its glory.

Reference:

Nitin Jagannatha Sanket

Active Vision Based Embodied-AI Design For Nano-UAV Autonomy

Affiliation:
