Entropy is NOT Disorder | #VeritasiumContest

This video has three goals:

1. Provide a better definition of entropy (number of ways a state can exist).

2. Explain why it's useful (dE = T dS, at fixed volume and particle number).

3. Give an intuitive reason for why it tends towards a maximum in an isolated system.

I'm using the Boltzmann definition of entropy, S = k ln Ω, in the same way Daniel Schroeder does in his textbook, An Introduction to Thermal Physics.

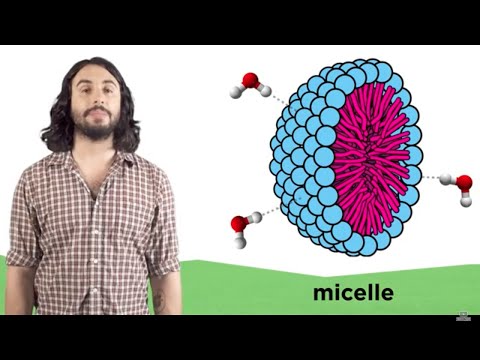

Also, I want to point out that most people think of disorder as disorganization, like atoms scattered about randomly. In that sense, a crystal forming or oil and water separating increases order at the expense of the surrounding air molecules moving slightly faster, which looks like a net increase in order. If your response does not take this common understanding of disorder into account, it is going to be much weaker and likely incorrect.
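To put rough numbers on the crystal example, here is a minimal Python sketch for freezing one mole of supercooled water. It assumes two simplifications: the enthalpy of fusion stays at its 0 °C value of about 6.01 kJ/mol at every temperature, and the surroundings act as a large heat reservoir. The function name is illustrative. The water's entropy drops as it forms ordered ice. Whenever the surroundings are below the melting point, the released heat raises their entropy by more than that drop.

```python
# Rough entropy bookkeeping for freezing 1 mol of water.
# Simplifying assumptions: the enthalpy of fusion is held at its 0 °C value,
# and the surroundings are a large reservoir at constant temperature t_surr.
DH_FUS = 6010.0  # J/mol, approximate enthalpy of fusion of water at 0 °C
T_MELT = 273.15  # K, normal melting point of water


def freezing_entropy_changes(t_surr: float) -> tuple[float, float, float]:
    """Return (dS_water, dS_surroundings, dS_total) in J/K for 1 mol."""
    ds_water = -DH_FUS / T_MELT  # liquid -> ordered ice: the water's entropy falls
    ds_surr = DH_FUS / t_surr    # released heat makes surrounding molecules move faster
    return ds_water, ds_surr, ds_water + ds_surr


for t in (263.15, 273.15, 283.15):  # surroundings at -10 °C, 0 °C, +10 °C
    ds_water, ds_surr, ds_total = freezing_entropy_changes(t)
    print(f"T_surr={t:.2f} K  water {ds_water:+.2f}  "
          f"surroundings {ds_surr:+.2f}  total {ds_total:+.2f} J/K")
```

With the surroundings at -10 °C the total comes out positive, so freezing proceeds. At +10 °C it comes out negative, so freezing does not proceed. At 0 °C the two terms cancel.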

Other videos for the Veritasium Contest about how entropy is not disorder

___________________________________________________________________________

If I had to choose between winning the Veritasium contest and having everyone understand that entropy is not disorder, I would give up the win. For this reason, I am linking to other videos in the Veritasium contest in the hope of boosting as many entropy videos into the top 100 as possible. Also, I like their explanations.

Links

___________________________________________________________________________

#VeritasiumContest #veritasiumcontest

Transcript

___________________________________________________________________________

Entropy is not disorder. If it were, the spontaneous formation of highly ordered materials such as crystals, or the separation of oil and water, would violate the Second Law of Thermodynamics. Instead, entropy, denoted S, connects two concepts:

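First, entropy measures the number of ways a state could exist. Precisely, S = k ln Ω, where Ω counts the microscopic arrangements (microstates) that look the same overall and k is Boltzmann's constant.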

Say we have 100 coins. There is only one way for all of them to be heads, so this is a low-entropy state. On the other hand, there are about 10^29 ways (more than a hundred billion billion billion) for 100 coins to be half heads and half tails, so this is a high-entropy state.
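The coin counts can be checked directly. This Python sketch treats each exact heads/tails sequence as one microstate. It takes the multiplicity of "k heads out of n coins" to be the binomial coefficient and reports entropy as S/k = ln Ω, which sets Boltzmann's constant to 1. The function names are illustrative and do not come from the video.

```python
import math


def multiplicity(n_coins: int, n_heads: int) -> int:
    """Number of microstates (exact heads/tails sequences) with n_heads heads."""
    return math.comb(n_coins, n_heads)


def entropy(n_coins: int, n_heads: int) -> float:
    """Boltzmann entropy in units of k: S/k = ln(Omega)."""
    return math.log(multiplicity(n_coins, n_heads))


print(multiplicity(100, 100))            # 1: all heads
print(f"{multiplicity(100, 50):.3e}")    # 1.009e+29: half heads, half tails
print(entropy(100, 100))                 # 0.0
print(f"{entropy(100, 50):.1f}")         # 66.8
```

Because S grows with ln Ω, the half-and-half state has an entropy of about 66.8 k, compared with 0 for all heads.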

Second, entropy measures how energy relates to temperature: at fixed volume and particle number, 1/T = dS/dE, or equivalently dE = T dS.

Since one quantity describes both, you can figure out how temperature affects a system by counting the number of ways each of its states could exist.
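Here is a sketch of "counting gives temperature" in Python, using the Einstein solid. This is a standard textbook model; it appears in Schroeder's book, but this code is not taken from it. In the model, N oscillators share q identical energy units ε, which gives Ω = C(q + N - 1, q). For simplicity, ε and k are both set to 1. The temperature then follows from 1/T = dS/dE, estimated here with a centred finite difference. The helper names are hypothetical.

```python
import math


def einstein_multiplicity(n_osc: int, q: int) -> int:
    """Ways to share q indistinguishable energy units among n_osc oscillators."""
    return math.comb(q + n_osc - 1, q)


def entropy(n_osc: int, q: int) -> float:
    """S/k = ln(Omega); math.log accepts arbitrarily large Python ints."""
    return math.log(einstein_multiplicity(n_osc, q))


def temperature(n_osc: int, q: int) -> float:
    """kT/eps from 1/T = dS/dE, via a centred difference over q-1 .. q+1."""
    if q < 1:
        raise ValueError("q must be at least 1 for a centred difference")
    delta_s = entropy(n_osc, q + 1) - entropy(n_osc, q - 1)
    return 2.0 / delta_s  # energy changes by 2 units across the difference


for q in (50, 100, 200, 400):
    print(f"N=100, q={q}: kT/eps ≈ {temperature(100, q):.3f}")
```

Adding energy raises the temperature. For large N with q = N, the result approaches the large-system value 1/ln 2 ≈ 1.44.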

So why does entropy tend to a maximum in an isolated system, as the Second Law of Thermodynamics states? If every microscopic arrangement is equally likely, states with higher entropy have more ways to exist and therefore a higher probability. In other words, the Second Law says that isolated systems tend toward their most likely states.
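A small simulation makes the "most likely state" argument concrete. This Python sketch assumes every microstate of the 100 coins is equally likely. It first computes what fraction of all 2^100 sequences have between 40 and 60 heads. It then runs an Ehrenfest-style process, a model chosen here for illustration rather than one from the video, that starts from the single all-heads state and flips one randomly chosen coin per step.

```python
import math
import random


def fraction_near_half(n_coins: int, width: int) -> float:
    """Fraction of all 2**n_coins microstates whose head count is within width of n/2."""
    lo, hi = n_coins // 2 - width, n_coins // 2 + width
    favourable = sum(math.comb(n_coins, k) for k in range(lo, hi + 1))
    return favourable / 2**n_coins


def random_flips(n_coins: int, steps: int, seed: int = 0) -> list[int]:
    """Start with all heads, flip one random coin per step; return head counts per step."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    coins = [True] * n_coins   # True = heads
    history = []
    for _ in range(steps):
        i = rng.randrange(n_coins)
        coins[i] = not coins[i]
        history.append(sum(coins))
    return history


print(f"P(40..60 heads) = {fraction_near_half(100, 10):.4f}")
history = random_flips(100, 10_000)
print("heads after 10, 100, 1000, 10000 flips:",
      [history[i - 1] for i in (10, 100, 1000, 10_000)])
```

The exact fraction comes out to roughly 0.96. The random flipping drifts away from 100 heads and then wanders near 50, simply because those macrostates contain overwhelmingly more microstates.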
