Challenges in AI-infused Telehealth in the Post-Covid 19 Era

1. Issues

The sudden emergence of the COVID-19 pandemic accelerated the use and acceptance of technology in healthcare. However, the use of advanced artificial intelligence (AI) algorithms in certain areas of healthcare is still in its infancy, and their effectiveness and potential implications are not yet well understood. Hence, technologies that utilize AI should go through a thorough evaluation to understand their practical limitations before they can be of clinical relevance for sensitive application areas such as COVID-19, mental health, and suicide. In this work, we critically examine the utilization of existing state-of-the-art AI methods for two specific problems: (i) Virtual Health Assistants (VHAs) for patients with suicide risk, and (ii) recommender systems in mental health and addiction.

2. AI and COVID-19-related Projects

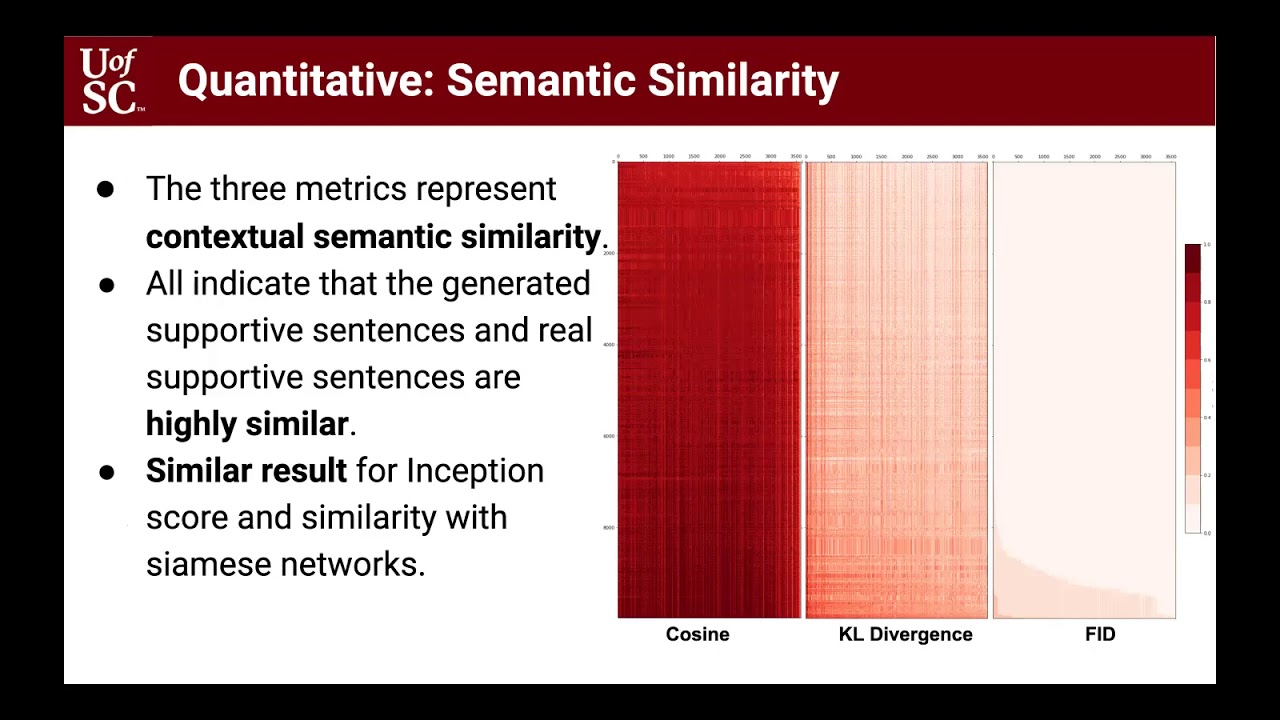

(a) Virtual Health Assistants (VHAs) may aid clinicians in mental health care, as they could provide timely support. We trained generative adversarial networks (GANs) that automatically generate statements intended to support and comfort suicidal patients, improve their mood, ease their burden, and ultimately prevent them from harming themselves. We quantitatively evaluated the generated supportive text for coherence, meaningfulness, semantic similarity, and readability. Three practitioners qualitatively evaluated the outcome for safety, understandability, supportiveness, and empathy. The quantitative metrics show that the generated language is very similar to human-generated supportive language, while the practitioners were ambivalent about the supportive nature of the statements and found their clinical use premature and unsafe without proper monitoring.

(b) Recommender Systems in Healthcare. The COVID-19 pandemic brought restrictions such as stay-at-home orders, which affected the social well-being of the population and the prevalence of addiction and mental health problems. This prevalence, together with an overloaded healthcare system in the middle of a pandemic, prompted individuals to seek help in alternative venues, such as Reddit. Users on Reddit enact different informal roles, such as support providers and support seekers. We designed a recommender system based on PHQ-9 and GAD that maps support providers to support seekers for addiction and mental health. This system could be utilized in a formal online platform to match patients to an appropriate practitioner. Evaluated by practitioners, the system shows promising results, achieving 75% accuracy, yet it is still far from safe deployment in a real-world healthcare application.
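The description does not say how seekers and providers are actually matched, so the following is only a minimal sketch of one possible approach, not the authors' method. It assumes each user is summarized as a small numeric profile (here a hypothetical pair of depression-related and anxiety-related scores, loosely inspired by the PHQ-9 and GAD questionnaires) and ranks providers by cosine similarity to the seeker. The names `cosine`, `recommend`, `provider_a`, and `provider_b`, as well as all numbers, are illustrative assumptions.

```python
from math import sqrt


def cosine(u, v):
    """Cosine similarity between two equal-length numeric vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = sqrt(sum(a * a for a in u))
    norm_v = sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        # A zero vector has no direction; treat it as dissimilar to everything.
        return 0.0
    return dot / (norm_u * norm_v)


def recommend(seeker, providers, top_k=1):
    """Return the names of the top_k providers most similar to the seeker."""
    ranked = sorted(
        providers,
        key=lambda name: cosine(seeker, providers[name]),
        reverse=True,
    )
    return ranked[:top_k]


# Hypothetical profiles: [depression-related score, anxiety-related score].
# These values are made up for illustration and come from no real data.
providers = {
    "provider_a": [0.9, 0.1],
    "provider_b": [0.2, 0.8],
}
seeker = [0.1, 0.9]

best = recommend(seeker, providers)
print(best)
```

In this toy setup the anxiety-dominant seeker is matched to `provider_b`, whose profile points in nearly the same direction. A real system would also need the practitioner review and safety checks that the description stresses.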

3. Lessons learned

Our findings for both applications suggest that the advanced AI methods (e.g., automated text generation models, recommender systems) to be used in VHAs or virtual platforms for mental health and suicidal patients need substantial progress and thorough qualitative evaluation accompanied by safety protocols. As high-precision and patient-centric solutions are crucial in healthcare, explainable AI methods that deliberately center on the specific needs of patients and their treatment will play a significant role.

Members - Ugur Kursuncu, Manas Gaur, Kaushik Roy, Amit Sheth
