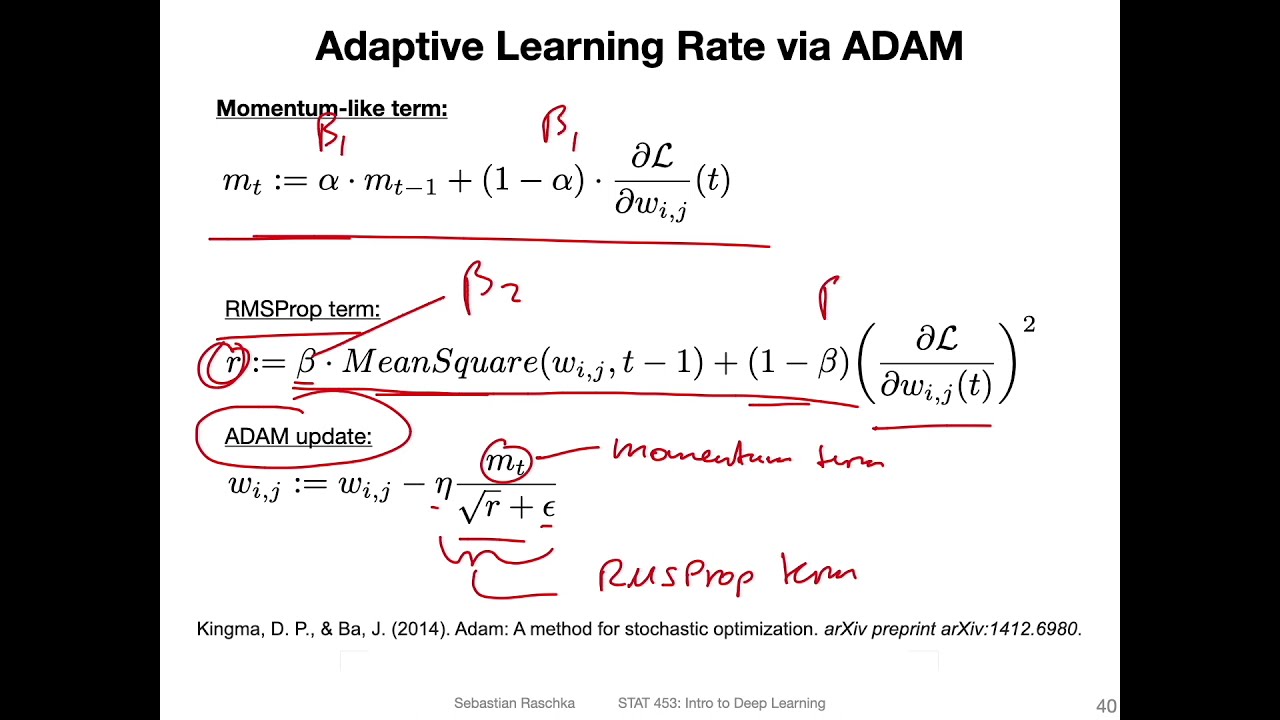

L12.4 Adam: Combining Adaptive Learning Rates and Momentum

-------

This video is part of my Introduction to Deep Learning course.

-------
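The title's claim, that Adam combines momentum with adaptive (per-parameter) learning rates, can be illustrated with a minimal sketch. This is not the course's own code. It assumes plain Python lists of scalar parameters and uses the default hyperparameters from Kingma and Ba's Adam paper (lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8). The helper names `adam_step` and `minimize_quadratic` are hypothetical and chosen for illustration only.

```python
import math
from typing import List, Tuple


def adam_step(
    params: List[float],
    grads: List[float],
    m: List[float],
    v: List[float],
    t: int,
    lr: float = 0.001,
    beta1: float = 0.9,
    beta2: float = 0.999,
    eps: float = 1e-8,
) -> Tuple[List[float], List[float], List[float]]:
    """One Adam update (hypothetical helper). t is the 1-based step count."""
    new_params, new_m, new_v = [], [], []
    for p, g, m_i, v_i in zip(params, grads, m, v):
        # Momentum part: exponential moving average of the gradients (first moment).
        m_i = beta1 * m_i + (1 - beta1) * g
        # RMSProp part: exponential moving average of the squared gradients (second moment).
        v_i = beta2 * v_i + (1 - beta2) * g * g
        # Bias correction, because m and v are initialized at zero.
        m_hat = m_i / (1 - beta1 ** t)
        v_hat = v_i / (1 - beta2 ** t)
        # Momentum direction scaled by a per-parameter adaptive learning rate.
        p = p - lr * m_hat / (math.sqrt(v_hat) + eps)
        new_params.append(p)
        new_m.append(m_i)
        new_v.append(v_i)
    return new_params, new_m, new_v


def minimize_quadratic(start: List[float], steps: int = 2000, lr: float = 0.05) -> List[float]:
    """Hypothetical demo: minimize f(x) = sum(x_i**2) with Adam; its gradient is 2 * x_i."""
    params = list(start)
    m = [0.0] * len(params)
    v = [0.0] * len(params)
    for t in range(1, steps + 1):
        grads = [2.0 * p for p in params]
        params, m, v = adam_step(params, grads, m, v, t, lr=lr)
    return params
```

For example, `minimize_quadratic([3.0, -1.5])` should drive both coordinates toward 0. Note that on the very first step, bias correction makes each parameter move by roughly `lr` regardless of its gradient's magnitude; this is the adaptive-learning-rate effect, since the division by `sqrt(v_hat)` normalizes the step per parameter.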

Adam Optimizer Explained in Detail | Deep Learning

AdamW Optimizer Explained #datascience #machinelearning #deeplearning #optimization

Optimization in Data Science - Part 4: ADAM

Adam Optimizer

Unit 6.3 | Using More Advanced Optimization Algorithms | Part 2 | Adaptive Learning Rates

Deep Learning Lecture 4.4 - RMSprop & Adam

Adaptive Gradient Descent

Adam Optimization Part-8

Adam Optimizer Explained in Detail with Animations | Optimizers in Deep Learning Part 5

PYTHON : How to set adaptive learning rate for GradientDescentOptimizer?

Adaptive learning

EUSIPCO 2020 Tutorial 7-3: Adaptive Optimization Methods for Machine Learning and Signal Processing

Lecture 45 Optimisers RMSProp, AdaDelta and Adam Optimiser

Adam Optimizer for Neural Network || Lesson 15 || Deep Learning || Learning Monkey ||

Adam Optimizer or Adaptive Moment Estimation Optimizer

Top Optimizers for Neural Networks

function optimization with Adam Optimizer

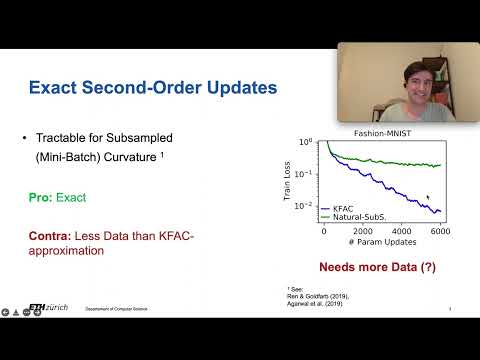

Gradient Descent on Neurons and its Link to Approximate Second-order Optimisation

69 Adam (Adaptive Moment Estimation) Optimization - Reduce the Cost in NN

Yutian Chen | 'Towards Learning Universal Hyperparameter Optimizers with Transformers'

ADAM OPTIMIZER IMPLEMENTATION

03 - Methods for Stochastic Optimisation: AdaGrad, RMSProp and Adam
