Q: How put 1000 PDFs into my LLM?

A subscriber asks: how can you put thousands of PDFs into a large language model (LLM) and perform Q&A over them? How can you integrate a huge amount of corporate and private data into an open-source LLM?

#aieducation

#chatgpt

Build a PDF Document Question Answering LLM System With Langchain,Cassandra,Astra DB,Vector Database

Using Question Banks for PDF Tests

Build a PDF Document Question Answering System with Llama2, LlamaIndex

[full] PDFTriage: Question Answering over Long, Structured Documents

Loaders, Indexes & Vectorstores in LangChain: Question Answering on PDF files with ChatGPT

AFRI Forest Guard Answer Key 2021 PDF of All Question Booklets Set Wise Download

Kerala SET Physics Exam 2023: Previous Question Paper PDF Download

1500 LDC Question Pattern Set // Download PDF

IDBI EXECUTIVE EXAM 2023|COMPUTER AWARENESS PDF 1000 QUESTION |

how to download all pdf |airforce physics 1000 question| download physics question |physics notes

10th Question Bank Solution with pdf 🔥 Maharashtra Board ll class 10th ll ssc ll #shorts

DU Library Attendant Question paper free pdf of 50 Lis Questions #libraryjobs

How to Set Up Your Photoshop Documents: Composition

Exploring the Power Automate Visual in Power BI | Sending emails

🏅TAMIL 1000+ QUESTIONS 🏅 PREVIOUS YEAR QUESTION PAPER OVERALL PDF |

army #bharti #notes #pdf free 2800 question 📄answer

What If No One Ever Died? | Immortality | The Dr Binocs Show | Peekaboo Kidz

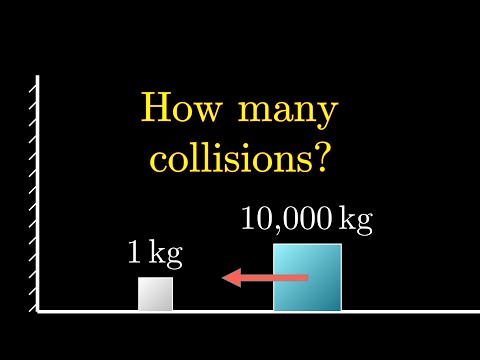

The most unexpected answer to a counting puzzle

Private Pilot Checkride Prep - 10 Multiple Choice Question Quiz and FREE PDF

Ponziani Opening TRAPS | Chess Tricks to WIN Fast | Brilliant Moves, Tactics, Ideas & Strategy

ABC Song | Wendy Pretend Play Learning Alphabet w/ Toys & Nursery Rhyme Songs

TNPSC question paper pdf | TNPSC previous year question papers last ten years | - TNPSC

NestJS in 100 Seconds
