A Helping Hand for LLMs (Retrieval Augmented Generation) - Computerphile

Mike Pound discusses how Retrieval Augmented Generation can improve the performance of Large Language Models.

Mike is based at the University of Nottingham's School of Computer Science.

This video was filmed and edited by Sean Riley.

Mano: Your LLM-powered helping hand

LLM Explained | What is LLM

AI as a helping hand, not the final answer: Promoting responsible usage in research

'Top 5 Business Applications of LLM Agents! 🚀 AI-Powered Super Assistants'

EfficientML.ai Lecture 13 - LLM Deployment Techniques (MIT 6.5940, Fall 2024)

Are AI Coding Assistants Helping or Hurting You? my thoughts on LLM coding and AI assistants.

Jay Alammar on LLMs, RAG, and AI Engineering

Can LLMs Generate Novel Research Ideas? A Large Scale Human Study with 100+ NLP Researchers 2409

Lessons From A Year Building With LLMs

Best 12 AI Tools in 2023

Hands-On with Large Language Models: A Must-Read #llms

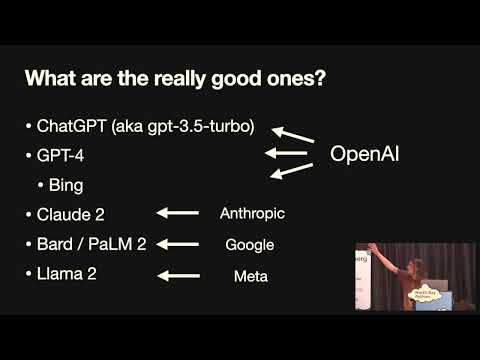

'Catching up on the weird world of LLMs' - Simon Willison (North Bay Python 2023)

Retrieval Augmented Generation explained for Beginners | RAG in LLMs

All Indian LLMs explained in 10 minutes

EfficientML.ai Lecture 14 - LLM Post-Training (MIT 6.5940, Fall 2024)

EfficientML.ai Lecture 13 - LLM Deployment Techniques (MIT 6.5940, Fall 2024, Zoom Recording)

Hands on LLM and RAG

Building with Instruction-Tuned LLMs: A Step-by-Step Guide

Generative AI with Large Language Models: Hands-On Training feat. Hugging Face and PyTorch Lightning

EfficientML.ai Lecture 14 - LLM Post-Training (MIT 6.5940, Fall 2024, Zoom Recording)

Mirror, mirror: LLMs and the illusion of humanity - Jodie Burchell - NDC Oslo 2024

Arize AI Phoenix: Open-Source Tracing & Evaluation for AI (LLM/RAG/Agent)

Running Microsoft Phi-3 LLM using Hugging Face | Hands-on deployment
